An Opinionated Guide to Using AI Right Now

What AI to use in late 2025

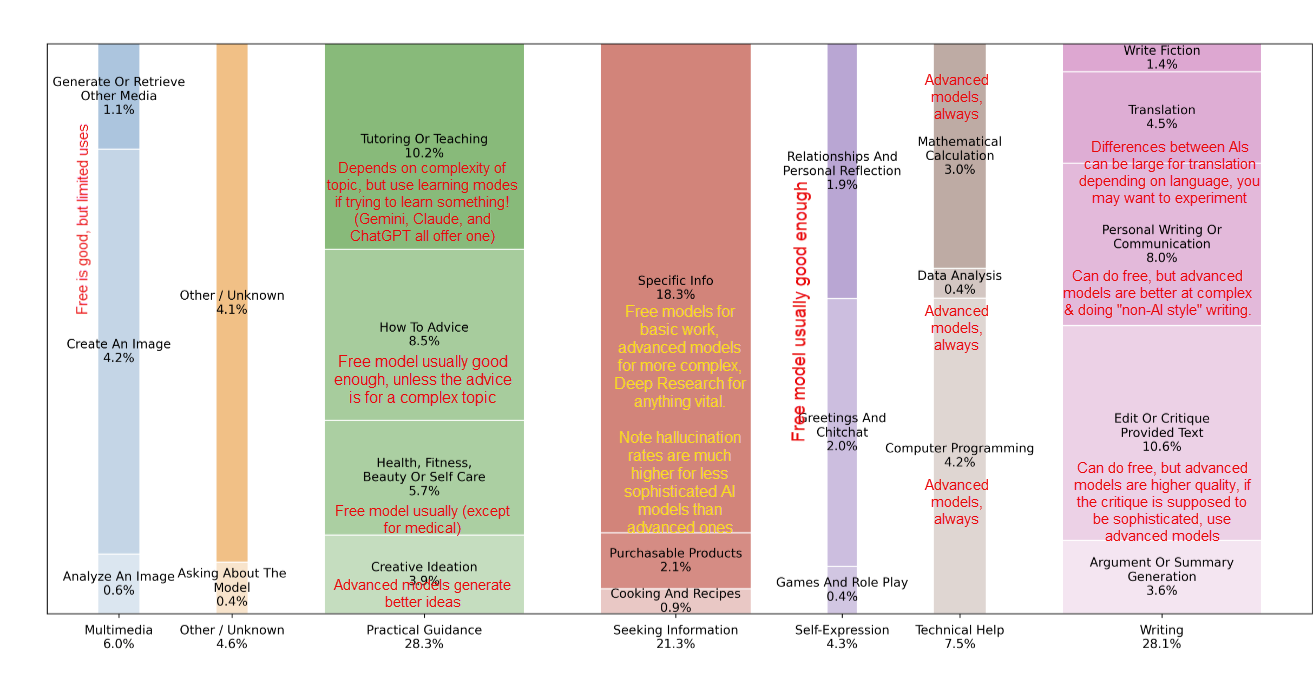

Every few months I write an opinionated guide to how to use AI¹, but now I write it in a world where about 10% of humanity uses AI weekly. The vast majority of that use involves free AI tools, which is often fine… except when it isn’t. OpenAI recently released a breakdown of what people actually use ChatGPT for (way less casual chat than you’d think, way more information-seeking than you’d expect). This means I can finally give you advice based on real usage patterns instead of hunches. I annotated OpenAI’s chart with some suggestions about when to use free versus advanced models.

If the chart suggests that a free model is good enough for what you use AI for, pick your favorite and use it without worrying about anything else in the guide. You basically have nine or so choices, because there are only a handful of companies that make cutting-edge models. All of them offer some free access. The four most advanced AI systems are Claude from Anthropic, Google’s Gemini, OpenAI’s ChatGPT, and Grok by Elon Musk’s xAI. Then there are the open weights AI families, which are almost (but not quite) as good: Deepseek, Kimi, Z and Qwen from China, and Mistral from France. Together, variations on these AI models take up the first 35 spots in almost any rating system of AI. Any other AI service you use that offers a cutting-edge AI, from Microsoft Copilot to Perplexity (both of which offer some free use), is powered by one or more of these nine AIs as its base.

How should you pick among them? Some free systems (like Gemini and Perplexity) do a good job with web search, while others cannot search the web at all. If you want free image creation, the best option is Gemini, with ChatGPT and Grok as runners-up. But, ultimately, these AIs differ in many small ways, including privacy policies, levels of access, capabilities, the approach they take to ethical issues, and “personality.” And all of these things fluctuate over time. So pick a model you like based on these factors and use it. However, if you are considering potentially upgrading to a paid account, I would suggest starting with the free accounts from Anthropic, Google, or OpenAI. If you just want to use free models, the open weights models and aggregation services like Microsoft Copilot have higher usage limits.

Now on to the hard stuff.

Picking an Advanced AI System

If you want to use an advanced AI seriously, you’ll need to pay either $20 or around $200 a month, depending on your needs (though companies are now experimenting with other pricing models in some parts of the world). The $20 tier works for the vast majority of people, while the $200 tier is for people with complex technical and coding needs.

You will want to pick among three systems to spend your $20: Claude from Anthropic, Google’s Gemini, and OpenAI’s ChatGPT. With all of the options, you get access to advanced, agentic, and fast models, a voice mode, the ability to see images and documents, the ability to execute code, good mobile apps, the ability to create images and video (though Claude lacks these two), and the ability to do Deep Research. They all have different personalities and strengths and weaknesses, but for most people, just selecting the one they like best will suffice. Some people, especially big users of X, might want to consider Grok by Elon Musk’s xAI, which has some of the most powerful AI models and is rapidly adding features, but has not been as transparent about product safety as some of the other companies. Microsoft’s Copilot offers many of the features of ChatGPT and is accessible to users through Windows, but it can be hard to control what models you are using and when. So, for most people, just stick with Gemini, Claude, or ChatGPT.

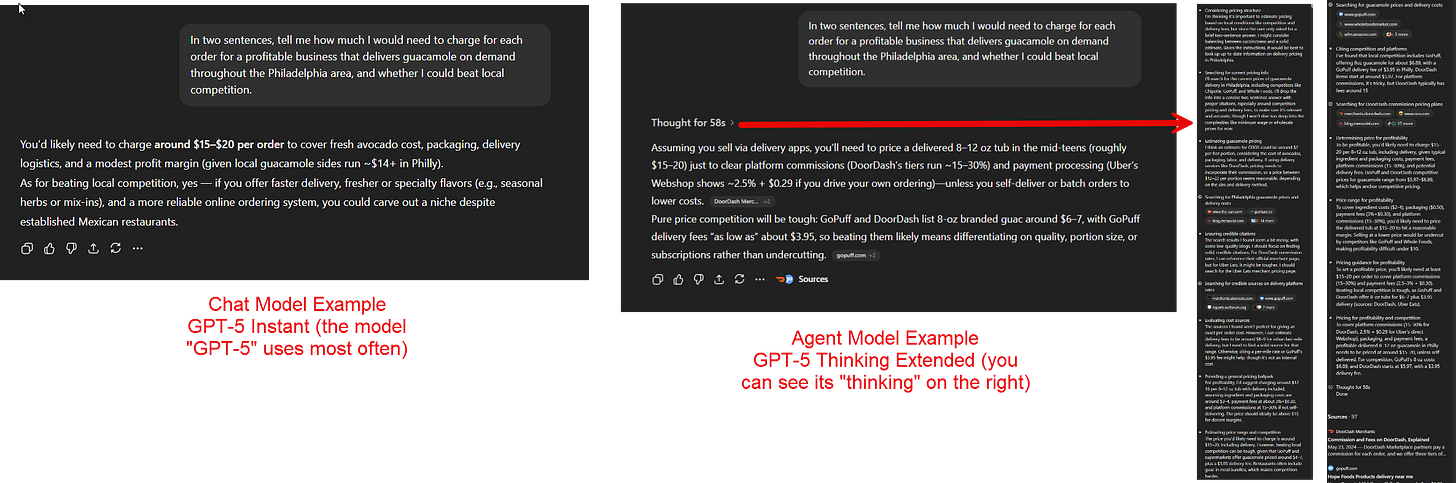

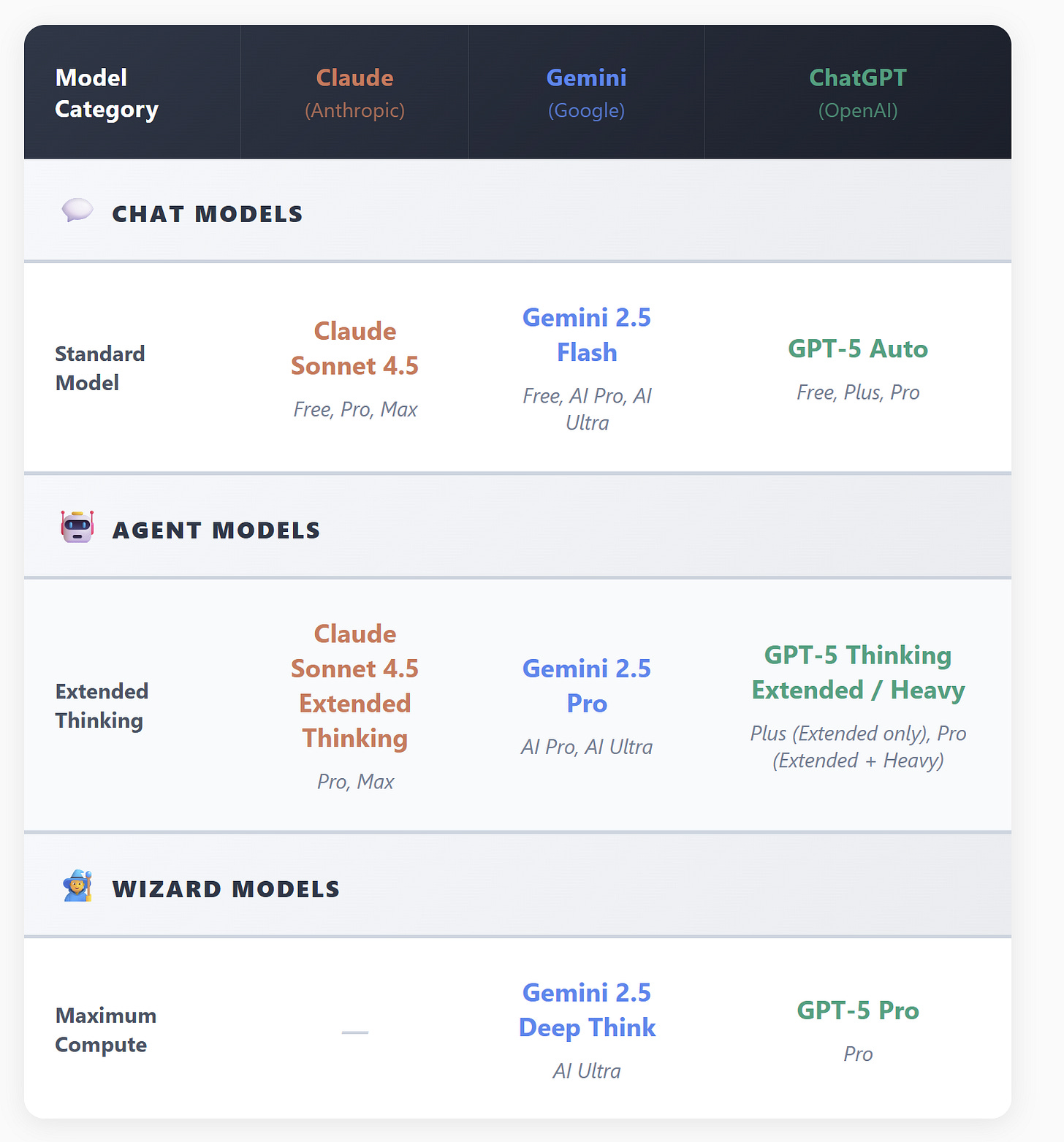

Just picking one of these three isn’t enough, however, because each AI system has multiple AI models to select from. Chat models are generally the ones you get for free and are best for conversation, because they answer quickly and are usually the most personable. Agent models take longer to answer but can autonomously carry out many steps (searching the web, using code, making documents), getting complex work done. Wizard models take a very long time and handle very complex academic tasks. For real work that matters, I suggest using Agent models, because they are more capable and consistent and are much less likely to make errors (but remember that all AI models still have a lot of randomness associated with them and may answer in different ways if you ask the same question again).

Picking the model

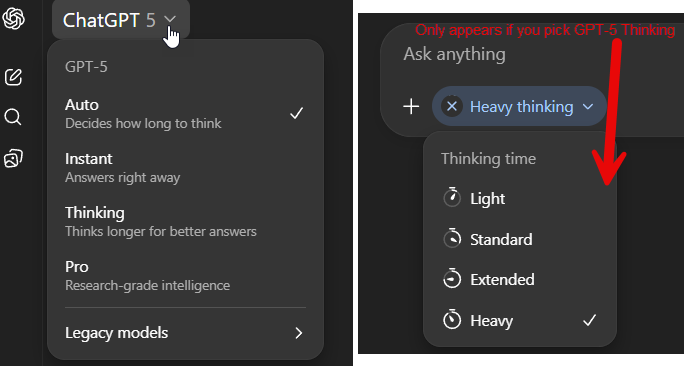

For ChatGPT, no matter whether you use the free or paid version, the default model you are given is “ChatGPT 5”. The issue is that GPT-5 is not one model but many, from the very weak GPT-5 mini to the very good GPT-5 Thinking to the extremely powerful GPT-5 Pro. When you select GPT-5, what you are really getting is “auto” mode, where the AI decides which model to use, often a less powerful one. By paying, you get to decide which model to use, and, to further complicate things, you can also select how hard the model “thinks” about the answer. For anything complex, I always manually select GPT-5 Thinking Extended (on the $20 plan) or GPT-5 Thinking Heavy (if you are paying for the $200 plan). For a really hard problem that requires a lot of thinking, you can pick GPT-5 Pro, the strongest model, which is only available at the highest cost tier.

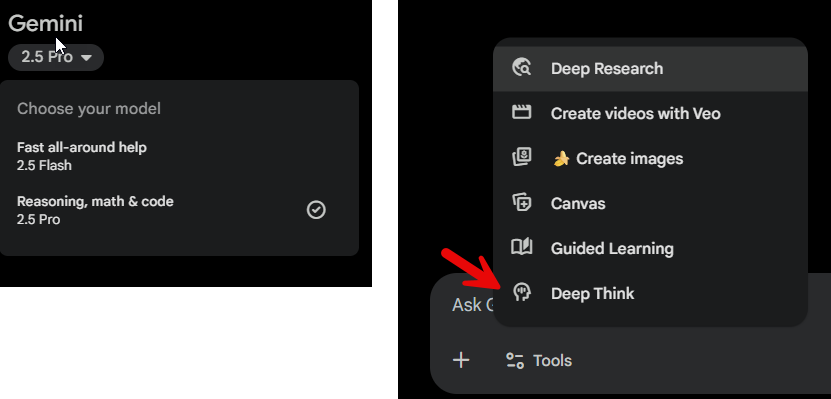

For Gemini, you only have two options: Gemini 2.5 Flash and Gemini 2.5 Pro, but, if you pay for the Ultra plan, you get access to Gemini Deep Think (which is in another menu). At this point, Gemini 2.5 is the weakest of the major AI models (though it is still quite capable, and Deep Think is very powerful), but a new Gemini 3 is expected at some point in the coming months.

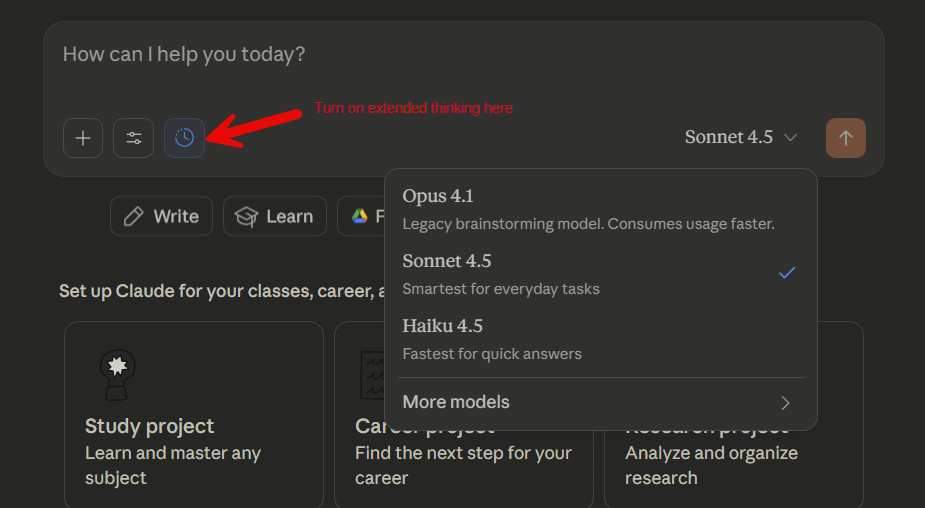

Finally, Claude makes it relatively easy to pick a model. You probably want to use Sonnet 4.5 for everything, with the only question being whether you select extended thinking (for harder problems). Right now, Claude does not have an equivalent to GPT-5 Pro.

If you are using the paid version of any of these models and want to make sure your data is never used to train a future AI, you can turn off training easily for ChatGPT and Claude without losing any functionality, but turning it off for Gemini costs you some functionality. All of the AIs also come with a range of other features like projects and memory that you may want to explore as you get used to using them.

Getting better answers

The biggest uses for AI were practical guidance and getting information, and there are two ways to dramatically improve the quality of your results for those kinds of problems: triggering Deep Research mode and connecting the AI to your data (if you feel comfortable doing that). You can use either or both.

Deep Research is a mode where the AI conducts extensive web research over 10-15 minutes before answering. Deep Research is a key AI feature for most people, even if they don’t know it yet, and it is useful because it can produce very high-quality reports that often impress the information professionals (lawyers, accountants, consultants, market researchers) I speak to. Deep Research reports are not error-free but are far more accurate than just asking the AI for something, and the citations tend to actually be correct. Also note that each of the Deep Research tools works a little differently, with different strengths and weaknesses. Even without Deep Research, GPT-5 Thinking does a lot of research on its own, and Claude has a “medium research” option where you turn on Web Search but not research.

Connections to your own data are very powerful and increasingly available for everything from Gmail to SharePoint. I have found Claude to be especially good in integrating searches across email, calendars, various drives, and more - ask it “give me a detailed briefing for my day” when you have connected it to your accounts and you will likely find it impressive. This is an area where the AI companies are putting in a lot of effort, and where offerings are evolving rapidly.

Multimodal inputs

I have mentioned it before, but an easy way to use AI is just to start with voice mode. The two best implementations of voice mode are in the Gemini app and ChatGPT’s app and website. Claude’s voice mode is weaker than those of the other two systems. Note that the voice models are optimized for chat (including all of the small pauses and intakes of breath designed to make it feel like you are talking to a person), so you don’t get access to the more powerful models this way.

All the models also let you put all sorts of data into them: you can now upload PDFs, images and even video (for ChatGPT and Gemini). For the app versions, and especially ChatGPT and Gemini, one great feature is the ability to share your screen or camera. Point your phone at a broken appliance, a math problem, a recipe you’re following, or a sign in a foreign language. The AI sees what you see and responds in real-time. It makes old assistants like Siri and Alexa feel very primitive.

Making Things for You: Images, Video, Code, and Documents

Claude and ChatGPT can now make PowerPoints and Excel files of high quality (right now, Claude has a lead in these two document formats, but that may change at some point). All three systems can also produce a wide variety of other outputs by writing code. To get Gemini to do this reliably, you need to select the Canvas option when you want it to run code or produce separate outputs. Claude has a specialized artifacts section to show some examples of what it can make with code. There are also very powerful specialized coding tools from each of these companies, but those are a bit too complex to cover in this guide.

ChatGPT and Gemini will also make images for you if you ask (Claude cannot). Gemini has the strongest AI image generation model right now. Both Gemini and OpenAI also have strong video generation capabilities in Veo 3.1 and Sora 2. Sora 2 is really built as a social media application that allows you to put yourself into any video, while Veo 3.1 is more generally focused. They both produce videos with sound.

As many of you know, my test of any new AI image or video model is whether it can make an otter using Wi-Fi on an airplane. That is no longer a challenge. So here is Sora 2 showing an otter on an airplane as a nature documentary... and an 80s music video... and a modern thriller... and a 50s low budget SciFi film... and a safety video, and a film noir... and anime... and a 90s video game cutscene... and a French arthouse film.

I have been warning about this for years, but, as you can see, you really can’t trust anything you see online anymore. Please take all videos with a grain of salt. And, as a reminder, this is what you got if you prompted an AI to provide the image of an otter on an airplane four years ago. Things are moving fast.

Quick Tips

Beyond the basics of selecting models, there are a few things that come up quite often that are worth considering:

Hallucinations: In many ways, hallucinations are far less of a concern than they used to be, as newer AI models are better at not hallucinating. However, no matter how good the AI is, it will still make errors and mistakes and still give you confident answers where it is wrong. AI models can also hallucinate about their own capabilities and actions. Answers are more likely to be right when they come from advanced models, and when the AI did web searches. And remember, the AI doesn’t know “why” it did something, so asking it to explain its logic will not get you anywhere. However, if you find issues, the thinking trace of AI models can be helpful.

Sycophancy and Personality: All of the AI chatbots have become more engaging and likeable. On one hand, that makes them more fun to use; on the other, it risks making AIs seem like people when they are not, which creates a danger that people may form stronger attachments to AI. A related issue is sycophancy, where the AI agrees with what you say. The reasons for this are complicated, but when you need real feedback, explicitly tell the AI to act as a critic. Otherwise, you might be talking to a very sophisticated yes-man.

Give the AI context to work with. Though memory features are being added, most AI models only know basic user data and the information in the current chat; they do not remember or learn about you beyond that. So, you need to provide the AI with context: documents, images, PowerPoints, or even just an introductory paragraph about yourself can help. Use the file option to upload files and images whenever you need, or else use the connectors we discussed earlier.

Don’t worry too much about prompting “well”: Older AI models required you to generate a prompt using techniques like chain-of-thought. But as AI models get better, the importance of this fades and the models get better at figuring out what you want. In a recent series of experiments, we have discovered that these techniques don’t really help anymore (and no, threatening them or being nice to them does not seem to help on average).

Experiment and have fun: Play is often a good way to learn what AI can do. Ask a video or image model to make a cartoon, ask an advanced AI to turn your report or writing into a game, do a deep research report on a topic that you are excited about, ask the AI to guess where you are from a picture, show the AI an image of your fridge and ask for recipe ideas, work with the AI to plot out a dream trip. Try things and you will learn the limits of the system.

Where this goes

I started this guide by mentioning that 10% of humanity uses AI weekly. By the time I write the next update in a few months, that number will likely be higher, the models will be better, and some of the specific recommendations I made today will be outdated. What won’t change is the fact that people who learn to use these systems well will find ways to benefit from them, and to build intuition for the future.

The chart at the top of this post shows what people use AI for today. But I’d bet that in two years, that chart looks completely different. And that isn’t just because AI changed what it can do, but also because users figured out what it should do. So, pick a system and start with something that actually matters to you, like a report you need to write, a problem you’re trying to solve, or a project you have been putting off. Then try something ridiculous just to see what happens. The goal isn’t to become an AI expert. It’s to build intuition about what these systems can and can’t do, because that intuition is what will matter as these tools keep evolving.

The future of AI isn’t just about better models. It’s about people figuring out what to do with them.

¹ This is an opinionated guide because, like all of my writing on this Substack, social media, and my books, I write it all myself and I only get AI feedback when I am done with a draft. I might make mistakes, and my opinion may not be yours, but I do not take money from any of the AI companies, so they very much are my opinions.
