On Working with Wizards

Verifying magic on the jagged frontier

In my book, Co-Intelligence, I outlined a way that people could work with AI, which was, rather unsurprisingly, as a co-intelligence. Teamed with a chatbot, humans could use AI as a sort of intern or co-worker, correcting its errors, checking its work, co-developing ideas, and guiding it in the right direction. Over the past few weeks, I have come to believe that co-intelligence is still important but that the nature of AI is starting to point in a different direction. We're moving from partners to audience, from collaboration to conjuring.

A good way to illustrate this change is to ask an AI to explain what has happened since I wrote the book. I fed my book and all 140 or so One Useful Thing posts (incidentally, I can’t believe I have written that many posts!) into NotebookLM and chose the new video overview option with a basic prompt to make a video about what has happened in the world of AI.

A few minutes later, I got this. And it is pretty good. Good enough that I think it is worth watching to get an update on what has happened since my book was written.

But how did the AI pick the points it made? I don’t know, but they were pretty good. How did it decide on the slides to use? I don’t know, but they were also pretty on target (though images remain a bit of a weak point, as it didn’t show me the promised otter). Was it right? That seemed like something I should check.

So, I went through the video several times, checking all the facts. It got all the numbers right, including the data on MMLU scores and the results of AI performance on the neurosurgery exam data (I am not even sure when I cited that material). My only real issue was that it should have noted that I was one of several co-authors in our study of Boston Consulting Group that also introduced the term “jagged frontier.” Also, I wouldn’t have said everything the way the AI did (it was a little bombastic, and my book is not out-of-date yet!), but there were no substantive errors.

I think this process is typical of the new wave of AI, for an increasing range of complex tasks, you get an amazing and sophisticated output in response to a vague request, but you have no part in the process. You don’t know how the AI made the choices it made, nor can you confirm that everything is completely correct. We're shifting from being collaborators who shape the process to being supplicants who receive the output. It is a transition from working with a co-intelligence to working with a wizard. Magic gets done, but we don’t always know what to do with the results. This pattern — impressive output, opaque process — becomes even more pronounced with research tasks.

Asking for Magic

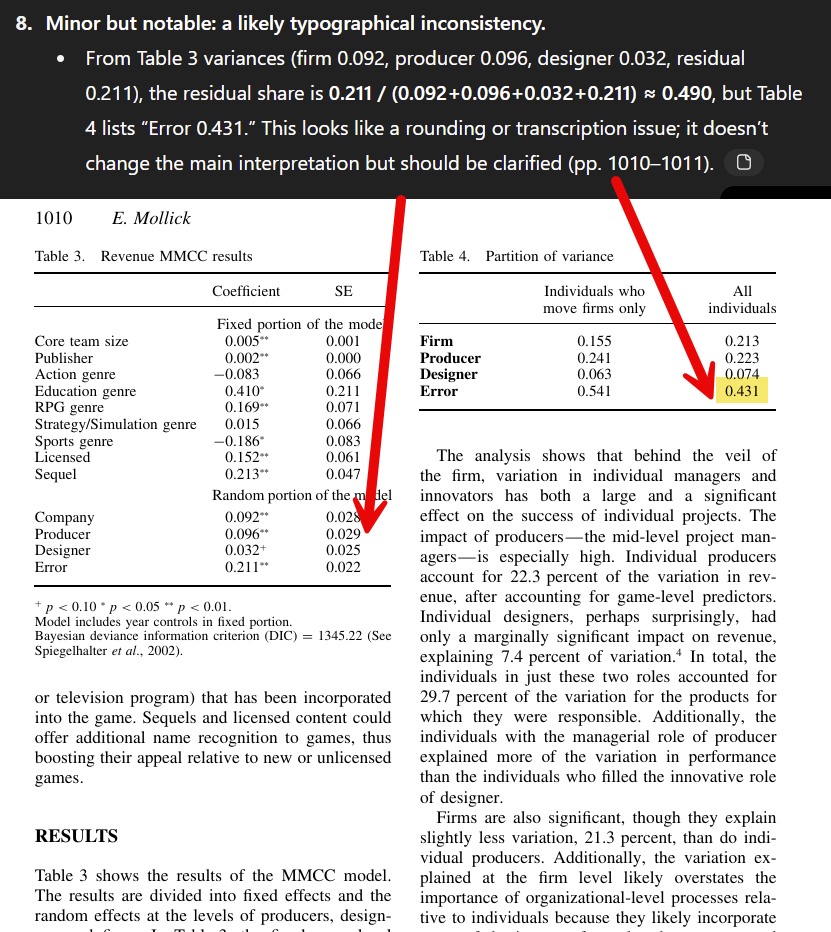

Right now, no AI model feels more like a wizard than GPT-5 Pro, which is only accessible to paying users. GPT-5 Pro is capable of some frankly amazing feats. For example, I gave it an academic paper to read with the instructions “critique the methods of this paper, figure out better methods and apply them.” This was not just any paper, it was my job market paper, which means my first major work as an academic. It took me over a year to write and was read carefully by many of the brightest people in my field before finally being peer reviewed and published in a major journal.

Nine minutes and forty seconds later, I had a very detailed critique. This wasn’t just editorial criticism, GPT-5 Pro apparently ran its own experiments using code to verify my results, including doing Monte Carlo analysis and re-interpreting the fixed effects in my statistical models. It had many suggestions as a result (though it fortunately concluded that “the headline claim [of my paper] survives scrutiny”), but one stood out. It found a small error, previously unnoticed. The error involved two different sets of numbers in two tables that were linked in ways I did not explicitly spell out in my paper. The AI found the minor error, no one ever had before.

Again, I was left with the wizard problem: was this right? I checked through the results, and found that it was, but I still have no idea of what the AI did to discover this problem, nor whether the other things it claimed to have done happened as described. But I was impressed by GPT-5 Pro’s analysis, which is why I now throw all sorts of problems, big and small at the model: Is the Gartner hype cycle real? Did census data show AI use declining at large firms? Just ask GPT-5 Pro and get the right answer. I think. I haven’t found an error yet, but that doesn’t mean there aren’t any. And, of course, there are many other tasks that the AI would fail to deliver any sort of good answer for. Who knows with wizards?

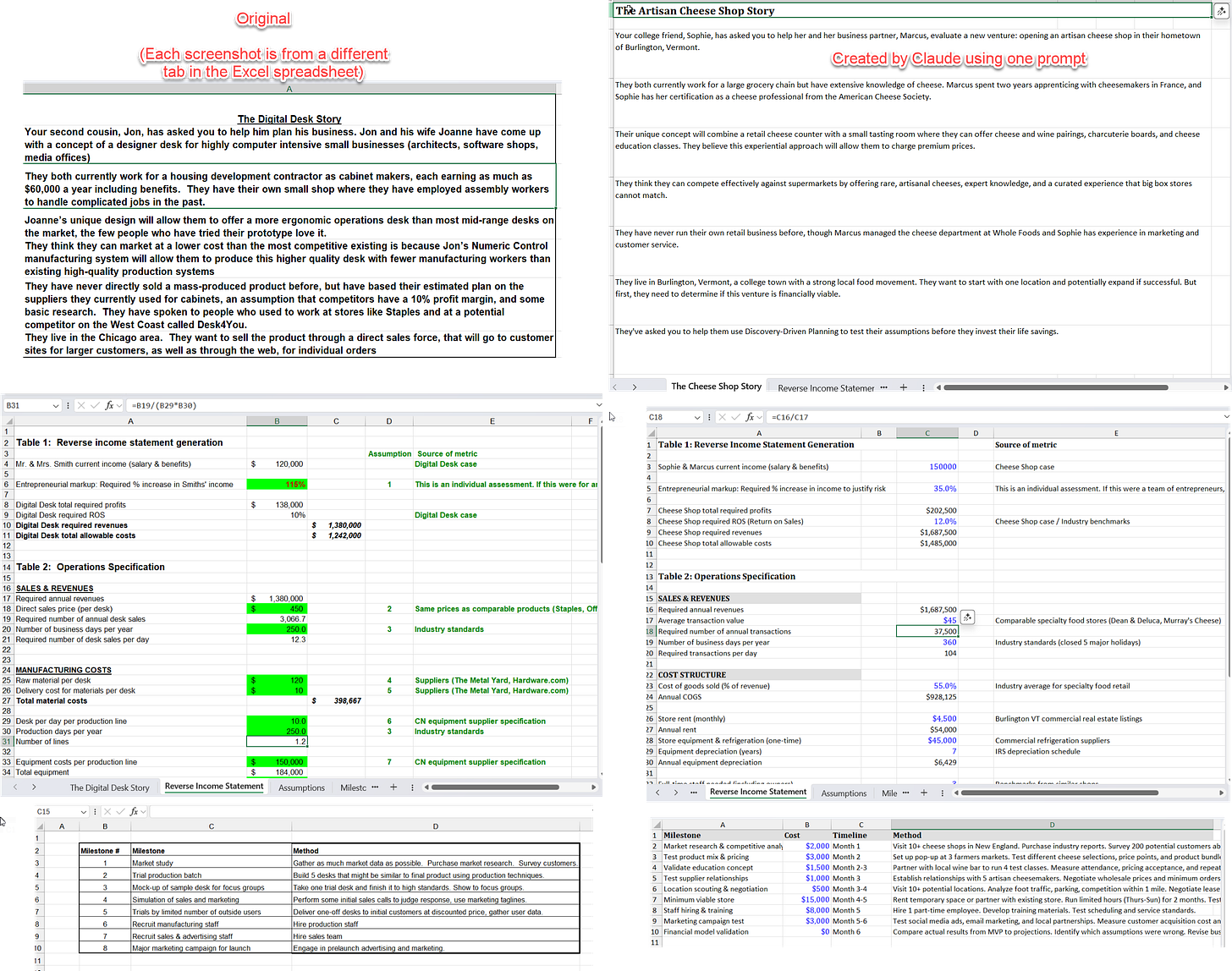

To see how this might soon apply to work more broadly, consider another advanced AI, Claude 4.1 Opus, which recently gained the ability to work with files. It is especially talented at Excel, so I gave it a hard challenge on an Excel file I knew well. There is an exercise I used in my entrepreneurship classes that involves analyzing the financial model of a small desk manufacturing business as a lesson about how to plan despite uncertainty. I gave Claude the old, multi-tab Excel file, and asked the AI to update it for a new business - a cheese shop - while still maintaining the goal of the overall exercise.

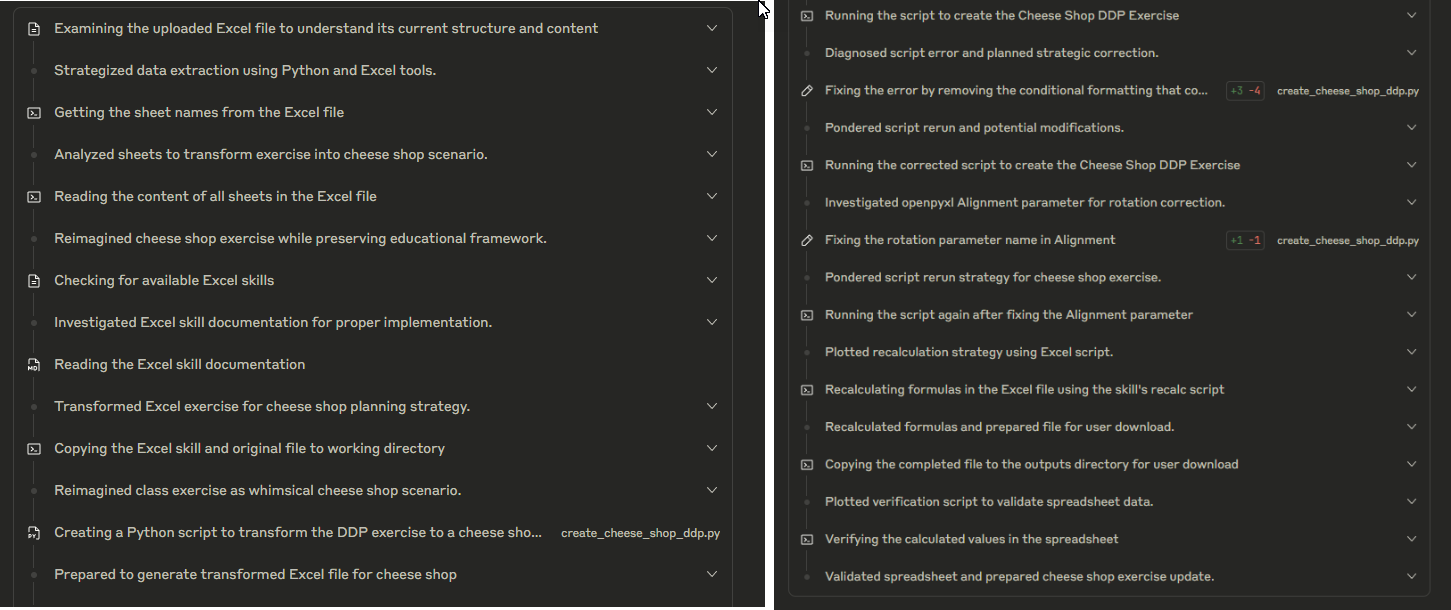

With just that instruction, it read the lesson plan and the old spreadsheets, including their formulas, and created a new one, updating all of the information to be appropriate for a cheese shop. A few minutes later, with just the one prompt, I had a new, transformed spreadsheet downloaded on my computer, one that had entirely new data while still communicating the key lesson.

Again, the wizard didn’t tell me the secret to its tricks, so I had to check the results over carefully. From what I saw, they seemed very good, preserving the lessons in a new context. I did spot a few issues in the formula and business modelling that I would do differently (I would have had fewer business days per year, for example), but that felt more like a difference of opinion than a substantive error.

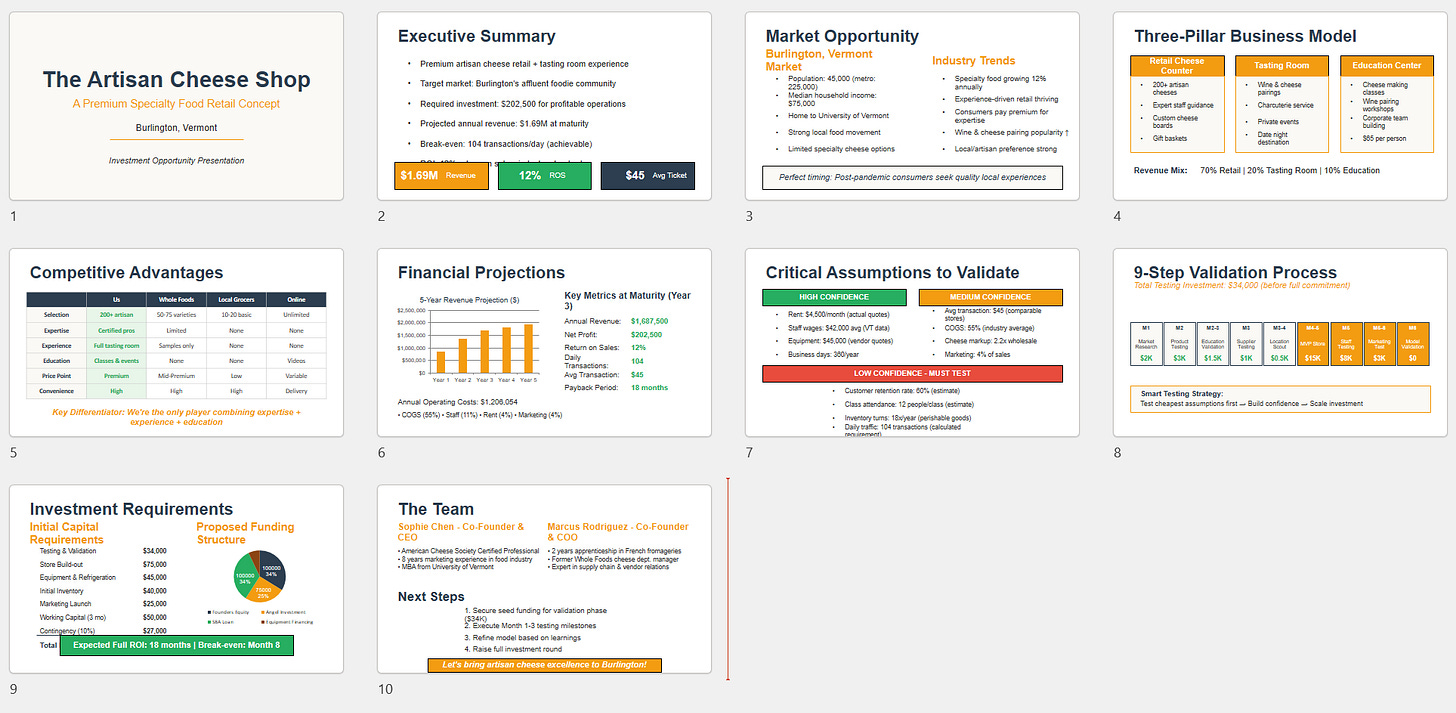

Curious to see how far Claude could go, and since everyone always asks me whether AI can do PowerPoint, I also prompted: “great, now make a good PowerPoint for this business” and got the following result.

This is a pretty solid start to a pitch deck, and one without any major errors, but it also isn’t ready-to-go. This emphasizes the jagged frontier of AI: it is very good at some things and worse at others in ways that are hard to predict without experience. I have been showing you examples within the ever-expanding frontier of AI abilities, but that doesn’t mean that AI can do everything with equal ease. But my focus is less on the expanding range of AI ability in this post, than about our changing relationships with AIs.

The Problems with Wizards

These new AI systems are essentially agents, AI that can plan and act autonomously toward given goals. When I asked Claude to change my spreadsheet, it planned out steps and executed them, from reading the original spreadsheet to coding up a new one. But it also adjusted to unexpected errors, twice fixing the spreadsheet (without me asking) and verifying its answers multiple times. I didn’t get to select these steps, in fact, in the new wave of agents powered by reinforcement learning, no one selects the steps, the models learn their own approach to solving problems.

Not only can I not intervene, I also cannot be entirely sure what the AI system actually did. The steps that Claude reported are mere summaries of its work, GPT-5 Pro provides even less information, while NotebookLM gives you almost no insights at all into its process in creating a video. Even if I could see the steps, however, I would need to be an expert in many fields - from coding to entrepreneurship - to really have a sense of what the AI was doing. And then, of course, there is the question of accuracy. How can I tell if the AI is accurate without checking every fact? And even if the facts are right, maybe I would have made a different judgement about how to present or frame them. But I can’t do anything, because wizards don’t want my help and work in secretive ways that even they can’t explain.

The hard thing about this is that the results are good. Very good. I am an expert in the three tasks I gave AI in this post, and I did not see any factual errors in any of these outputs, though there were some minor formatting errors and choices I would have made differently. Of course, I can’t actually tell you if the documents are error-free without checking every detail. Sometimes that takes far less time than doing the work yourself, sometimes it takes a lot more. Sometimes the AI’s work is so sophisticated that you couldn’t check it if you tried. And that suggests another risk we don't talk about enough: every time we hand work to a wizard, we lose a chance to develop our own expertise, to build the very judgment we need to evaluate the wizard's work.

But I come back to the inescapable point that the results are good, at least in these cases. They are what I would expect from a graduate student working for a couple hours (or more, in the case of the re-analysis of my paper), except I got them in minutes.

This is the issue with wizards: We're getting something magical, but we're also becoming the audience rather than the magician, or even the magician's assistant. In the co-intelligence model, we guided, corrected, and collaborated. Increasingly, we prompt, wait, and verify… if we can.

So what do we do with our wizards? I think we need to develop a new literacy: First, learn when to summon the wizard versus when to work with AI as a co-intelligence or to not use AI at all. AI is far from perfect, and in areas where it still falls short, humans often succeed. But for the increasing number of tasks where AI is useful, co-intelligence, and the back-and-forth it requires, is often superior to a machine alone. Yet, there are, increasingly, times when summoning a wizard is best, and just trusting what it conjures.

Second, we need to become connoisseurs of output rather than process. We need to curate and select among the outputs the AI provides, but more than that, we need to work with AI enough to develop instincts for when it succeeds and when it fails. We have to learn to judge what's right, what's off, and what's worth the risk of not knowing. This creates a hard problem for education: How do you train someone to verify work in fields they haven't mastered, when the AI itself prevents them from developing mastery? Figuring out how to address this gap is increasingly urgent.

Finally, embrace provisional trust. The wizard model means working with “good enough” more often, not because we're lowering standards, but because perfect verification is becoming impossible. The question isn't “Is this completely correct?” but “Is this useful enough for this purpose?”

We are already used to trusting technological magic. Every time we use GPS without understanding the route, or let an algorithm determine what we see, we're trusting a different type of wizard. But there's a crucial difference. When GPS fails, I find out quickly when I reach a dead end. When Netflix recommends the wrong movie, I just don't watch it. But when AI analyzes my research or transforms my spreadsheet, the better it gets, the harder it becomes to know if it's wrong. The paradox of working with AI wizards is that competence and opacity rise together. We need these tools most for the tasks where we're least able to verify them. It’s the old lesson from fairy tales: the better the magic, the deeper the mystery. We'll keep summoning our wizards, checking what we can, and hoping the spells work. At nine minutes for a week's worth of analysis, how could we not? Welcome to the age of wizards.

While reading this, a quote from Lord of the Rings sprang to mind which I hope isn’t foreshadowing something: “Do not meddle in the affairs of wizards, for they are subtle and quick to anger.” I’m also waiting for the day I question an output and the AI responds with, “Just trust me, bro.”

It seems a lot of the problem you are describing flows from system opacity, which is fundamentally a design choice. At the end of the day an LLM agent is just a loop of tool calls and such, which the designers can choose to expose to the user or not. The difficulty of verifying is directly proportional to deliberate system opacity. As you noted, some platforms choose to expose more than others.

Claude Code is a good example of this. Watch the reasoning and tool calls in real time. I frequently interrupt if I see Claude going down a wrong path, or banging its head against a problem because it missed something fundamental. Or most often I catch Claude struggling with a failing test and then just saying to itself "This isn't a big deal, I should just summarize what I've completed for the user" and then I'll be like, "so hey saw you didn't actually get that test to pass, what's up?"

When I'm building my dumbs little AI applications, transparency is always at the core of the app - exposing to the user what context the model was working with, what the model did, and the model's stated reasoning, specifically for verification purposes. This is a design choice.

Users can and should demand transparency, at least the option of transparency, from the AI systems they use. Otherwise how can people actually use these tools for real things. "Where did you come up with this number?" "Dunno, AI told me" that's just not going to fly for real world uses. But "AI had access to such and such data and it did x,y,z and I verified x and y, though I couldn't verify z directly the reasoning and result made sense" is far more acceptable (and less prone to risk exposure).