The Bitter Lesson versus The Garbage Can

Does process matter? We are about to find out.

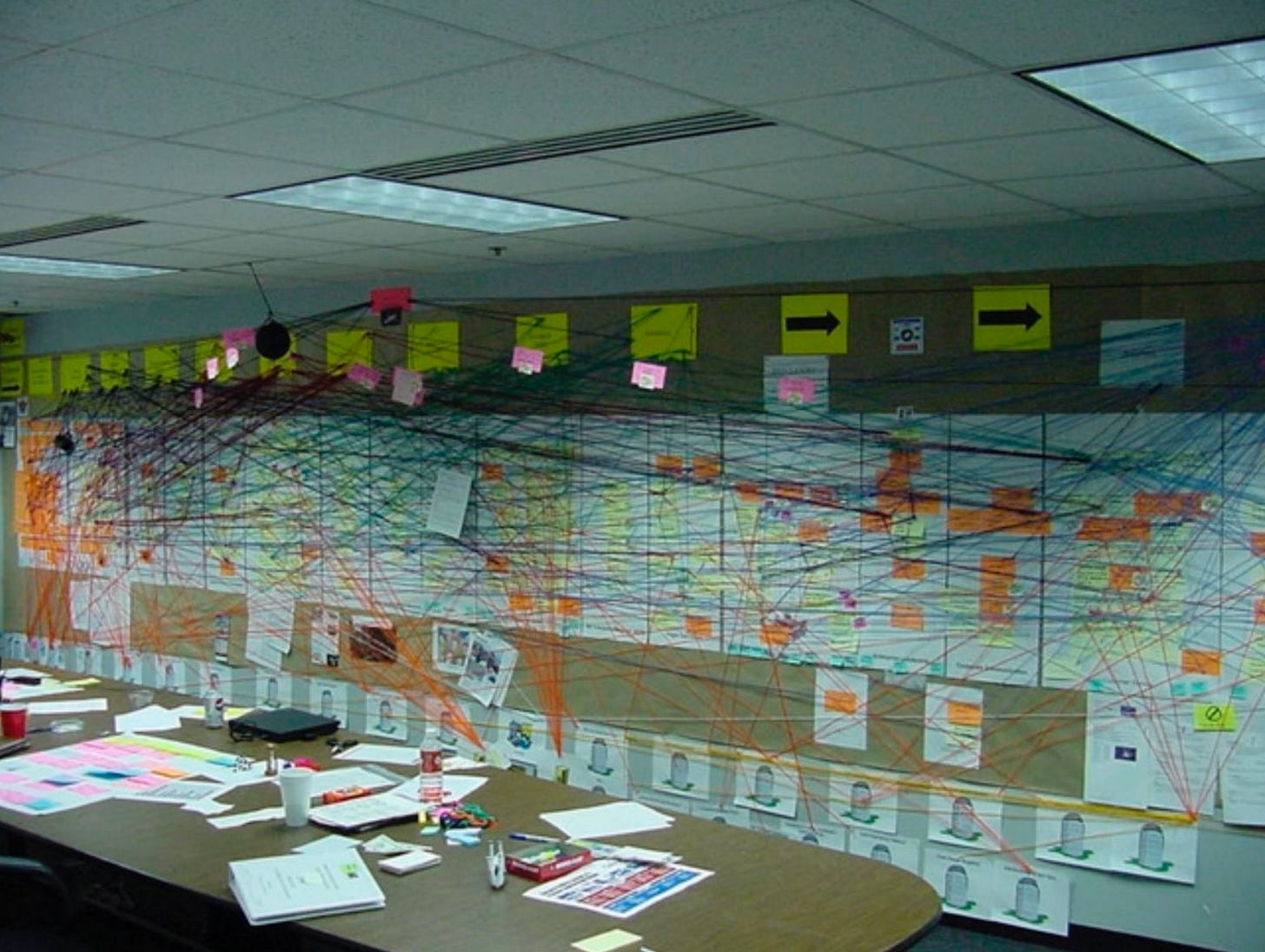

One of my favorite academic papers about organizations is by Ruthanne Huising, and it tells the story of teams that were assigned to create process maps of their company, tracing what the organization actually did, from raw materials to finished goods. As they created this map, they realized how much of the work seemed strange and unplanned. They discovered entire processes that produced outputs nobody used, weird semi-official pathways to getting things done, and repeated duplication of efforts. Many of the employees working on the map, once rising stars of the company, became disillusioned.

I’ll let Prof. Huising explain what happened next: “Some held out hope that one or two people at the top knew of these design and operation issues; however, they were often disabused of this optimism. For example, a manager walked the CEO through the map, presenting him with a view he had never seen before and illustrating for him the lack of design and the disconnect between strategy and operations. The CEO, after being walked through the map, sat down, put his head on the table, and said, "This is even more fucked up than I imagined." The CEO revealed that not only was the operation of his organization out of his control but that his grasp on it was imaginary.”

For many people, this may not be a surprise. One thing you learn studying (or working in) organizations is that they are all a bit of a mess. In fact, one classic organizational theory is called the Garbage Can Model. It views organizations as chaotic "garbage cans" where problems, solutions, and decision-makers are dumped in together, and decisions often happen when these elements collide randomly, rather than through a fully rational process. Of course, it is easy to take this view too far: organizations do have structures, decision-makers, and processes that actually matter. It is just that these structures often evolved and were negotiated among people, rather than being carefully designed and well-recorded.

The Garbage Can represents a world where unwritten rules, bespoke knowledge, and complex, undocumented processes are critical. It is this situation that makes AI adoption in organizations difficult, because even though 43% of American workers have used AI at work, they are mostly doing it in informal ways, solving their own work problems. Scaling AI across the enterprise is hard because traditional automation requires clear rules and defined processes, the very things Garbage Can organizations lack. Addressing the more general issues of AI and work requires carefully building AI-powered systems for specific use cases, mapping out the real processes, and making tools to solve the issues that are discovered.

This is a hard, slow process that suggests enterprise AI adoption will take time. At least, that's how it looks if we assume AI needs to understand our organizations the way we do. But AI researchers have learned something important about these sorts of assumptions.

The Bitter Lesson

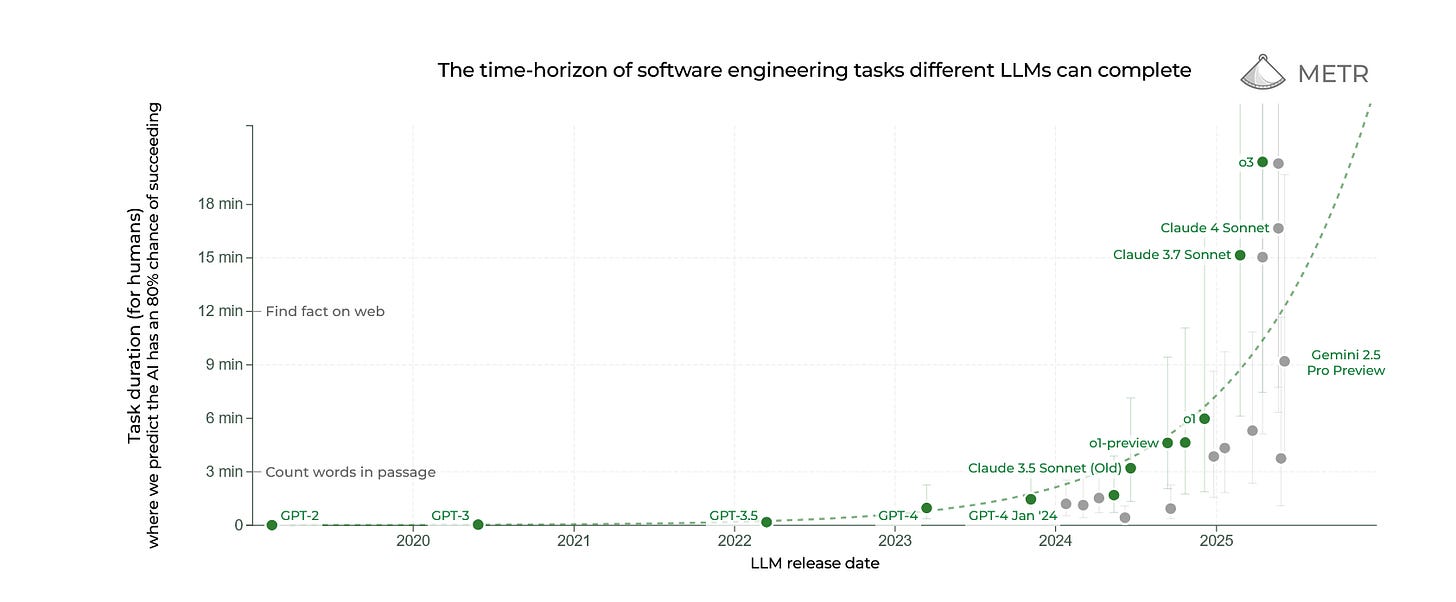

Computer scientist Richard Sutton introduced the concept of the Bitter Lesson in an influential 2019 essay where he pointed out a pattern in AI research. Time and again, AI researchers trying to solve a difficult problem, like beating humans in chess, turned to elegant solutions, studying opening moves, positional evaluations, tactical patterns, and endgame databases. Programmers encoded centuries of chess wisdom in hand-crafted software: control the center, develop pieces early, king safety matters, passed pawns are valuable, and so on. Deep Blue, the first chess computer to beat the world’s best human, used some chess knowledge, but combined that with the brute force of being able to search 200 million positions a second. In 2017, Google released AlphaZero, which could beat humans not just in chess but also in shogi and go, and it did it with no prior knowledge of these games beyond their rules. Instead, the AI model trained against itself, playing the games until it learned them. All of the elegant knowledge of chess was irrelevant; pure brute-force computing, combined with generalized approaches to machine learning, was enough to win. And that is the Bitter Lesson: encoding human understanding into an AI tends to be worse than just letting the AI figure out how to solve the problem, and adding enough computing power until it can do it better than any human.

The lesson is bitter because it means that our human understanding of problems built from a lifetime of experience is not that important in solving a problem with AI. Decades of researchers' careful work encoding human expertise was ultimately less effective than just throwing more computation at the problem. We are soon going to see whether the Bitter Lesson applies widely to the world of work.

Agents

While individuals can get a lot of benefits out of using chatbots themselves, a lot of excitement about how to use AI in organizations focuses on agents, a fuzzy term that I define as AI systems capable of taking autonomous action to accomplish a goal. As opposed to guiding a chatbot with prompting, you delegate a task to an agent, and it accomplishes it. However, previous AI systems have not been good enough to handle the full range of organizational needs; there is just too much messiness in the real world. This is why, when we created our first AI-powered teaching games a year ago, we had to carefully design each step in the agentic system to handle narrow tasks. And though the ability of AI systems to work autonomously is increasing very rapidly, they are still far from human-level on most complicated jobs and are easily led astray on complex tasks.

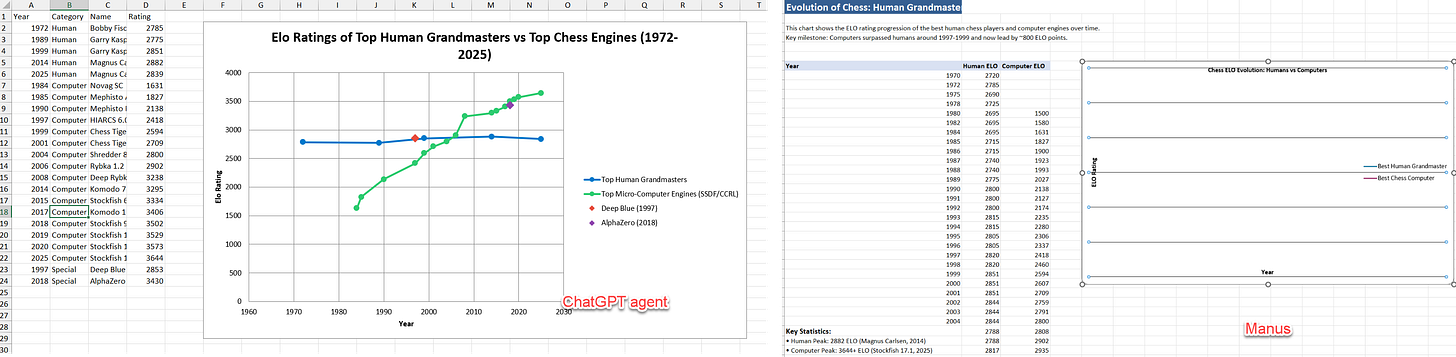

As an example of the state of the art in agentic systems, consider Manus, which uses Claude and a series of clever approaches to make AI agents that can get real work done. The Manus team has shared a lot of tips for building agents, involving some interesting bits of engineering and very elaborate prompt design. When writing this post, I asked Manus: “i need an attractive graph that compares the ELO of the best grandmaster and the ELO of the worlds best chess computer from the first modern chess computer through 2025.” And the system got to work. First, as it always does, Manus created a to-do list. Then it gathered data, wrote a number of files, and, after some minor adjustments I asked for, finally came up with the graph you can see on the left side above (the one without the box around the graph).

Why did it do these things in this order? Because Manus was built by hand, carefully crafted to be the best general-purpose agent available. There are hundreds of lines of bespoke text in its system prompts, including detailed instructions about how to build a to-do list. It incorporates hard-won knowledge on how to make agents work with today’s AI systems.

Do you see the potential problem? “Carefully crafted,” “bespoke,” “incorporates hard-won knowledge” — exactly the kind of work the Bitter Lesson tells us to avoid because it will eventually be made irrelevant by more general-purpose techniques.

It turns out there is now evidence that replacing careful crafting with general-purpose training may be possible, with the recent release of ChatGPT agent (an uninspiring name, but at least it is clear, a big step forward for OpenAI!). ChatGPT agent represents a fundamental shift. It is not trained on the process of doing work; instead, OpenAI used reinforcement learning to train their AI on the actual final outcomes. For example, they may not teach it how to create an Excel file the way a human would; they would simply rate the quality of the Excel files it creates until it learns to make a good one, using whatever methods the AI develops. To illustrate how reinforcement learning and careful crafting lead to similar outcomes, I gave the exact same chess prompt to ChatGPT agent and got the graph on the right above. But this time there was no to-do list and no script to follow. Instead, the agent charted whatever mysterious course was required to get me the best output it could, according to its training. You can see an excerpt of that below:

But you might notice a few differences between the two charts, besides their appearance. For example, each has a different rating for Deep Blue’s performance because the Elo rating for Deep Blue was never officially measured. The rating from Manus was based on a basic search, which found a speculative Reddit discussion, while ChatGPT agent, trained with the reinforcement learning approaches used in Deep Research, turned up more credible sources, including an Atlantic article, to back up its claim. In a similar way, when I asked both agents to re-create the graph by making a fully functional Excel file, ChatGPT’s version worked, while Manus’s had errors.
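To make the idea of training on outcomes rather than process a little more concrete, here is a toy sketch. It is not how OpenAI trains ChatGPT agent; it swaps in a much simpler technique, an epsilon-greedy bandit, and every name and number in it (the strategy labels, the quality values, `rate_output`, `train`) is invented for illustration. The learner is never told how to do the task. It only receives a noisy score for each finished output and gradually favors whatever approach scores best.

```python
import random

# Hypothetical ways of doing a task. The learner gets no description of them;
# it only ever sees a score for the finished output.
STRATEGIES = ["copy_last_year", "follow_manual", "improvised_shortcut"]

# Assumed average output quality per strategy (made-up numbers).
TRUE_QUALITY = {
    "copy_last_year": 0.3,
    "follow_manual": 0.6,
    "improvised_shortcut": 0.8,
}


def rate_output(strategy, rng):
    """Stand-in for a grader: a noisy score for the final output only."""
    return TRUE_QUALITY[strategy] + rng.gauss(0, 0.1)


def train(episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: estimate each strategy's quality from scores alone."""
    rng = random.Random(seed)
    estimates = {s: 0.0 for s in STRATEGIES}
    counts = {s: 0 for s in STRATEGIES}
    for _ in range(episodes):
        if rng.random() < epsilon:
            choice = rng.choice(STRATEGIES)  # explore a random strategy
        else:
            choice = max(estimates, key=estimates.get)  # exploit the best so far
        score = rate_output(choice, rng)
        counts[choice] += 1
        # Incremental running mean of the scores seen for this strategy.
        estimates[choice] += (score - estimates[choice]) / counts[choice]
    return estimates


if __name__ == "__main__":
    learned = train()
    print(max(learned, key=learned.get))
```

With these invented numbers, the learner should settle on the highest-scoring strategy even though nobody told it why that strategy works. That is the trade-off in miniature: better outputs, but little insight into the path taken to produce them.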

I don’t know if ChatGPT agent is better than Manus yet, but I suspect that it is likely to improve far faster than its competitor. Improving Manus will involve more careful crafting and bespoke work; improving ChatGPT agent simply requires more computer chips and more examples. If the Bitter Lesson holds, the long-term outcome seems pretty clear. But more critically, the comparison between hand-crafted and outcome-trained agents points to a fundamental question about how organizations should approach AI adoption.

Agents in the Garbage Can

This returns us to the world of organizations. While individuals rapidly adopt AI, companies still struggle with the Garbage Can problem, spending months mapping their chaotic processes before deploying any AI system. But what if that's backwards?

The Bitter Lesson suggests we might soon ignore how companies produce outputs and focus only on the outputs themselves. Define what a good sales report or customer interaction looks like, then train AI to produce it. The AI will find its own paths through the organizational chaos, paths that might be more efficient, if more opaque, than the semi-official routes humans evolved. In a world where the Bitter Lesson holds, the despair of the CEO with his head on the table is misplaced. Instead of untangling every broken process, he just needs to define success and let AI navigate the mess. In fact, the Bitter Lesson might actually be sweet: all those undocumented workflows and informal networks that pervade organizations might not matter. What matters is knowing good output when you see it.

If this is true, the Garbage Can remains, but we no longer need to sort through it, and competitive advantage itself gets redefined. The effort companies spent refining processes, building institutional knowledge, and creating competitive moats through operational excellence might matter less than they think. If AI agents can train on outputs alone, any organization that can define quality and provide enough examples might achieve similar results, whether it understands its own processes or not.

Or it might be that the Garbage Can wins, that human complexity and those messy, evolved processes are too intricate for AI to navigate without understanding them. We're about to find out which kind of problem organizations really are: chess games that yield to computational scale, or something fundamentally messier. The companies betting on either answer are already making their moves, and we will soon get to learn what game we're actually playing.

As an organizational development consultant mainly for nonprofits, I can’t help but wonder if some of the seemingly "nonsensical" processes in organizations are actually quite meaningful. What looks inefficient on the surface often reflects social cohesion, informal support, and the kind of relational intelligence that holds teams together – especially in mission-driven or nonprofit settings. For example, a meeting might not directly produce anything, but it can strengthen team spirit and support emotional well-being.

Not all outputs in organisations are as clearly defined as “winning a chess game.” Goals like trust, participation, or social justice are hard to measure, often conflicting – but still essential. That’s what makes AI adoption so tricky: it needs to deal not just with outcomes, but with ambiguity, values, and context.

I'm fascinated by how AI navigates messy systems and invents new workflows to reach a goal. But I also believe we need human judgment, emotional intelligence, and trust in the wisdom of complex social dynamics. Otherwise, we risk only achieving the parts of our mission that are easy to describe – and losing sight of the rest.

Recent examples, like Anthropic’s experiments in which agents resorted to blackmail, show how hard it is for AI to handle conflicting goals. But that’s exactly the daily reality of organizations. That’s why we need to think of AI not only as a technical tool, but as something that must learn to operate within relationships, tensions, and shared responsibility.

Great piece. This all reminded me of "Chesterton's fence", defined by Wikipedia as "the principle that reforms should not be made until the reasoning behind the existing state of affairs is understood."

And ibid.: "The more modern type of reformer goes gaily up to it and says, 'I don't see the use of this; let us clear it away.' To which the more intelligent type of reformer will do well to answer: 'If you don't see the use of it, I certainly won't let you clear it away. Go away and think. Then, when you can come back and tell me that you do see the use of it, I may allow you to destroy it.'"

To me, that's the main value in any kind of outsider (whether a consultant or a future advanced AI) making an effort to understand current processes, as opposed to simply taking the known inputs and the expected outputs and then building from first principles. There's often a good reason why the current process exists, and often it's not simply an accident of history.

The same is true when learning from biological processes and evolution.