As we begin the second year of our AI Moment (it is still too early and dramatic to call it the AI Age), it is time to consider the future.

To be clear, nobody can tell you the future of AI accurately, except that AI development seems to be happening much, much faster than even experts expected. We can be confident about that because a new paper just came out surveying almost three thousand published AI researchers, following up on a similar paper published a year earlier. The average estimated date for when AI could beat humans at every possible task shifted dramatically, moving from 2060 to 2047—a decrease of 13 years—in just the past year alone! (And the collective estimate was that there was a 10% chance that it would happen by 2027).

With so much changing so quickly, we need to take predictions with a grain of salt, but that doesn’t mean we can’t say anything useful about the coming year in AI. To ground ourselves, we can start with two quotes that should inform any estimates about the future. The first is Amara’s Law: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” Social change is slower than technological change. We should not expect to see immediate global effects of AI in a major way, no matter how fast its adoption (and it is remarkably fast), yet we certainly will see it sooner than many people think.

While Amara’s Law speaks to aggregate change, real change often originates in smaller communities and pockets, among user innovators and those with extreme needs, or in the research being done in labs and universities. If we want to understand what AI will do, we need to look for these early effects. This brings us to our second quote, by William Gibson, who famously wrote “The future is already here - it is just unevenly distributed” - a point backed up by decades of research on user innovation.

So rather than predict the future by speculating, it is worth looking at the places where it is already occurring. Here is a rapid tour of the signs and portents that signal the future of AI.

AI is Already Impacting Work

There are now enough careful studies of the use of AI in real work to draw three conclusions about how GPT-4 level AIs impact work performance:

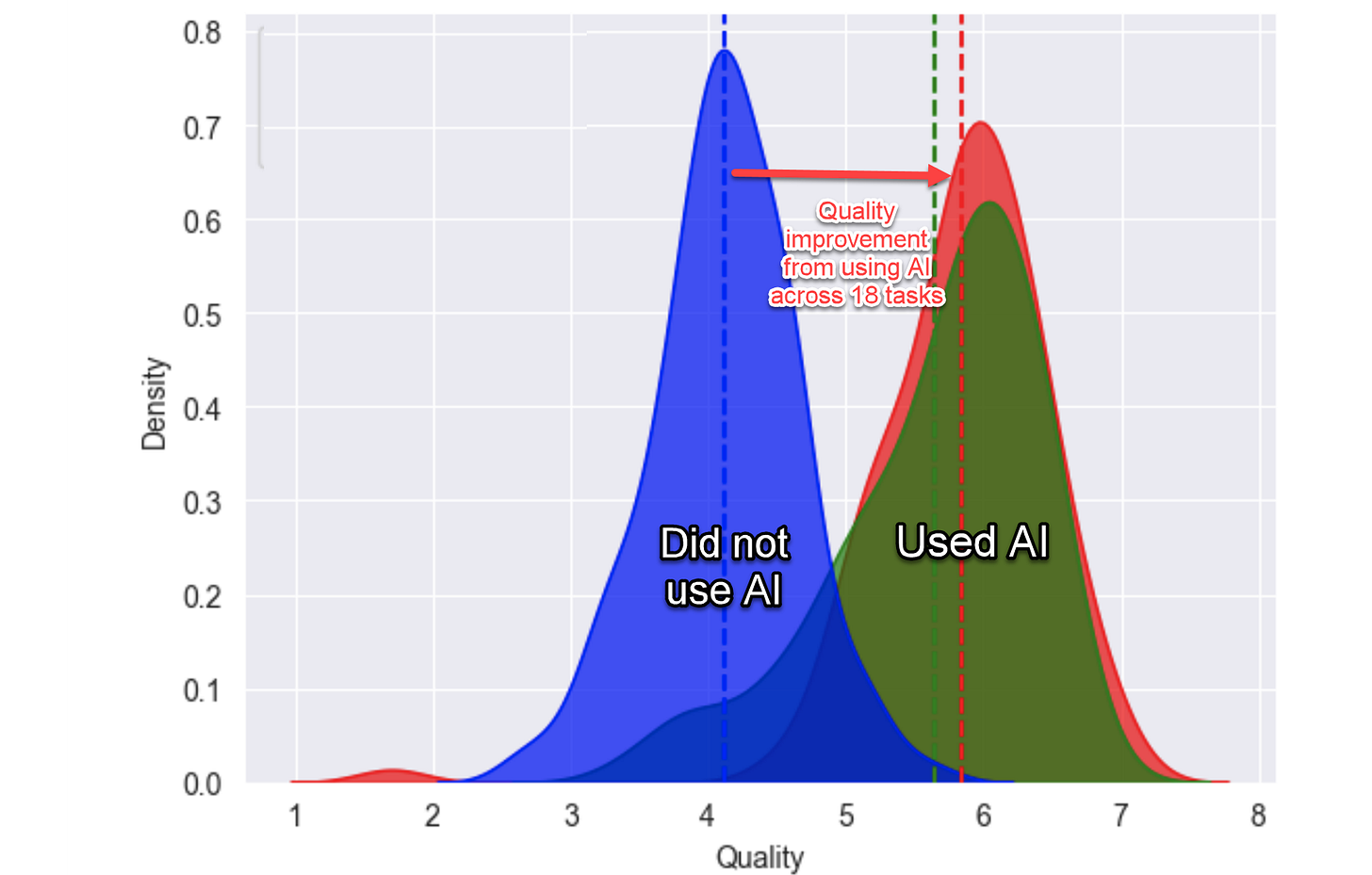

1) AI boosts overall performance at complex work tasks. In the large-scale controlled trial that my colleagues and I conducted at Boston Consulting Group, we found that consultants using the same version of GPT-4 everyone in the world has access to completed 12.2% more tasks on average, finished tasks 25.1% more quickly, and produced 40% higher quality results than those without the tool.

A new paper looking at legal work done by law students found the same results. As have studies on writing, programming, and innovation. All of these papers rely on chatbots, but the impact seems to persist even when AI is integrated into other software. Microsoft found large-scale performance improvements in meetings and emails from their GPT-4 powered Copilot AI, which is built into Office applications (though it is their product, so take it with a grain of salt). Overall, these are very consistent results across many studies. A 20%-80% improvement across wide ranges of tasks, without training or integration work, is unheard of. And it suggests that AI is going to play a big role in work.

2) The effects are largest for lower performers (for now). Another universal result (which you can see in the graphs from the legal paper above) is that AI acts as a leveler, helping low performers more than high performers. This may be temporary. Current AIs are good enough that they essentially do solid work on their own, raising the level of lower performers who use them. In the long run, it may be that AI ends up helping high performers more, or follows some other pattern entirely. We will learn a lot more about this in the coming year.

3) The Jagged Frontier: AI is better at some tasks than others. Multiple studies have identified what we refer to as the 'Jagged Frontier' - because AI is excellent at some tasks that seem hard to humans, and bad at some tasks that seem easy, it is hard to know what it is good at in advance. So, the only way to understand what AI can do is to use it and see what happens. Only with experience can you understand the shape of the frontier and learn to avoid relying on AI in cases where it does not operate well. Though the frontier of what AIs can do is constantly expanding.

Despite these early findings, and as you might expect from Amara’s Law, many companies have been slow to deploy AI. Actually, it is slightly worse than that: most companies have either ignored AI (though their employees are using it all the time) or decided to treat it as some sort of standard knowledge management tool, a task that LLMs are not actually that good at. This failure of imagination will likely continue, and continue to hurt companies, but more leaders are going to wake up to the nature of AI as transformational in the coming year.

I know this because anxiety in the C-suite is unevenly distributed, and there are already exceptions to the general AI complacency. For example, Eric Vaughan, the CEO of software company IgniteTech, saw the implications of AI quickly and made its use mandatory throughout the company this summer. He gave all employees access to a ChatGPT Plus subscription and some training, with the expectation that everyone in the company would engage in experimentation. Eric was extremely serious about the need to transform that company - he fired everyone who did not at least try to use AI by the end of the month, and gave cash prizes to people who came up with good prompts to improve their work - a much more dramatic embrace of AI than I have seen elsewhere. But other companies are considering transformation as well. Another organization I spoke to altered their hiring policy. Before a new hire is approved, the team has to spend a couple of hours trying to automate the potential job using AI. They can only post new openings after they see how much AI can supplement or replace the need for a new employee.

The experiences of these early adopters suggest that productivity improvements could significantly alter the nature of jobs. And, indeed, there are some troubling signs about the potential job market impact of AI on freelance workers. A new study of a major freelancing platform found that postings for jobs that could be done with AI declined 21% after ChatGPT was introduced, and that graphic design jobs dropped 17% after image-creating AIs were released. We are likely to see similar effects ripple through the economy. Though a survey of economists still sees net job growth as a result of AI, there will certainly be many changes to individual jobs that policy makers need to consider.

Despite the potential for disruption, another common finding in studies is that people who use AI for work are happier with their jobs, because they outsource the boring work to the AI. Leaders and managers of organizations need to think hard about how to capitalize on the positive aspects of AI-driven change while avoiding the negative. A successful 2024 for AI’s role in work will be one in which considerable effort goes to thinking about how to transform organizations, taking advantage of the inherent power (and weirdness) of AI in order to help both workers and companies flourish.

AI is Already Altering the Truth

Way back in February, I wrote about how easy it was to create a deepfake of myself. In the last year, the technology has come so much further. To see what I mean, please watch this short video. It is less than a minute and illustrates the point much better than I can with words. It is the result of me recording a 30 second clip of video, and providing 30 seconds of my voice, to Heygen, one of many video AI startups. After a couple of minutes, I had an avatar that I could make say anything, in any language. It used some of my motions (like adjusting the microphone) from the source video, but created a clone of my voice and altered my mouth movements, blinking and everything else. And this took almost no time. You really can’t trust what you see or hear anymore.

In addition to voice and video, AI images have become incredibly convincing. Here is the result of a few minutes of my using Midjourney 6 (just released as well) to produce cellphone images of the Hello Kitty invasion of America. I picked an obviously fake topic, but realistic images of almost anyone and any scene are easy to make. They are already circulating, and you will certainly be seeing a lot more of this, especially with upcoming election years in many countries.

Almost every area of information security is going to be altered in the coming year. For example, AIs can now complete CAPTCHAs and send convincing emails to get people to click on malicious links.

These changes are now inevitable. Open source models, which are free to use and modify, can already fake voices, photos, emails and more - and they can run on a home computer. Even if we shut down AI development, the information landscape post-2023 will never be the same as it was before. I don’t think most people are ready for what that means for privacy, security (come up with a secret family password to prove your identity now!) and global politics.

AI is Already Effective at Helping Learning

If you have been reading this Substack, you know I am especially interested in the use of AI in education. I have written about our papers and prompts for teaching with AI, as well as classroom experiments. (I hope to have a really in-depth dive into this topic in my next post, including lots of new examples of prompts and approaches.) Many other instructors are also experimenting with AI and sharing what they are learning. But we haven’t had good data from experiments using AI in education until very recently.

Now we are starting to see some early results, and they suggest that the potential for AIs for teaching and mentoring is quite high. A new large scale, pre-registered controlled experiment using GPT-4 in tutoring found that practicing with the help of GPT-4 (especially when the AI was given a simple prompt to provide good explanations) significantly improved performance on SAT math problems. To be clear, GPT-4 is not yet a universal tutor, and the experiment was more about guided practice problems with AI help than a deep educational experience. However, given that billions of people around the world have free GPT-4 access through Bing (and will be able to get a similar quality AI through Google, whenever they release Gemini Ultra), this is a potentially very important finding.

Moving beyond the classroom, we also have our first findings on the real-world impact of AI mentoring. A fascinating new paper reports on a six-month long experiment using GPT-4 to provide advice to small business entrepreneurs in Kenya. They found that getting AI mentoring boosted the performance of the best entrepreneurs by 20% - a very large effect for an educational intervention. Interestingly, low performers actually did worse after getting AI mentoring help because they tended to ask questions that the AI was not good at answering or got advice they could not take (many of the low performers had businesses that were already in trouble). To me, this suggests that AI instruction can have real-world impacts, but we need to carefully design these tools to work for students of all ability levels.

Of course, there are also massive risks associated with AI in classrooms. The AI can basically do everyone’s homework (and remember, AI writing is undetectable). A lack of clear information means that there is confusion over how AI works and how to use it. And the biases and issues that AI brings to the classroom are still poorly understood. We are in the early days of exploring AI and education, but, given its widespread accessibility and potential for improving educational outcomes, this seems like an area where it is imperative that we actively experiment to find positive use cases. I expect to see a lot of progress this year.

Current Tech is Good Enough for Transformation… But More Tech is Coming.

One clear theme of recent academic work on practical uses of LLMs is that there is a lot of potential left in GPT-4. It is (still) the best AI available, despite being a system that is already a year old. Consider this paper showing a well-prompted GPT-4 beats the best specialized medical AI (and most doctors), or this paper finding that GPT-4 can help automate novel scientific research, or this one suggesting that GPT-4 can navigate web pages visually with the right tools. Even if we never exceeded GPT-4 level performance (very unlikely) we know that good prompting, connecting the LLM to other tools, and other simple approaches greatly expand the AI's capabilities. I believe that we have 5-10 years of just figuring out what GPT-4 (and the soon-to-be-released Gemini Ultra) can do, even if AI development stopped today. There are so many real-world tasks that are at least somewhat tractable by the current set of GPT-4 class LLMs with the right processes and tools.

But, of course, technology is not going to stand still. Open source models are coming out every week that exceed ChatGPT-3.5 levels of performance and can run for free on a gaming computer. New models that will beat GPT-4 are in development and likely to be released this year. AI is going to be integrated into your Google and Microsoft applications, with uncertain implications. And the specter of possible AGI — that potential machine smarter than a human — haunts us.

Amid this broad acceleration, I think people who are worried about AI are often paying too much attention to scattered signals that AI might be hitting limits. The New York Times, for example, recently sued OpenAI over how it trained on Times data, including how it can, under some circumstances, reproduce copyrighted articles (and fake other articles the Times did not write). I am no legal expert, and cannot speak to the merits of the case, but I think it is extremely unlikely that any legal finding will do much to put the AI genie back in the bottle. There are already companies, like Adobe, that only train AI on data they have unambiguous legal rights to, so there are paths forward for large companies even if the Times suit is successful. Additionally, open source models, already released into the wild and being developed all over the world, cannot be stopped. And different legal rules in different countries (Japan appears to view copyright as not applying to training data) suggest that AI, as a global phenomenon, will continue.

Most likely, AI development is actually going to accelerate for a while yet before it eventually slows down due to technical or economic or legal limits. While how far AI comes this year is not yet clear, I do know that this may be the critical time to assert our agency over AI’s future. Managers, educators, and policy makers need to recognize that we are living in an AI-haunted world, and we need to both adjust to it, and shape it, in ways that increase its benefits and mitigate its harms.

We need to start now, because we are facing exponential change, and that means that even the signs and portents I have discussed in this post are quickly becoming prophecies of the past, rather than indicators of the future.

Speaking of the future, I have a book coming out on April 2: “Co-Intelligence: Living and Working with AI.” If you like this Substack, you may like it as well. More on the book later, but it is available for pre-order here.
