An Opinionated Guide to Which AI to Use: ChatGPT Anniversary Edition

A simple answer, and then a less simple one.

Update (March 8, 2024): There are now 3 GPT-4 class models to pick from: GPT-4, Google’s Gemini Advanced, and Claude 3. The arguments for using GPT-4 apply to them as well.

The world of generative AI seems very confusing, with tons of Large Language Models released in just the past few months, including a major new announcement from Google. So, given all of this, a lot of people ask me which AI they should actually try. I want to give you an answer from the perspective of an individual user who wants to experiment with AI, either to experience what AI is like, or because they want to put it to a specific use - teaching, boosting creativity, improving work performance, or just for fun.

The advice that I have for most people who want to use AI is incredibly simple. Of course, once I tell you the simple answer I will complicate it a bit, and then complicate it a bit more, but let’s start with the easy stuff: get GPT-4. And stop using free ChatGPT.

The Easy Answer

If you are at all interested in generative AI and understanding what it can do and why it matters, you should just get access to OpenAI’s GPT-4 in as unadulterated and direct a way as possible. Everything else comes after that.

GPT-4 is at least a year old. But, in that year, despite billions of dollars of investment, no company has managed to match the general capabilities of this model, for reasons that are not completely clear. Google just announced that their new Gemini Ultra model beats GPT-4 (by relatively small margins), but it isn’t available yet, so I have not tried it.

Now, to be clear, this is not the free ChatGPT, which uses GPT-3.5. In the last year, many companies have caught up with GPT-3.5: Google’s new Bard, which has actually been released and is powered by a system called Gemini Pro, is around GPT-3.5 level. Elon Musk’s Grok is around GPT-3.5 level. Anthropic’s Claude 2.1 is a bit better than GPT-3.5, as is Inflection’s model, which powers the Pi chatbot. A number of open source solutions are close to that level as well. But none of them, as of right now, beat GPT-4. Why is that important? Because at the GPT-4 level, AIs start becoming truly extraordinary.

There are lots of ways of trying to describe the big gap between GPT-3.5-class AIs and GPT-4. As you can see below, GPT-4 is much better at test scores, but tests are a hard way to judge AI. So, consider that, at the level of GPT-4, AI has real-world impact: we found that GPT-4 boosts real work performance, and other researchers have shown that it generates better ideas than most humans. GPT-4 also just feels smarter; you are more likely to get the weird illusion of sentience from it than from any other model. In many ways, that general vibe is actually the most important difference: using GPT-4, you can get a sense of the future of AIs.

It also helps that GPT-4 is the most full-featured model. It has access to web browsing (so it is no longer stuck with just the knowledge it had at training), it is multimodal (which means it can “see” and listen to speech), it can create images, it can code and do data analysis, and it can talk back to you (at least in the phone app). There are lots of other nice features, like the option for a privacy mode that doesn’t share your data. It also has enough memory (its “context window”) that it can do pretty complex tasks:

“Look up the reasons why someone should choose GPT-4 compared to other LLMs. Take the information and summarize it in a poem, the first stanza in English and the second in Latin. Then make an inspirational poster about GPT-4. Then do something with code to demonstrate your capabilities.”

In the results, you can see how it browses the web (including citing sources), writes a poem in a couple of languages, makes a poster, and then writes and executes code, all in a prompt or two.

When I speak in front of groups and ask them to raise their hands if they have used the free version of ChatGPT, almost every hand goes up. When I ask the same group how many use GPT-4, almost no one raises their hand. I increasingly think the decision of OpenAI to make the “bad” AI free is causing people to miss why AI seems like such a huge deal to the minority of people who use advanced systems, while it elicits a shrug from everyone else.

Use GPT-4.

The more complicated answer: how do I get GPT-4?

The ideal way to experiment with GPT-4 was pretty clear until a couple of weeks ago. You could pay $20 a month and buy access to ChatGPT Plus through OpenAI. That gave you access to the latest version of ChatGPT running GPT-4, including all of the features I discussed above. Once you had it, I suggested trying to use it for everything, from work tasks (ask it to help you innovate, summarize documents, write emails, give advice) to fun (use it instead of Google, write fun stories, play games). Using chatbots effectively is always a little weird, and there is no instruction manual, but I found that after around 10 hours of using ChatGPT, people get it. Your goal is to hit those 10 hours, and ChatGPT Plus was the easiest way to do it.

Three things have happened in the past couple of weeks: ChatGPT Plus subscriptions are temporarily not being sold, ChatGPT seems to be suffering from performance issues and frequent outages, and a bunch of new ways of using the system have become available. I would still recommend getting Plus, but right now you can’t.

Fortunately, there is a whole universe of ways of getting access to GPT-4, but they are all a bit weird. The best way right now is free and available in 169 countries around the world, and that is Microsoft Bing. Yes, the search engine from Microsoft.

Bing has a Chat mode that gives you direct access to GPT-4, but only if you pick the right option: you have to select Precise or Creative Mode. Balanced Mode, which is both the default and, as the CEO of Bing revealed, by far the most popular mode, does not use GPT-4. The other two do. Precise Mode produces less interesting but more accurate answers, which makes it good for search. If you are doing anything other than web search, just use Creative Mode (the purple one).

Bing has most of the features of ChatGPT Plus (the final missing one, Code Interpreter, is coming soon), but it is also a little weird. In an effort to make it act like a search engine, Microsoft has given the standard GPT-4 some extra commands, limitations, and abilities. Sometimes that is good (it is quite solid at search), but it can make the AI experience a little more erratic and require some playing around to make it work well. Overall, though, this is the best option for most people. And, again, it is free, meaning billions of people around the world have access to the best AI on the planet, something I have written about before.

If you don’t want to use Bing, or you have specialized needs, there are a couple more ways to get GPT-4. If you want an AI optimized for search, a service called Perplexity offers GPT-4 access for $20/month. I have only used the service a bit, though I found it pretty impressive for information gathering, and I have heard from many people who like it. Another option is Poe, a service that offers access to multiple different AIs, including GPT-4, for, you guessed it, $20/month.

For most people, using Bing is the right choice, at least until ChatGPT Plus reopens.

The most complicated answer: what about everything else?

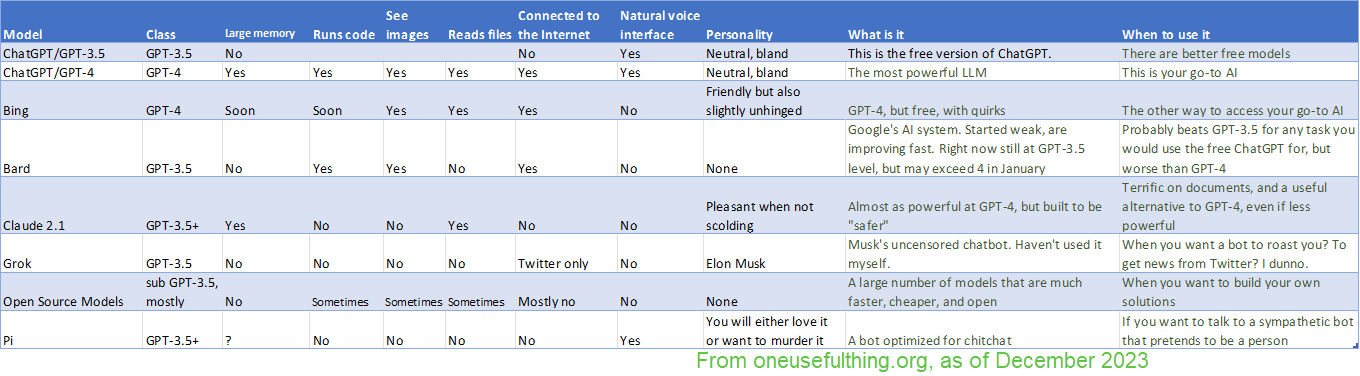

Of course, there are many other LLMs. I put together an opinionated cheat sheet about them below.

Despite all these options, the reason I suggest GPT-4 is that mostly, as an individual user, you want to use the “smartest” model you can get your hands on. The smartest models are also the largest models, thanks to a scaling law that currently suggests that the bigger your model is, the “smarter” it gets. And the “smarter” the model, the more it outperforms smaller, more specialized models - GPT-4 beats specialized medical models (and doctors) in medical tasks, even though GPT-4 was not built for medicine! These giant models are expensive to build and operate, as well as relatively slow in producing output. They are also proprietary, owned by a couple of companies that can afford to build and operate them.

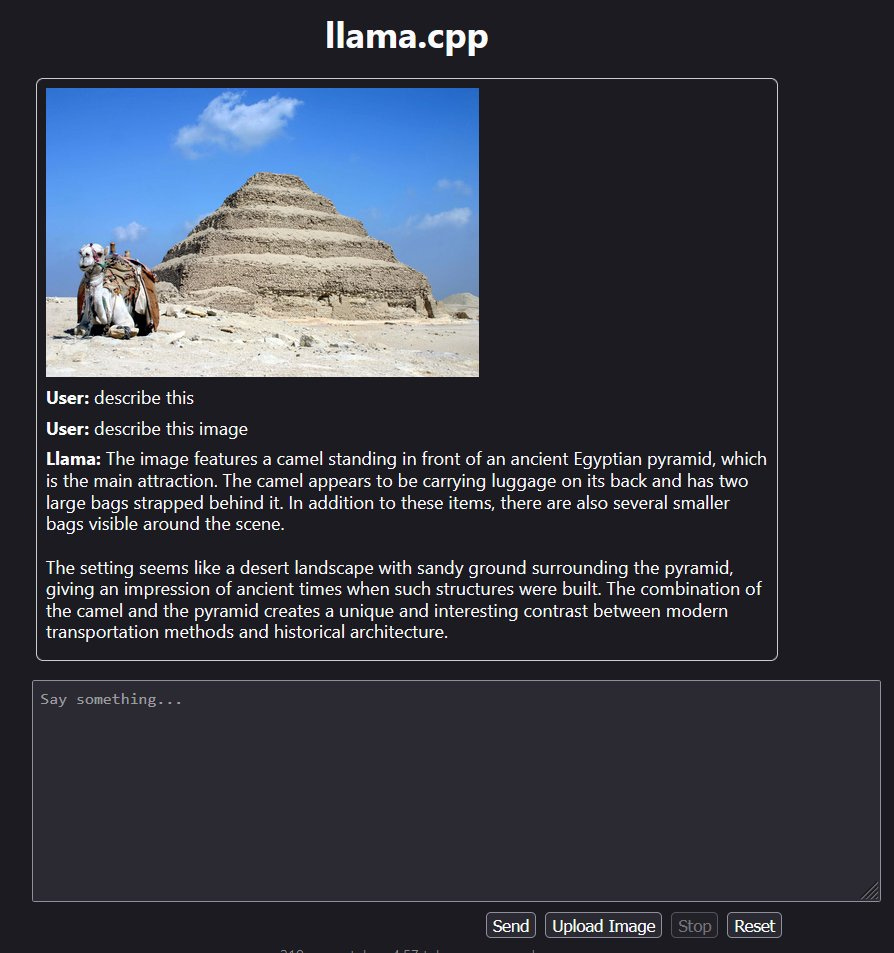

As an alternative, there are open source LLMs, which are free for anyone to use and are generally fast and cheap. They are also not as “smart” as the commercial models, though there is lots of debate over when, and if, they can catch up. If you are considering building your own AI solution, these are worth trying. It is also somewhat amazing that anyone can download an LLM with one click and run a pretty solid AI with vision on their home computer, for free, forever, with no internet connection. I did this myself using llama.cpp, and you can, too.

Aside from open source, you may want to use other models in certain circumstances. Anthropic’s Claude 2.1 is especially interesting and offers some free access. Claude is most notable for having a very large context window, essentially the memory of the LLM. Claude can hold an entire book, or many PDFs, in memory. It has been built to be less likely to act maliciously than other Large Language Models, but otherwise works a lot like GPT-4, although it is slightly less “smart.” That makes it a good GPT-4 alternative to experiment with, and to use for a second opinion.

If you want to try a “social” AI, you should experiment with Pi, another free chatbot built by Inflection. Pi is optimized for conversation, and really, really wants to be your friend (seriously, try it to see what I mean). Be ready for emojis, but it is absolutely worth using at least once to experience what an AI optimized for chat is like, especially in voice mode.

I don’t have much to say about Elon Musk’s Grok. I haven’t used it, but it is currently a GPT-3.5-class model that is mostly notable for its live access to Twitter and for being, ostensibly, less “censored” than other AIs. It is only available to Twitter users who pay extra.

Finally, let’s talk about Google. Google has been testing its own AI for consumer use, which it calls Bard, but which has been powered by a variety of AI models. Until recently that was an AI called PaLM 2, and it was bad. As of today, it has been replaced with a model called Gemini Pro, which I have been using. It is much more capable, but still only around the level of the free ChatGPT (GPT-3.5). While the search interface and features of Bard are very exciting and slick, I would generally be careful with this model, since it does not perform as well as the GPT-4-powered Bing. Supposedly, in January, Google will release the first model to beat GPT-4, called Gemini Ultra. I will certainly write about that when it happens, but the current version of Gemini is perfectly fine, if not state-of-the-art.

A temporary state of affairs

Next time I write this guide, things are going to be different, because chatbots will not be the only way to access advanced AI. AI is creeping into applications (I already have early access to Microsoft Copilot, which brings AI to your Office apps; more on that soon) and applications are creeping into AI (Google’s Bard already connects to your email and other applications in interesting ways). AI will keep getting better, but there may be many ways to “speak” to it.

It is also entirely possible that, in the coming months, an AI will beat the level set by GPT-4 and Gemini Ultra. Every major AI company in the chart above is training new models, and, of course, so is OpenAI. We don’t know what a major advance past GPT-4 looks like, but I expect we may find out in 2024.

So, keep in mind that the AIs in this guide are the worst you will ever use, and start experimenting now so you can get a sense of the future when it arrives, very soon.

This post is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

It’s interesting to me that, with all of Google’s deep pockets and deep bench, the only things we got at yesterday’s announcement were a usable GPT-3.5-level LLM and vaporware that claims to be at or slightly above GPT-4 level. I disagree with Ethan a little about Bing. I forgot to renew my ChatGPT Plus account and was stuck with Bing for a few days before I could get back to OpenAI, and it was horrible. It’s shocking, really, that Microsoft could take the full code and weights of GPT-4 and mess it up as much as they did. I suppose they did it to lighten the model and make it less expensive to run on their side. But still, there’s something about giant companies that, for now, makes them less than the sum of their parts in this new, exploding field.

There are rumors that LLMs are reaching a plateau (no less than Bill Gates recently said so), so maybe that explains why Gemini Ultra is at GPT-4 level effectively a year after GPT-4 was released. But I think even if the raw power is at some sort of plateau, there are plenty of little “tricks” left to keep progress going for years, just as we saw over the past 15 years as Moore’s Law collapsed but progress continued through multiple cores, the cloud, etc. We can see the integration of video from the ground up into training sets, and we can see multiple LLMs handing off to each other, somewhat reminiscent of what has happened with multiple processors on single devices and the cloud. We can see bigger and persistent context windows acting as memory (as has already happened with customizable GPTs at OpenAI). OpenAI has already gotten way better at integrating search and math tools with the same base model. And there are rumors they made a breakthrough in native math (and hence reasoning and planning) ability with an internal model called Q*.

This is all super interesting to me, and I’m an accelerationist, but I have a feeling that 5-10 years from now, when the future I’m thinking of arrives, I will find myself deeply sad that many of the things I prided myself on as a person will have been massively devalued and commodified.

A good summary! I’d also add Phind to the list: it has beaten GPT-4 on all “programming” benchmarks, is much faster, and is available for free. I use it often for debugging.

Coincidentally, I wrote a short blog on ChatGPT alternatives and like your list, Bard doesn’t make the cut.

https://www.harsh17.in/four-ai-chatbots-other-than-chatgpt/