Using AI Right Now: A Quick Guide

Which AIs to use, and how to use them

Every few months I put together a guide on which AI system to use. Since I last wrote my guide, however, there has been a subtle but important shift in how the major AI products work. Increasingly, it isn't about the best model; it is about the best overall system for most people. The good news is that picking an AI is easier than ever, and you have three excellent choices. The challenge is that these systems are getting really complex to understand. I am going to try to help a bit with both.

First, the easy stuff.

Which AI to Use

For most people who want to use AI seriously, you should pick one of three systems: Claude from Anthropic, Google’s Gemini, or OpenAI’s ChatGPT. With all of these options, you get access to both advanced and fast models, a voice mode, the ability to see images and documents, the ability to execute code, good mobile apps, the ability to create images and video (though Claude lacks this), and the ability to do Deep Research. Some of these features are free, but you are generally going to need to pay $20/month to get access to the full set of features you need. I will try to give you some reasons to pick one model or another as we go along, but you can’t go wrong with any of them.

What about everyone else? I am not going to cover specialized AI tools (some people love Perplexity for search, Manus is a great agent, etc.), but there are a few other options for general-purpose AI systems. Grok by Elon Musk’s xAI is good if you are a big X user, though the company has not been very transparent about how its AI operates. Microsoft’s Copilot offers many of the features of ChatGPT and is accessible to users through Windows, but it can be hard to control which models you are using and when. DeepSeek r1, a Chinese model, is very capable and free to use, but it is missing a few features from the other companies, and it is not clear that DeepSeek will keep up in the long term. So, for most people, just stick with Gemini, Claude, or ChatGPT.

Great! This was the shortest recommendation post yet! Except… picking a system is just the beginning. The real challenge is understanding how to use these increasingly complex tools effectively.

Now what?

I spend a lot of time with people trying to use AI to get stuff done, and that has taught me how incredibly confusing this is. So I wanted to walk everyone through the most important features and choices, as well as some advice on how to actually use AI.

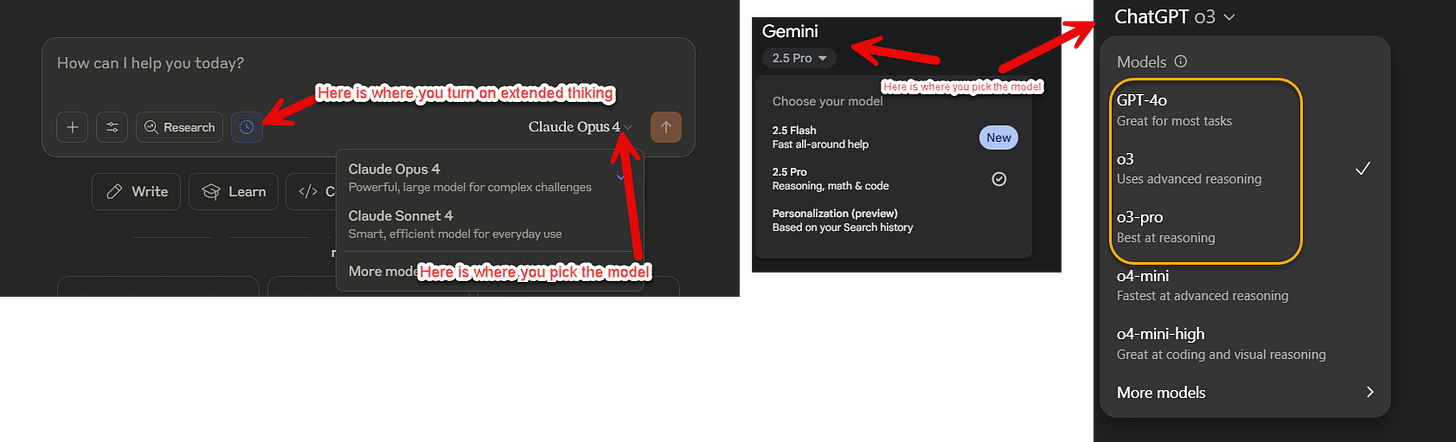

Picking a Model

ChatGPT, Claude, and Gemini each offer multiple AI models through their interface, and picking the right one is crucial. Think of it like choosing between a sports car and a pickup truck; both are vehicles, but you'd use them for very different tasks. Each system offers up to three tiers: a fast model for casual chat (Claude Sonnet, GPT-4o, Gemini Flash), a powerful model for serious work (Claude Opus, o3, Gemini Pro), and sometimes an ultra-powerful model for the hardest problems (o3-pro, which can take 20+ minutes to think). The casual models are fine for brainstorming or quick questions. But for anything high-stakes (analysis, writing, research, coding), you should usually switch to the powerful model.

Most systems default to the fast model to save computing power, so you need to switch manually using the model selector dropdown. (Except for Gemini, the free versions of these systems do not give you access to the most powerful model, so if you do not see the options I describe, it is because you are using the free version.)

I use o3, Claude 4 Opus, and Gemini 2.5 Pro for any serious work that I do. I also have favorites outside these models for particular tasks (GPT-4.5 is a really interesting model for writing, for example), but for most people, stick with the models I suggested most of the time.

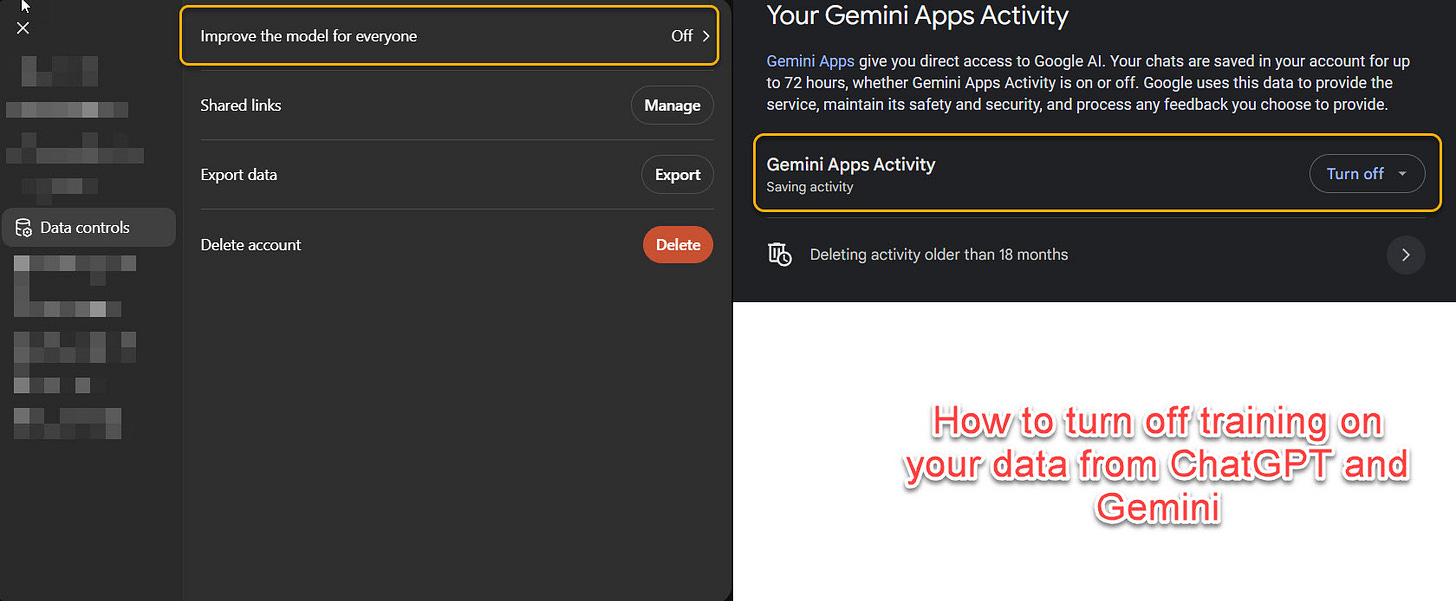

For people concerned about privacy, Claude does not train future AI models on your data, but Gemini and ChatGPT might if you are not using a corporate or educational version of the system. If you want to make sure your data is never used to train an AI model, you can easily turn off training for ChatGPT without losing any functionality, and for Gemini at the cost of some functionality. You may also want to turn “memory” on or off in ChatGPT’s personalization settings; it lets the AI remember scattered details about you. I find the memory system to be too erratic at this point, but you may have a different experience.

Using Deep Research

Deep Research is a key AI feature for most people, even if they don’t know it yet. Deep Research tools can produce high-quality reports that often impress the information professionals I speak to (lawyers, accountants, consultants, market researchers). You should try out Deep Research reports in your own area of expertise to see what they can do for you, but some other use cases include:

Gift Guides: “What do I buy for a picky 11-year-old who has read all of Harry Potter, is interested in science museums, and loves chess? Give me options, including where to buy at the best prices.”

Travel Guides: “I am going to Wisconsin on vacation and want to visit unique sites, especially ones focusing on cheese. Produce a guide for me.”

Second opinions in law, medicine, and other fields (it should go without saying that you should trust your doctor/lawyer above AI, but research keeps finding that the more advanced AI systems do very well in diagnosis with a surprisingly low hallucination rate, so they can be useful for second opinions).

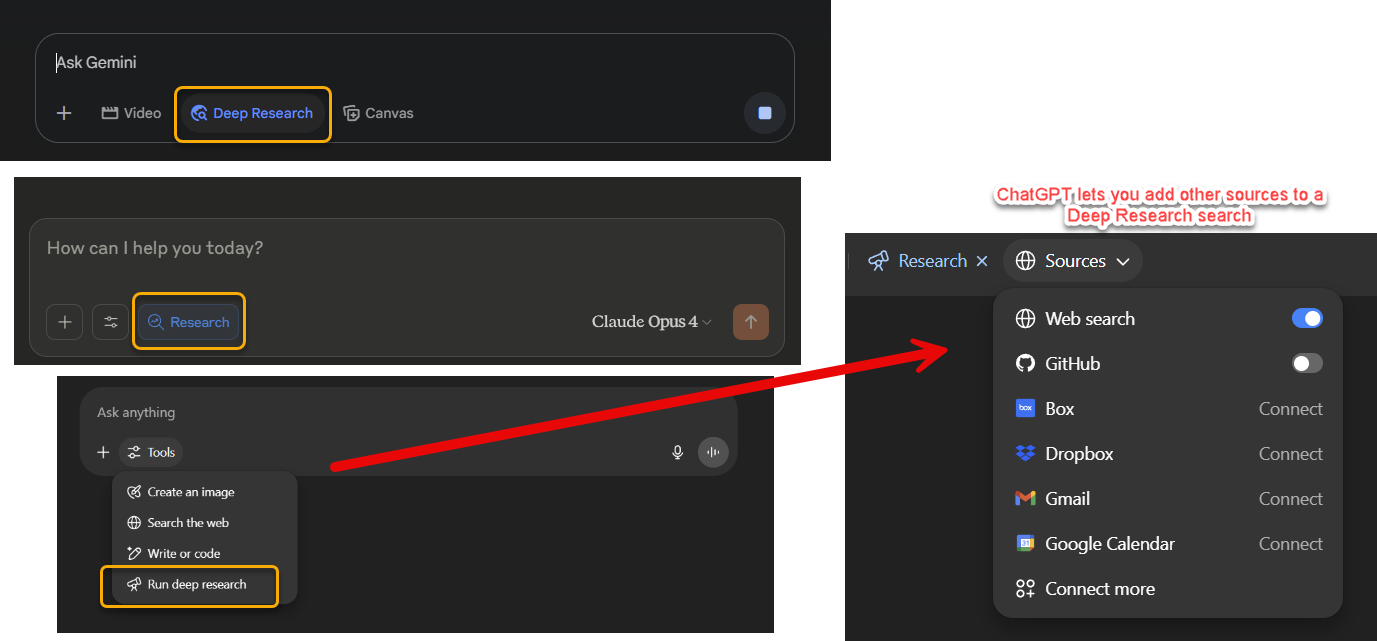

Deep Research reports are not error-free, but they are far more accurate than just asking the AI for something, and the citations tend to actually be correct. Also note that each of the Deep Research tools works a little differently, with different strengths and weaknesses. Turning on the web search option in Claude and o3 will get them to work as mini Deep Research tools, doing some web research but not producing as elaborate a report. Google has some fun additional options once you have created a report, letting you turn it into an infographic, a quiz, or a podcast.

An Easy Approach to AI: Voice Mode

An easy way to start using AI is with voice mode. The two best implementations of voice mode are in the Gemini app and in ChatGPT’s app and website; Claude’s voice mode is weaker than either. What makes voice mode great is that you can just have a natural conversation with the app while in the car or on a walk and get quite far in understanding what these models can do. Note that the voice models are optimized for chat (including all of the small pauses and intakes of breath designed to make it feel like you are talking to a person), so you don’t get access to the more powerful models this way. They also don’t search the web as often, which makes them more likely to hallucinate if you are asking factual questions: if you are using ChatGPT, unless you hear the clicking sound at 44 seconds into this clip, it isn’t actually searching the web.

Voice mode's killer feature isn't the natural conversation, though; it's the ability to share your screen or camera. Point your phone at a broken appliance, a math problem, a recipe you're following, or a sign in a foreign language. The AI sees what you see and responds in real time. I've used it to identify plants on hikes, solve a problem on my screen, and get cooking tips while my hands were covered in flour. This multimodal capability is genuinely futuristic, yet most people just use voice mode like Siri. You're missing the best part.

Making Things for You: Images, Video, Code, and Documents

ChatGPT and Gemini will make images for you if you ask (Claude cannot). ChatGPT offers the most controllable image creation tool. Gemini uses two different image generation tools: Imagen, a very good traditional image generation system, and a multimodal image generation system. Generally, ChatGPT is stronger. For video creation, however, Gemini’s Veo 3 is very impressive, and you get several free uses a day (though you need to hit the Video button in the interface).

All three systems can produce a wide variety of other outputs, ranging from documents to statistical analyses to interactive tools to simulations to simple games. To get Gemini or ChatGPT to do this reliably, you need to select the Canvas option when you want them to run code or produce separate outputs. Claude is good at creating these sorts of outputs on its own. Just ask; you may be surprised by what the AI systems can make.

Working with an AI

Now that you have picked a model, you can start chatting with it. It used to be that the details of your prompts mattered a lot, but the most recent AI models I suggested can often figure out what you want without complex prompts. As a result, many of the prompting tips and tricks you see online are no longer as important for most people. At the Generative AI Lab at Wharton, we have been examining prompting techniques scientifically, and our research has shown, for example, that being polite to AI doesn’t seem to make a big difference in output quality overall¹. So just approach the AI conversationally rather than worrying too much about saying exactly the right thing.

That doesn’t mean that there is no art to prompting. If you are building a prompt for other people to use, it can take real skill to build something that works repeatedly. But for most people you can get started by keeping just a few things in mind:

Give the AI context to work with. Most AI models only know basic user information and the information in the current chat; they do not remember or learn about you beyond that. So you need to provide the AI with context: documents, images, PowerPoints, or even just an introductory paragraph about yourself can help. Use the file option to upload files and images whenever you need to. The AIs can also gather some of this themselves: ChatGPT and Claude can access your files and mailbox if you let them, and Gemini can access your Gmail, so you can ask them to look up relevant context automatically, though I prefer to give the context manually.

Be really clear about what you want. Don’t say “Write me a marketing email”; instead, go with “I'm launching a B2B SaaS product for small law firms. Write a cold outreach email that addresses their specific pain points around document management. Here are the details of the product: [paste]” Or ask the AI to ask you questions to help you clarify what you want.

Give it step-by-step directions. Our research found this approach, called Chain-of-Thought prompting, no longer improves answer quality as much as it used to. But even if it doesn’t help that much, it can make it easier to figure out why the AI came up with a particular answer.

Ask for a lot of things. The AI doesn’t get tired or resentful. Ask for 50 ideas instead of 10, or 30 options to improve a sentence. Then push the AI to expand on the things you like.

Use branching to explore alternatives. Claude, ChatGPT, and Gemini all let you edit a prompt after you have gotten an answer. This creates a new “branch” of the conversation. You can move between branches by using the arrows that appear after you have edited a prompt. It is a good way to learn how your prompts affect the conversation.

Troubleshooting

I have also seen a few common areas where people get into trouble:

Hallucinations: In some ways, hallucinations are far less of a concern than they used to be, as newer AI models are better at not hallucinating. However, no matter how good the AI is, it will still make mistakes and still give you confident answers when it is wrong. AIs can also hallucinate about their own capabilities and actions. Answers are more likely to be right when they come from the bigger, slower models and when the AI has done web searches. The risk of hallucination is why I always recommend using AI for topics you understand until you have a sense of its capabilities and issues.

Not Magic: You should remember that the best AIs can perform at the level of a very smart person on some tasks, but current models cannot provide miraculous insights beyond human understanding. If the AI seems like it did something truly impossible, it is probably not actually doing that thing but pretending it did. Similarly, AI can seem incredibly insightful when asked about personal issues, but you should always take these insights with a grain of salt.

Two-Way Conversation: You want to engage the AI in a back-and-forth interaction. Don’t just ask for a response; push the AI and question it.

Checking for Errors: The AI doesn’t know “why” it did something, so asking it to explain its logic will not get you anywhere. However, if you find issues, the thinking trace of AI models can be helpful. If you click “show thinking” you can find out what the model was doing before giving you an answer. This is not always 100% accurate (you are actually getting a summary of the thinking) but is a good place to start.

Your Next Hour

So now you know where to start. Pick a system and resign yourself to paying the $20 (the free versions are demos, not tools). Then immediately test three things on real work. First, switch to the powerful model, give it a complex challenge from your actual job with full context, and have an interactive back-and-forth discussion. Ask it for a specific output like a document, program, or diagram, and ask for changes until you get a result you are happy with. Second, try Deep Research on a question where you need comprehensive information: maybe a competitive analysis, gift ideas for someone specific, or a technical deep dive. Third, experiment with voice mode while doing something else (cooking, walking, commuting) and see how it changes your ability to think through problems.

Most people use AI like Google at first: quick questions, no context, default settings. You now know better. Give it documents to analyze, ask for exhaustive options, use branching to explore alternatives, experiment with different outcomes. The difference between casual users and power users isn't prompting skill (that comes with experience); it's knowing these features exist and using them on real work.

¹ It is actually weirder than that: on hard math and science questions that we tested, being polite sometimes makes the AI perform much better, sometimes worse, in ways that are impossible to know in advance. So be polite if you want to!

Great breakdown! One thing I always mention when people say they’re wary of using AI assistants because of hallucinations: the mindset needs to shift. These aren’t just Q&A robots. They can actually be your critical thinking partners.

The real value isn’t in asking “what’s the answer?” It’s in using these models to stress-test your thinking. They can:

1. Expand your ideas

2. Validate or poke holes in them

3. Surface POVs you may have completely overlooked

Yes, they’re great for answering simple questions, but in doing so they can also hallucinate. The key is in how you engage with them.

Give o3 a thesis, for example a stock idea and your reasons for liking it, and give it a persona, like a skeptical hedge fund portfolio manager. Ask it for 10 reasons that support your case and 10 that challenge it. You’ll get new angles, risks you hadn’t considered, and potential counterarguments to prepare for. The conversation is no longer about being right or wrong. It’s about being more rigorous.

Bottom line: don’t use LLMs only as search bars. Start using them like strategic thought partners. Pick its brain so that it shares information that can sharpen your thoughts and help YOU make more informed decisions.

This is incredibly timely and useful. I get asked this all the time—and even some people who are paying for the good models (say, ChatGPT Plus) are not aware that they can switch to more powerful models, so they're missing out. A quick "please share your screen and tell me what you want to do" is often an hour very well spent for greater effectiveness in using AI.

I agree with all your points, but I have found Claude far less useful for writing than the other models. I did not see the leap in Claude 4 (Opus or Sonnet) that I expected, either in writing or in reasoning. In fact, not long ago I asked both Claude 4 and Gemini 2.5 Pro to quantify about three pages of data (quantitative and qualitative). The conclusions were so different that I gave each the answer the other had given. Claude apologized profusely and got it wrong again upon reanalysis. I also find that Gemini writes better than the rest of the models. If someone wants to pay for a model, right now I would not recommend paying for Claude.

One more thing: what I just mentioned is something I recommend to people who are willing to pay for at least two models. Make them converse! Give one model the answer the other gave you. This is generally a very fruitful exercise.