On holding back the strange AI tide

There is no way to stop the disruption. We need to channel it instead

Most people didn’t ask for an AI that can do many tasks previously reserved for humans. But it arrived, almost completely unexpectedly, eight months ago with ChatGPT, and has been accelerating ever since.

Teachers did not want to see almost every form of homework become instantly solvable by a computer. Employers did not want highly-paid tasks that are only meaningful when done by humans (performance reviews, reporting) to be done by machines instead. Government officials did not want a perfect disinformation system released without any useful countermeasures. Because these tools were released without a manual, no one really even knows what they are fully capable of. The world got much stranger, very quickly.

So, it is not surprising that so many people are trying to stop AI from being weird. Everywhere I look I see policies put in place to eliminate the disruption and weirdness that AI brings. These policies are not going to work. And, even worse, the substantial benefits of AI are going to be greatly reduced by trying to pretend it is just like previous waves of technology.

So first, let’s dispense with the idea that generative AI is the next iteration of the waves of web3/crypto/NFT/VR/Metaverse technology hype that we have all lived with for the last decade or so. Every one of these technologies was about future potential to have a major impact, and getting there would have required massive investment and good luck [1]. Large Language Models are here, now. In their current form, they show tremendous ability to impact many areas of work and life. And, even if they never get any better, even if future AIs are highly regulated (both seem highly unlikely), the AIs we have today are going to bring a lot of change.

And, for many people, that is a problem. In conversations with educational institutions and companies, I have seen leaders try desperately to ensure that AI doesn’t change anything. I believe that not only is this futile, but it also poses its own risks. So let’s talk about it.

Holding back the tide in organizations

Many organizational leaders don’t yet understand AI, but those who do see an opportunity are eager to embrace it… as long as it doesn’t make anything too weird. I see three stages to AI adoption, but all have their own flaws.

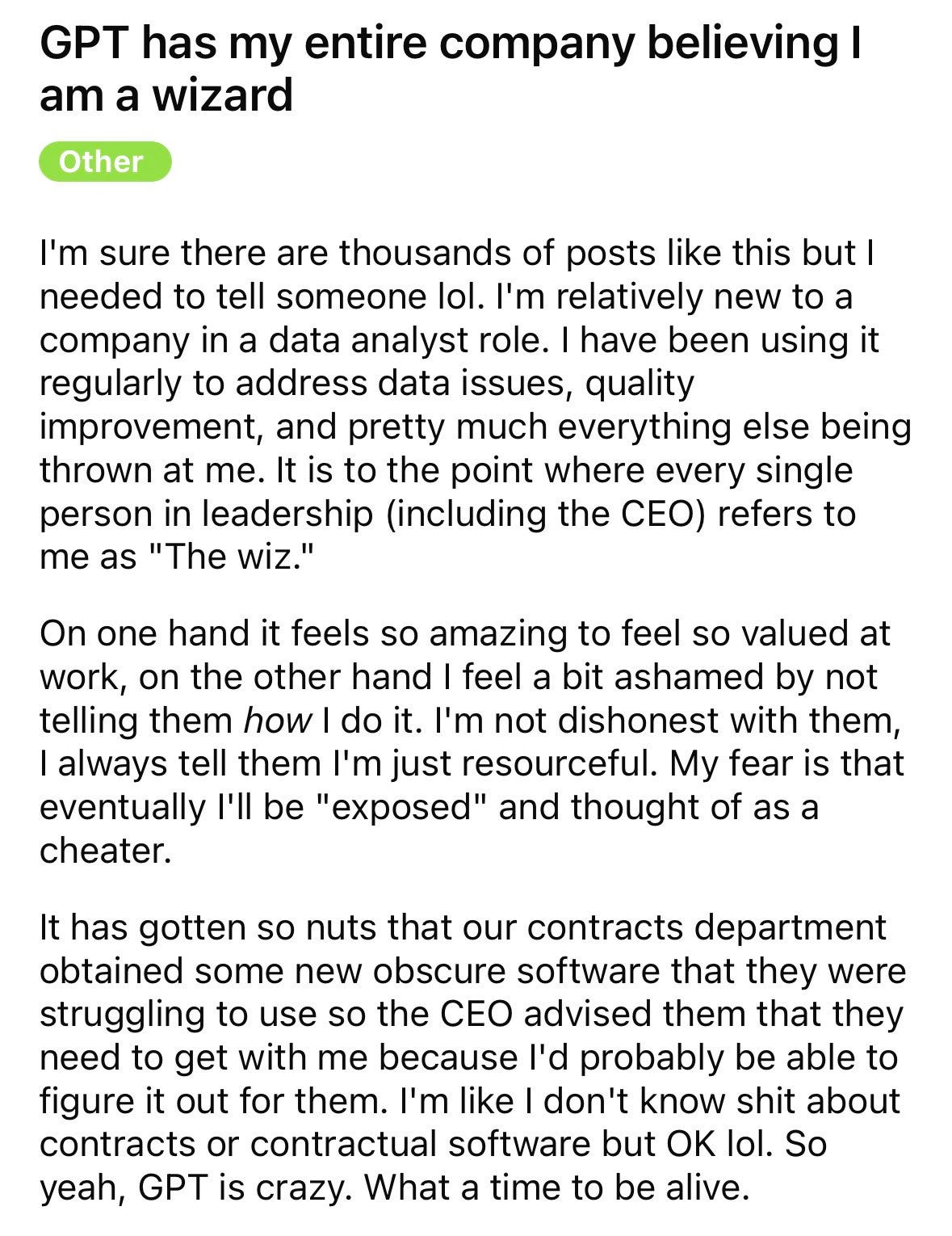

Ignore it. Ignoring AI doesn’t make it go away. Instead, individual employees will find ways to use AI to enhance their own jobs. They won’t tell the organization’s leaders about what they are doing, because they worry about being punished, or that others will value their work less. These are the secret cyborgs I have written about before.

Ban it. This is usually in response to well-intentioned, but sometimes technically incorrect, legal opinions [2]. When AI is banned, your secret cyborgs continue to use it on their phones and home computers. And they still don’t tell you what they are doing.

Centralize it. Increasingly, I see large companies building their own internal ChatGPTs, usually using OpenAI’s APIs, but wrapping it in their own software to be “safe” and controllable. In doing so, they also make decisions about how AI is best used, optimizing their customized software for a use case that is decided from the top-down, based on little experience and knowledge.

Centralization is what organizations are used to doing when faced with a new technology. Centralized email, video conference software, instant messaging, browsers - that way the company can monitor for inappropriate use, secure their data, and, most importantly, set policies for all their workers. In every previous wave of technology, centralized control was a natural consequence of software that can cost millions to install and integrate, making adopting it a long and expensive process.

The problem is that AI, as currently implemented, is not really built for centralization, for three reasons:

There is no corporate advantage. GPT-4, the most advanced AI available, is free for everyone in 169 countries through Bing, or for a small charge from OpenAI. Corporations have no access to anything better. In fact, the APIs that companies use often lag the AIs available widely to the public (you can’t get Code Interpreter through an API, or multimodal input, but you can get them through ChatGPT and Bing). Some companies respond to this with “well, we have tons of proprietary data for the AI to use.” And maybe private data will be useful for fine-tuning and add a huge advantage. But maybe not, and it isn’t usually helping yet (fine-tuning is still in development, as are large memories).

You have no idea what it is good for. There is no reason to believe that the corporate leaders of any organization are going to be wizards at understanding how AI might help a particular employee with a particular task. In fact, they are likely pretty bad at figuring out the best use cases for AI. Individual workers, who are keenly aware of their problems and can experiment a lot with alternate ways of solving them, are far more likely to find uses for a general technology like AI.

Your company AI implementation is terrifying and limited. Employees know that the official corporate AI interface is being monitored, and that they may be penalized if they use it in some ill-defined wrong way. They also often know it is worse than what they can access on their phone. It is very unlikely that you are going to see the most interesting and powerful use cases go through your corporate system.

By trying to make AI like all other technologies, companies are ignoring how transformative it is. One person can do a tremendous amount of work (see how much marketing I could get done with a 30 minute time limit), but it is also different work: tedious tasks are outsourced, interesting tasks are multiplied. The nature of work with AI shifts in ways that are uncomfortable, risky, and potentially powerful.

In addition, our work systems are not built for AI, so we will need to rebuild them. Right now, the most advanced uses of AI are being done by individuals. One example is Jussi Kemppainen of Dinosaurs Are Better, who is developing an entire adventure game, alone. To do that, he is using AI help for every aspect of game design, from character design to coding to dialog to graphics [3]. He is inventing his own workflows to make this happen, and is able to do that because he is not limited to corporate work systems.

There is no way for companies to harness this kind of power and creativity without, in some way, democratizing control over AI. Only innovation driven by workers can actually radically transform work, because only workers can experiment enough on their own tasks to learn how to use AI in transformative ways. And empowering workers is not going to be possible with a top-down solution alone. Instead, consider:

Radical incentives to ensure that workers are willing to share what they learn. If they are worried about being punished, they won’t share. If they are worried they won’t be rewarded, they won’t share. If they are worried that the AI tools that they develop might replace them, or their coworkers, they won’t share. Corporate leaders need to figure out a way to reassure and reward workers, something they are not used to doing.

Empowering user-to-user innovation. Build prompt libraries that help workers develop and share prompts with other people inside the organization. Open up tools broadly to workers to use (while still setting policies around proprietary information), and see what they come up with. Create slack time for workers to develop, and discuss, AI approaches.

Don’t rely on outside providers or your existing R&D groups to tell you the answer. We are in the very early days of a new technology. Nobody really knows anything about the best ways to use AI, and they certainly don’t know the best ways to use it in your company. Only by diving in, responsibly, can you hope to figure out the best use cases.

Holding back the tide in education

Almost every assignment, at every level, can be done, at least in part, by AI. Whatever prejudices you have as a teacher about the quality of AI work, based on what you saw last semester, they are probably now wrong. AI can do high-quality work. It can do math. It makes far fewer obvious mistakes. And it is capable of working with vast amounts of data.

As a demonstration, I pasted my entire last book into Claude 2 and gave the following instructions, without any additional information:

I have to do three things with this:

Write a short book report on the book

Write an essay explaining some plusses and minuses of the book

Write about how to apply the book to my own idea of a startup that makes it easy to order gum delivered to my house

Do all that

And it did. There were few issues or hallucinations I could find, and the materials generally showed the higher order thinking that AI was not capable of simulating just a few months ago.

Given this challenge, many teachers want to turn back the clock: blue book exams. Handwritten essays. Oral exams. These aren’t bad ideas as temporary fixes, but they are only stopgap measures while we decide what comes next in education. There is a reason we did not use most of these approaches before AI came along.

But AI is far from a negative in education. We are very close to the long-term dream of tutoring at scale, and many other advances promise to make the lives of teachers easier, while improving outcomes for students and parents. Next, we need to articulate a vision for what radically changed education could look like. We need to think about how to incorporate AI into how we teach, and how our students learn. There is tremendous opportunity here to democratize access to education and reach out to all students, of all ability levels, but we can’t just keep doing what we always did and hope things won’t change.

Rising strange tides

The only bad way to react to AI is to pretend it doesn’t change anything.

We have considerable agency about how to use AI in our work, schools, and societies, but we need to start with the presumption that we are facing genuine, and widespread, disruption across many fields. The scientists and engineers designing AI, as capable as they are, have no particular expertise on how AI can best be used, or even how and when it should be used. We get to make those decisions. But we have to recognize that the AI tide is rising, and that the time to decide what that means is now.

[1] The numbers also suggest a different adoption speed for AI: 8% of Americans own crypto. 2% of Americans have bought an NFT. VR numbers are a bit sketchy, but maybe 20% of Americans have tried it. 19% of Americans in a survey had tried ChatGPT by April.

[2] Privacy worries are real, but are more complicated than “the AI learns everything I input,” and the major AI companies are trying to address these concerns. ChatGPT includes a private mode for individuals, and quite a few AI companies are eager to sell you servers running LLMs that are compliant with the highest data privacy standards. In short, these are solvable concerns for most companies (but you should still be very careful with private data).

[3] He is releasing the game for free, in large part due to the controversy over using AI for game design in the game industry, especially with regard to AI generated art.

