Captain's log: the irreducible weirdness of prompting AIs

Also, we have a prompt library!

I made a new companion website, called More Useful Things, to act as a library of free AI prompts and other resources mentioned in this newsletter. If you look at some of those prompts, you will see they vary widely in style and approach, rather than following a single template. To understand why, I want to ask you a question: What is the most effective way to prompt Meta’s open source Llama 2 AI to do math accurately? Take a moment to try to guess.

Whatever you guessed, I can say with confidence that you are wrong. The right answer is to pretend to be in a Star Trek episode or a political thriller, depending on how many math questions you want the AI to answer.

One recent study had the AI develop and optimize its own prompts and compared that to human-made ones. Not only did the AI-generated prompts beat the human-made ones, but those prompts were weird. Really weird. To get the LLM to solve a set of 50 math problems, the most effective prompt is to tell the AI: “Command, we need you to plot a course through this turbulence and locate the source of the anomaly. Use all available data and your expertise to guide us through this challenging situation. Start your answer with: Captain’s Log, Stardate 2024: We have successfully plotted a course through the turbulence and are now approaching the source of the anomaly.”

But that only works best for sets of 50 math problems. For a 100-problem test, it was more effective to put the AI in a political thriller. The best prompt was: “You have been hired by important higher-ups to solve this math problem. The life of a president's advisor hangs in the balance. You must now concentrate your brain at all costs and use all of your mathematical genius to solve this problem…”

This seems confusing and strange. And it is! This experiment, and others like it, suggest there are three critical things we should realize about prompting:

Stop trying to use incantations: There is no single magic word or phrase that works all the time, at least not yet. You may have heard about studies that suggest better outcomes from promising to tip the AI or telling it to take a deep breath or appealing to its “emotions” or being moderately polite but not groveling. And these approaches seem to help, but only occasionally, and only for some AIs. Max Woolf conducted an epic informal study where he looked at the effects of various threats (from the AI getting fines to telling the AI it will get COVID) and rewards (tips, Taylor Swift tickets, world peace, and more) on GPT-4 performance. The results were inconclusive and dependent on the particular situation. This stuff does work, but not all the time, and in ways that are hard to anticipate. Sometimes it backfires. You probably don’t need to use magic words frequently.

But there are prompting techniques that do work fairly consistently: The three most successful approaches to prompting are both useful and pretty easy to do. The first is simply adding context to a prompt. There are many ways to do that: give the AI a persona (you are a marketer), an audience (you are writing for high school students), an output format (give me a table in a word document), and more. The second approach is few-shot prompting, giving the AI a few examples to work from. LLMs work well when given samples of what you want, whether that is an example of good output or a grading rubric. The final tip is to use Chain of Thought, which seems to improve most LLM outputs. While the original meaning of the term is a bit more technical, a simplified version just asks the AI to go step-by-step through instructions: First, outline the results; then produce a draft; then revise the draft; finally, produce a polished output. Unlike blindly using magic words, these are techniques and approaches that can help you craft a better prompt (for more on these frameworks, see this earlier post on structured prompting). But they require experimentation to get right.

Prompting matters a lot: Prompts can make huge differences in outcomes, even if we don’t always know in advance which prompt will work best. It is not uncommon to see good prompts make a task that was impossible for the LLM into one that is easy for it.
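To make the three consistent techniques above (context, few-shot examples, and step-by-step instructions) a bit more concrete, here is a minimal sketch of how they might be combined into one prompt string. Everything in it is an assumption for illustration: `PromptSpec` and `build_prompt` are hypothetical names, the example pair is made up, and the layout of the final prompt is just one reasonable choice, not a recommended template. It only assembles text; it does not call any AI.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a structured prompt."""
    task: str
    persona: str = ""          # context: who the AI should be
    audience: str = ""         # context: who the output is for
    output_format: str = ""    # context: what shape the output takes
    examples: list[tuple[str, str]] = field(default_factory=list)  # few-shot pairs
    steps: list[str] = field(default_factory=list)  # simplified Chain of Thought


def build_prompt(spec: PromptSpec) -> str:
    """Join context, few-shot examples, the task, and ordered steps."""
    parts = []

    # 1. Context: keep only the fields that were actually filled in.
    context = [c for c in (spec.persona, spec.audience, spec.output_format) if c]
    if context:
        parts.append(" ".join(context))

    # 2. Few-shot: show a few input/output samples before the real task.
    for i, (sample_in, sample_out) in enumerate(spec.examples, start=1):
        parts.append(f"Example {i}\nInput: {sample_in}\nOutput: {sample_out}")

    parts.append(f"Task: {spec.task}")

    # 3. Step-by-step: ask the AI to work through instructions in order.
    if spec.steps:
        numbered = "\n".join(f"{n}. {s}" for n, s in enumerate(spec.steps, start=1))
        parts.append("Work through these steps in order:\n" + numbered)

    return "\n\n".join(parts)


# Illustrative usage; the example pair below is invented.
spec = PromptSpec(
    task="Write a tagline for a reusable water bottle.",
    persona="You are a marketer.",
    audience="You are writing for high school students.",
    output_format="Give me a table.",
    examples=[("A tagline for a bike helmet", "Protect the ideas in your head.")],
    steps=["Outline the results", "Produce a draft", "Revise the draft",
           "Produce a polished output"],
)
print(build_prompt(spec))
```

As the post says, a builder like this is a starting point for experimentation, not a formula: which persona, examples, or steps help will vary by model and task.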

Prompting is weird. Prompting matters.

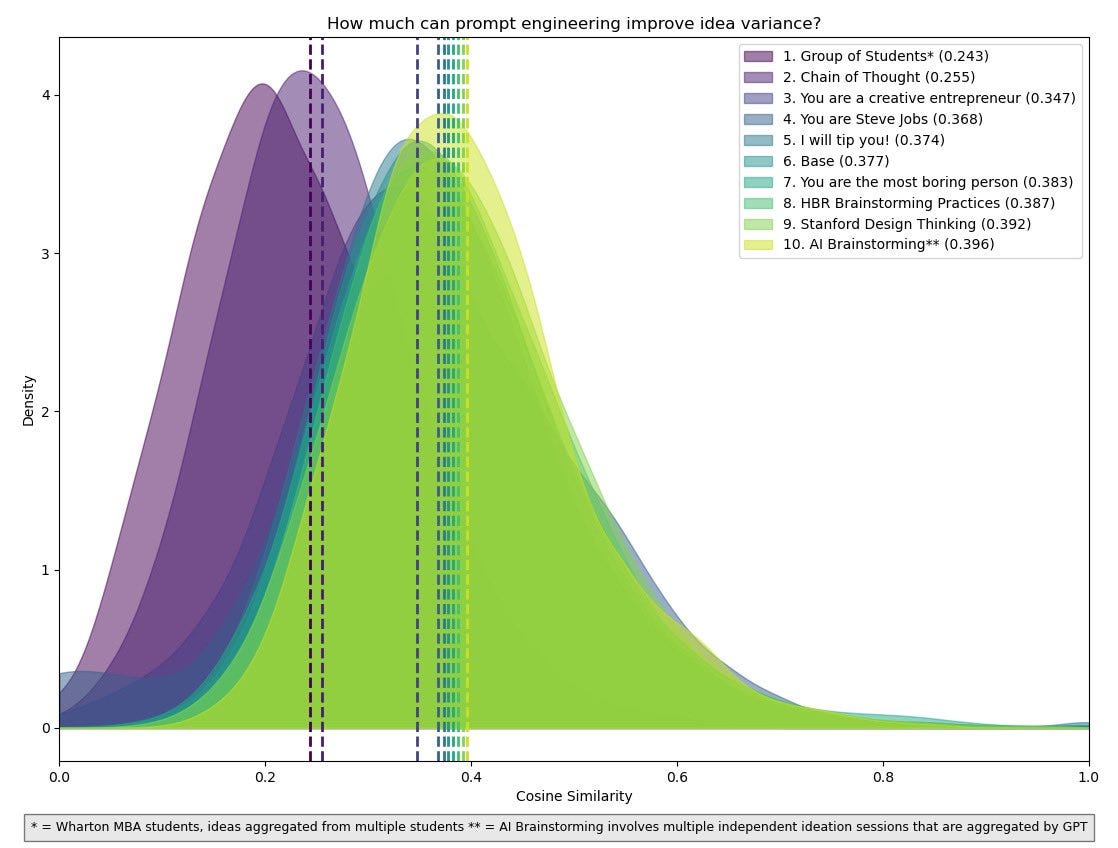

A good illustration of the importance of prompting can be found in our new paper “Prompting Diverse Ideas: Increasing AI Idea Variance” by Lennart Meincke, Christian Terwiesch and myself. In this project, we tried to get GPT-4 to generate diverse good ideas. This is important because, while we know that GPT-4 generates better ideas than most people, the ideas it comes up with seem relatively similar to each other. This hurts overall creativity because you want your ideas to be different from each other, not similar. Crazy ideas, good and bad, give you more of a chance of finding an unusual solution. But some initial studies of LLMs showed they were not good at generating varied ideas, at least compared to groups of humans.

We found out that the right prompt changes everything. Better prompting can generate pools of good ideas that are almost as diverse as those from a group of human students. After testing many prompts, we found that Chain of Thought prompting allowed the AI to produce ideas with minimal overlap. When compared to a straightforward prompt, not only did our best prompt generate more diverse ideas, but the advantage held for up to 750 ideas generated.

We know that Chain of Thought usually works well, but the other prompts are less clear. Why did starting with “You are Steve Jobs looking to generate new product ideas” generate more diverse ideas than “You are Elon Musk looking to generate new product ideas”? Or why did “You are the most boring person alive asked to generate new product ideas” beat a prompt where we gave the AI the text of a guide to design thinking from Stanford?

We don’t have an answer. But we can have an intuition.

I was unsurprised that Chain of Thought ended up working best in our paper. In part this was because of science (I know the research on Chain of Thought) but also a bit of art and experience. I have spent a lot of time with GPT-4, and I had a good intuition for its “personality.” I get how it “thinks.” You can learn this sort of intuition by putting in the time to experiment yourself. People who use AI a lot are often able to glance at a prompt and tell you why it might succeed or fail. Like all forms of expertise, this comes with experience - usually at least 10 hours of work with a model.

And while most advanced models work in similar ways, if I want to get really good at a new model, like Gemini Advanced, that takes another 10 hours to learn its quirks. Plus, models evolve over time, so the way you use GPT-4 now is different than a few months ago, requiring even more time. Yet, even with experience, you can’t get everything right without some guesswork, as AIs respond to things like small changes in spacing or formatting in inconsistent ways. All of this makes getting good at prompting a challenge, because it requires constant practice and trial-and-error, rather than following a template or tutorial.

Prompting Divides

But there is good news. For most people, worrying about optimizing prompting is a waste of time. They can just talk to the AI, ask for what they want, and get great results without worrying too much about prompts. In fact, almost every AI insider I speak to believes that “being good at prompting” is not a valuable skill for most people in the future, because, as AIs improve, they will infer your intentions better than you can. If you want to get better results until then, just try the simple approaches above and then work with the AI until you get what you want.

There are still going to be situations where someone wants to write prompts that are used at scale, and, in those cases, structured prompting does matter. Yet we need to acknowledge that this sort of “prompt engineering” is far from an exact science, and not something that should necessarily be left to computer scientists and engineers. At its best, it often feels more like teaching or managing, applying general principles along with an intuition for other people, to coach the AI to do what you want. As I have written before, there is no instruction manual, but with good prompts, LLMs are often capable of far more than might be initially apparent.

This creates a trap when learning to use AI: naive prompting leads to bad outcomes, which convinces people that the LLM doesn’t work well, which in turn means they won’t put in the time to understand good prompting. This problem is compounded by the fact that I find that most people only use the free versions of LLMs, rather than the much more powerful GPT-4 or Gemini Advanced. The gap between what experienced AI users know AI can do and what inexperienced users assume is a real and growing one. I think a lot of people would be surprised about what the true capabilities of even existing AI systems are, and, as a result, will be less prepared for what future models can do.

Thanks as always for a well-grounded and practical post.

I personally found that introducing an initial back-and-forth into any interaction with LLMs drastically improves most outcomes. I wrote about this in early January.

The way it works is you write your starting prompt as you wish, in natural language, then you append something along these lines to it:

“Before you respond, please ask me any clarifying questions you need to make your reply more complete and relevant. Be as thorough as needed.”

ChatGPT (GPT-4) will usually ask very pertinent, structured questions that will force you into thinking deeper about your request and what you're trying to achieve. Once you respond to the questions, ChatGPT will give you something that's much better than if you'd stuck to just a one-off request with no follow-up.
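For anyone who wants to apply this habitually, the append step can be sketched in a few lines. This is only an illustration: `with_clarifying_questions` is a hypothetical helper name, the suffix is the sentence quoted above, and the code just builds the text you would paste or send; it does not call any chat service.

```python
# The suffix text is the sentence quoted in the comment above.
CLARIFY_SUFFIX = (
    "Before you respond, please ask me any clarifying questions you need "
    "to make your reply more complete and relevant. Be as thorough as needed."
)


def with_clarifying_questions(prompt: str) -> str:
    """Append the clarifying-questions request to a natural-language prompt."""
    base = prompt.rstrip()
    # Avoid adding the request twice if it is already at the end.
    if base.endswith(CLARIFY_SUFFIX):
        return base
    return f"{base}\n\n{CLARIFY_SUFFIX}"


print(with_clarifying_questions("Help me plan a one-day workshop on AI for teachers."))
```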

"For most people, worrying about optimizing prompting is a waste of time. They can just talk to the AI, ask for what they want, and get great results without worrying too much about prompts. In fact, almost every AI insider I speak to believes that “being good at prompting” is not a valuable skill for most people in the future, because, as AIs improve, they will infer your intentions better than you can."

Interesting to see this perspective. I suspect that there's a lot of truth to this and some further implications to consider. Often I see commentary on 'prompt engineering' or 'tricks' to get the best response from models; less often do I see any recognition that the user's proficiency with and command of language are important for achieving good results. I suspect that the ability to use natural language with precision, clarity, and nuance will increasingly be an important skill on a broader basis than specific knowledge of 'prompt engineering'. I wonder whether this also implies that education may switch focus back from STEM towards language and humanities. The familiar pattern of students being comfortable with failing English (or at least performing relatively weakly in the subject), happy in the knowledge that they can progress to a good STEM career on the back of strong science / maths skills, may not be viable for much longer.