Say you wanted to get to our stellar neighbor, Barnard’s Star, as fast as possible. When should you leave?

You might be tempted to answer “as soon as you can,” but you should reconsider. Using today’s rocket technology, this six-light-year journey would take 12,000 years. The catch here is “today’s rocket technology.” A theoretically possible fusion-powered spaceship could make the trip in less than fifty years. Given enough time (and avoiding apocalypse or collapse), humanity will likely develop that new fusion drive. That changes the equation. You don’t want to be the person who set out on a 12,000-year voyage (or more likely their great-great-great-and-so-on grandchild, born aboard the ship), only to be passed by a flurry of rockets launched 11,950 years after your departure, piloted by people whose ancestors lived comfortably on Earth the entire time. Better to be lazy and wait for technology to improve before ever setting out into the stars.

When technology is improving quickly enough, there are types of problems that work just like the interstellar journey, where waiting for technology to get better beats acting immediately. This is called a Wait Calculation. It was first used for the Barnard’s Star scenario, but the logic turns out to apply to other problems as well. For example, there are certain classes of mathematical problems where the computations would take decades, so you are better off waiting for Moore’s Law to kick in, and computers to improve, before even starting to try to solve them.
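To make the trade-off concrete, here is a minimal Python sketch of a Wait Calculation. It is an illustration, not a forecast. The 12,000-year trip time comes from the example above; everything else is an assumption made for the sketch, including the idea that trip time halves at a steady rate, the 100-year halving period, and the function names `total_time` and `best_wait`.

```python
def total_time(wait_years, trip_years_now, halving_years):
    """Years from today until arrival if departure is delayed by wait_years.

    Assumes (hypothetically) that the trip time halves every halving_years.
    """
    trip_years_later = trip_years_now * 0.5 ** (wait_years / halving_years)
    return wait_years + trip_years_later


def best_wait(trip_years_now, halving_years, horizon_years):
    """Scan whole-year delays up to horizon_years; return the earliest-arriving one."""
    return min(
        range(horizon_years + 1),
        key=lambda wait: total_time(wait, trip_years_now, halving_years),
    )


# Illustrative numbers only: a 12,000-year trip today, halving every 100 years.
wait = best_wait(trip_years_now=12_000, halving_years=100, horizon_years=12_000)
print("wait (years):", wait)
print("arrival (years from now):", round(total_time(wait, 12_000, 100)))
```

Under these made-up numbers, waiting several centuries gets you there far sooner than leaving today. If the trip is already short compared with the rate of improvement (say `best_wait(10, 100, 50)`), the best delay is zero and you should simply go.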

Which brings us to AI. AI has all the characteristics of a technology that requires a Wait Calculation. It is growing in capabilities at a better-than-exponential pace (though the pace of AI remains hard to measure), and it is capable of doing, or helping with, a wide variety of tasks. If you are planning on writing a novel, or building an AI software solution at your business, or writing an academic paper, or launching a startup, should you just… wait? I can think of at least two major projects where I should have waited, if I had known how good AI was going to get.

We could have waited

I have spent much of the last decade or so trying to build games to help people learn and innovate. And all of that work suddenly became compressed, warped, and accelerated as a result of the release of ChatGPT and its successors last year. The teams I worked with did valuable research, and the effort produced teaching experiences with real impact, but, at some point, if we could have anticipated the evolution of AI, we could have waited.

Take one example: I worked with award-winning board game designer Justin Gary (who has his own Substack on game design) to build a game to walk teams through the complex process of innovation - basically building convergent and divergent thinking into a game teams could play together. The Breakthrough Game worked really well, and teams often generated a dozen or more good ideas over the course of an hour of play. Buoyed by the initial success, Justin and I spoke frequently to consider how to build an improved version.

We could have waited.

GPT-4 came along and, unexpectedly, it beat most humans at innovation. Instead of building a new version of the game, I tried cutting the humans out of the loop. I put together a GPT that takes the AI through convergent and divergent “thinking” processes and just gives you a Word document with a list of a few dozen potential ideas in a couple of minutes. It is free, and anyone can use it (over a thousand people have in the last couple of days).[1]

A problem that seemed unsolvable by computers was suddenly easy to do with AI, altering a major project. And this wasn’t the only time. For nearly a decade, I have been helping build Wharton Interactive, where I have been working with a team of talented people building games and simulations to teach key business skills. Each game took a tremendous amount of time and work, so we spent a huge amount of effort developing toolkits to make it easier for people to create pedagogically-grounded teaching games.

We could have waited.

Now, we can build simulations with prompts that do much of what once required elaborate custom software. For example, here is a free negotiation tutor GPT we developed; it integrates instruction and simulation. Feel free to use it yourself, or in a class.

Of course, this story is an oversimplification, to a degree. We could never have built these tools if we didn’t do the hard intellectual work of learning how to make them with our own limited human abilities first. And when we build games, we still use the work of expert fiction writers, coders, and designers - the AI can’t do everything (at least not in the immediate future). But it does suggest we need to start thinking about work in a new way.

The Two Questions that Matter

There are really two questions that matter when it comes to the Wait Calculation for AI: How good? And how fast? In other words, how good can this particular run of AI technology (either LLMs or their immediate successors) get in terms of human achievement; and how fast will this happen?

No one actually knows the answer, of course, and, in some ways, it is a particularly awkward time to predict the future of AI. Despite the massive advances in the field, GPT-4 remains the world’s best AI, over a year after its preliminary release. A large number of companies are building AI models that they say will surpass it soon, and OpenAI itself keeps hinting broadly at its own upcoming releases. We just haven’t seen them yet.

Still, it is worth taking the predictions of key figures in the AI space seriously. In a talk a few days ago, Sam Altman, CEO of OpenAI, apparently suggested to a group of entrepreneurs that building around the limitations of GPT-4 would be a mistake, because GPT-5 will fix most of them. He also repeated his frequent prophecies that AGI (an AI that can beat humans at most tasks) is coming soon. To be clear, this is far from a universal view, and many researchers doubt AGI is imminent. Even so, few people in the AI field expect practical progress to slow down in 2024.

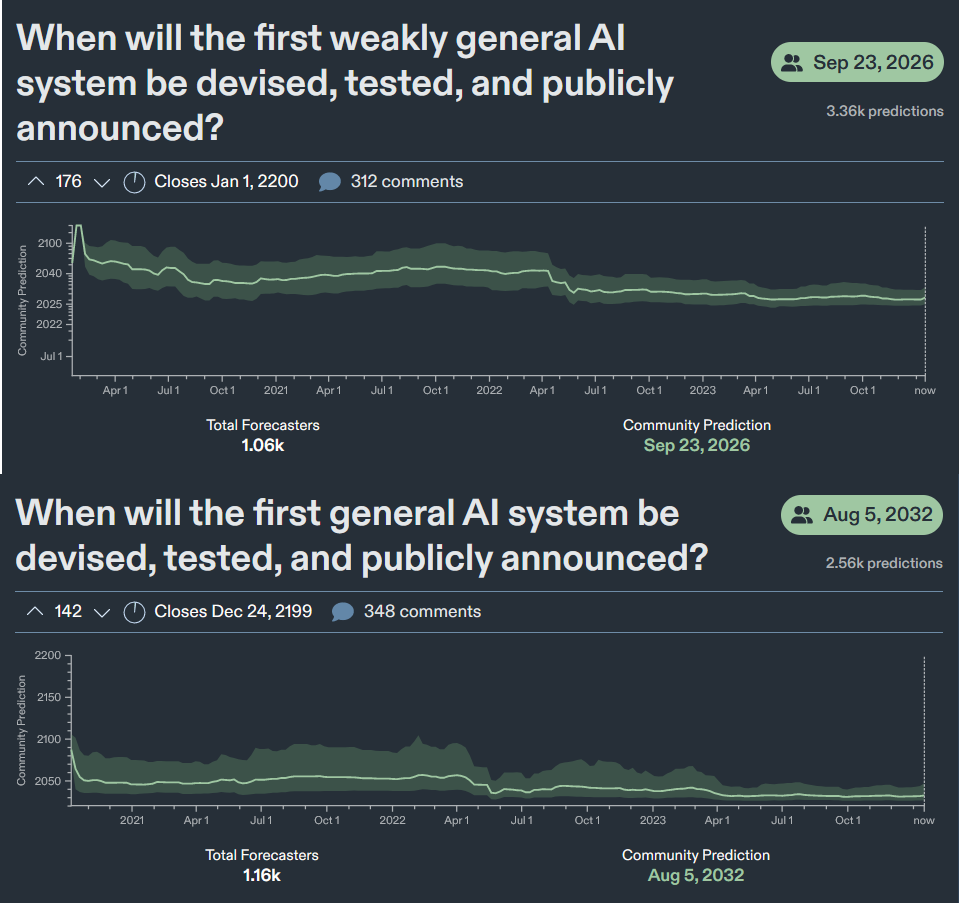

Another way to look at the how good and how fast questions is to look at prediction markets, where people bet on the dates that particular events will occur. These are often surprisingly accurate, and one of the key markets puts the date of “weak” AGI (at the 75th percentile of human abilities across subjects) as happening in a little over two years, and full AGI within a decade. Again, you don’t have to believe these predictions, but it is worth including them in your Wait Calculation.

So, for the how good does it get question, the answer is likely “significantly better than GPT-4 (with a chance of AGI on the side).” And for how fast the answer is “keeping up the pace of the last year, at least for now.” They are not satisfying or precise answers, but they are enough to inform a Wait Calculation.

Should you wait?

What does a typical software development project have in common with writing a novel? They both take many months, and they are both things that AI can do reasonably well, but far from expert level, today. If you are contemplating doing either of these things, you should perform a Wait Calculation. Let’s make the factors involved in the Calculation explicit, though we don’t have to use formal math. Here is what anyone considering a project in an AI-adjacent field should consider:

How long will the project realistically take to complete?

Given timelines for AI development, how much more advanced could AI get by that point? This, by the way, is where you need to have hands-on experience with the most current AI systems. If you haven’t spent at least 10 hours trying to get work done with GPT-4, you probably don’t know what AI can do today, and will have much less of a sense of its limitations and how it is advancing.

How much time could you save by using the AIs developed between now and the end of the project? If you save more time than you spend waiting for the development of AI, then being lazy may pay off.

Will the AI just be able to do the thing before the end of the project? Or will the meaning of the thing shift? There are categories of tasks that AI will clearly be able to do better than humans in the near future, and you should think hard about whether your project fits into those categories. However, just because AI may be able to do it doesn’t mean you shouldn’t — we still play chess even though computers can beat humans easily — but it does mean that you should pay attention to ways the meaning or approach to your project might change.
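The first three questions above can be reduced to a rough comparison. The Python sketch below is one hypothetical way to write it down: the function names, the idea of a single `speedup_after_wait` multiplier, and the sample numbers are all assumptions for illustration, and guessing that multiplier is exactly the hard part.

```python
def months_until_done(project_months_now, wait_months=0.0, speedup_after_wait=1.0):
    """Calendar months from today until the project is finished.

    speedup_after_wait is a guess: 2.0 means the work goes twice as fast
    with the AI tools available once the wait is over.
    """
    return wait_months + project_months_now / speedup_after_wait


def waiting_pays_off(project_months_now, wait_months, speedup_after_wait):
    """True if waiting first, then working faster, finishes sooner than starting now."""
    start_now = months_until_done(project_months_now)
    start_later = months_until_done(project_months_now, wait_months, speedup_after_wait)
    return start_later < start_now


# A 24-month project, a 6-month wait, and a guessed 2x speedup afterwards.
print(waiting_pays_off(24, 6, 2.0))
# A 6-month project with the same wait and speedup.
print(waiting_pays_off(6, 6, 2.0))
```

With these numbers, waiting helps the long project (6 + 12 = 18 months instead of 24) and hurts the short one (6 + 3 = 9 months instead of 6). The sketch only counts calendar time, so it says nothing about the fourth question, whether the meaning of the project itself will shift.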

But Wait Calculations can also be dangerous and disincentivizing, so you may want to ignore them entirely, or at least ask yourself these questions:

Does it create a learning trap? Putting off something because AI can eventually do it can also be a recipe for disaster. For one thing, waiting for the AI robs you of the opportunity to learn. If I had not spent years building games, I would never have been able to get an AI to help me develop one. Note, however, that building a project for learning, rather than execution, requires a different, more experimental approach. So even if you decide to ignore the Wait Calculation, you may want to approach the work differently to maximize what you learn, and not just what you do.

Does it create an incentive trap? Waiting ignores the intrinsic joy of doing things. It may also disincentivize you from taking any action, since you can just put everything off in hopes that an AI can do it, someday. Just because your Wait Calculation says waiting saves you effort does not mean that the effort would not have been worthwhile. Consider your own motivation and goals.

What if you are wrong? There are plenty of AI skeptics who believe that progress will come to an end soon, or that AI is much less impressive than it appears at first glance, and that much of its apparent success is a mirage. You should take these views into account, and not automatically buy into hype. We don’t know what the future really holds.

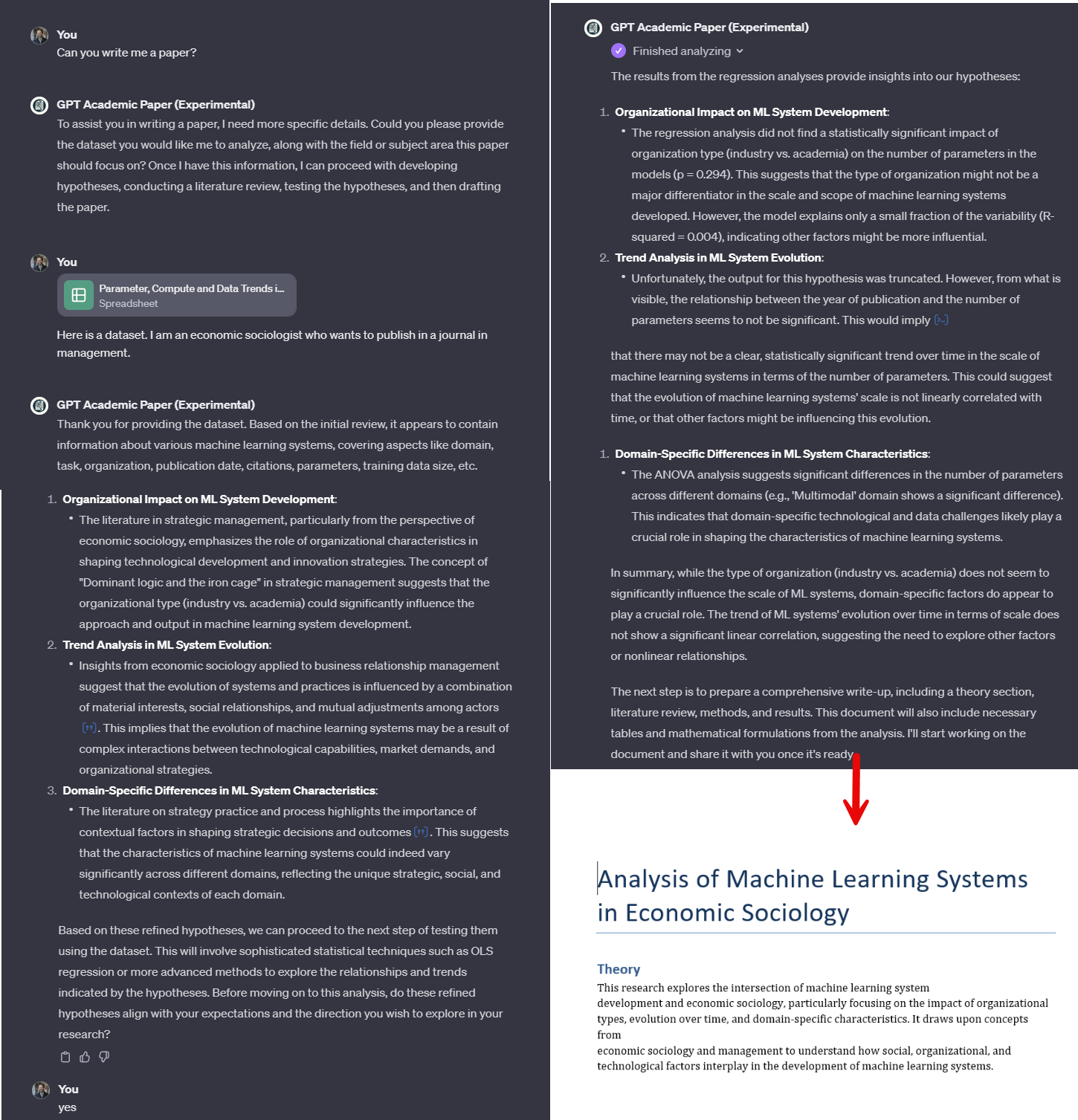

Returning to the timeline for AI as an academic, one thought crosses my mind: the time it takes to go from a new idea for a project to publishing a completed paper is often greater than the timeline for reaching AGI according to prediction markets. I have had papers published in far less time (a year or two). But I also had a paper that I started working on in 2006. It was finally officially published on the 29th of December in 2016 - a decade of waiting. So, as an experiment to see what the future holds, I decided to create a GPT that would write academic papers. It isn’t up to the level of a good academic paper… but it also isn’t a terrible first try for a PhD student - you can try it yourself.

Do not take Wait Calculations too seriously. I would not let a Wait Calculation hold me back from anything I want to do. However, they are an incentive to start thinking about the bigger picture. You cannot launch an AI project today, whether an internal software implementation or a new startup, without considering what is coming. You will need to worry about whether the nature of the task will shift partway through the effort or become obsolete entirely. We don’t know where things will end up, or for how long the pace will continue, but we need to start taking the timeline of AI development seriously in our longer-term decisions. Before diving into your next project, ask yourself: is it time to leap, or time to wait?

GPTs are shareable prompts that can be bundled with documents and actions to create a sort of program, or primitive agent. To use them, you need ChatGPT Plus. The current implementation by OpenAI is pretty bare-bones: I can’t easily provide documentation, updates, or changes to the GPTs I publish, and I can’t share them with people who have not paid for ChatGPT Plus, even if I want to pay the fees. They will also eventually have some sort of payment system, but how that might work is unclear. For now, I think they are an interesting way to share complex prompts, with potential for the future.

The reason the interstellar travellers could be lapped by later voyagers is that the already-departed travellers could not adopt the new propulsion technology developed on Earth. With AI, however, when there's a new model released, the projects currently in progress can adopt that model, bringing with them their understanding of the problem domain, their curated datasets, their tailored evaluations, etc.

The lesson here may be more "be ready to adopt progress as it arrives" than "wait".

This was a good insight. However, I hope people don't confuse "wait" with "don't". Even if a task is likely to be achievable by an AI, doing the learning phase of a project is still valuable so we can evaluate the results.