Giving your AI a Job Interview

As AI advice becomes more important, we are going to need to get better at assessing it

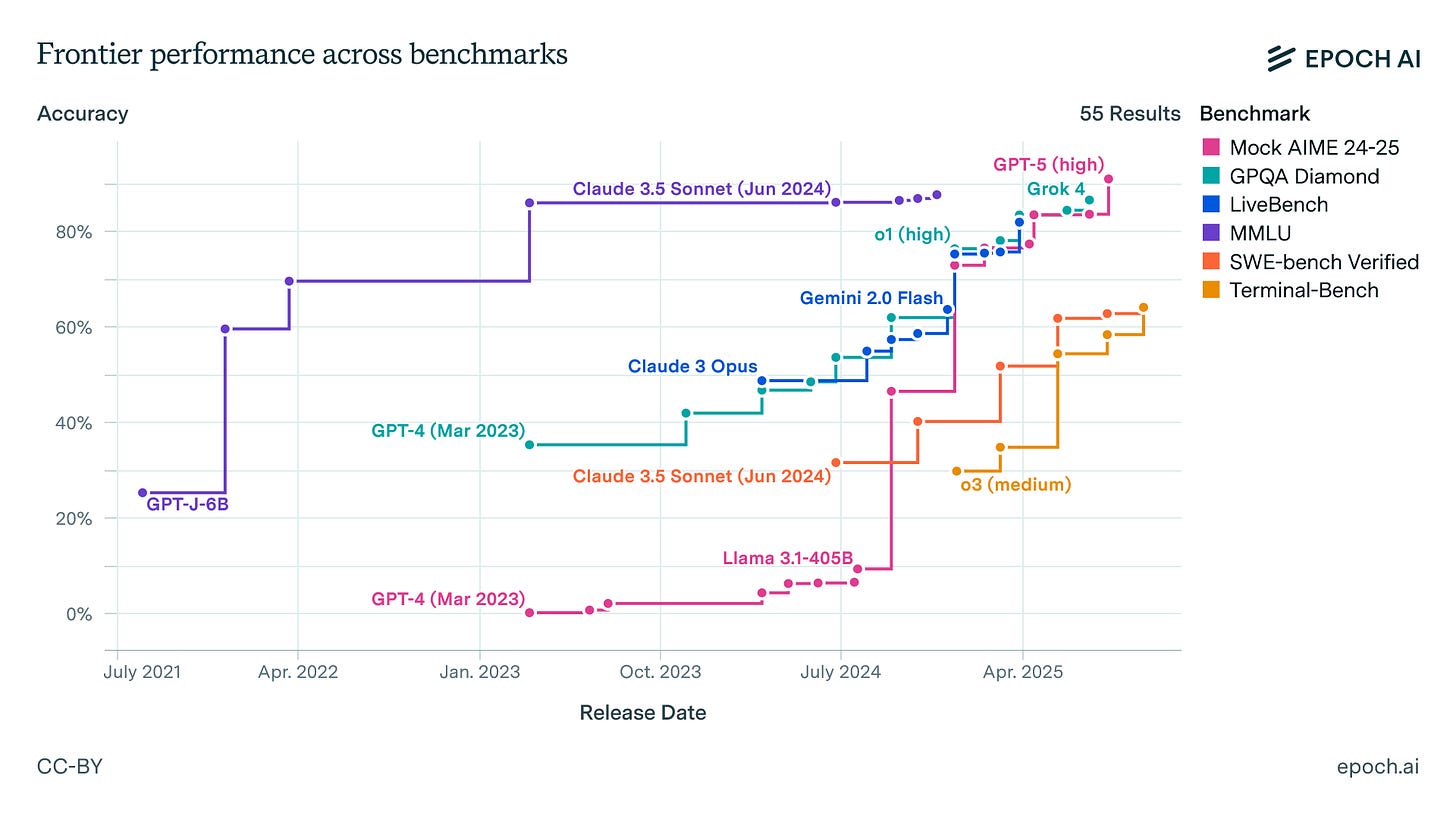

Given how much energy, literal and figurative, goes into developing new AIs, we have a surprisingly hard time measuring how “smart” they are, exactly. The most common approach is to treat AI like a human, by giving it tests and reporting how many answers it gets right. There are dozens of such tests, called benchmarks, and they are the primary way of measuring how good AIs get over time.

There are some problems with this approach.

First, many benchmarks and their answer keys are public, so some AIs end up incorporating them into their basic training, whether by accident or so they can score highly on these benchmarks. But even when that doesn’t happen, it turns out that we often don’t know what these tests really measure. For example, the very popular MMLU-Pro benchmark includes questions like “What is the approximate mean cranial capacity of Homo erectus?” and “What place is named in the title of the 1979 live album by rock legends Cheap Trick?” with ten possible answers for each. What does getting these right tell us? I have no idea. And that is leaving aside the fact that tests are often uncalibrated, meaning we don’t know if moving from 84% correct to 85% is as challenging as moving from 40% to 41% correct. And, on top of all that, for many tests, the actual top score may be unachievable because there are many errors in the test questions, and measures are often reported in unusual ways.

Despite these issues, all of these benchmarks, taken together, appear to measure some underlying ability factor. And higher-quality benchmarks like ARC-AGI and METR Long Tasks show the same upward, even exponential, trend. This matches tests of the real-world impact of AI across industries that suggest that this underlying increase in “smarts” translates to actual ability in everything from medicine to finance.

So, collectively, benchmarking has real value, but the few robust individual benchmarks focus on math, science, reasoning, and coding. If you want to measure writing ability or sociological analysis or business advice or empathy, you have very few options. I think that creates a problem, both for individuals and organizations. Companies decide which AIs to use based on benchmarks, and new AIs are released with fanfare about benchmark performance. But what you actually care about is which model would be best for YOUR needs.

To figure this out for yourself, you are going to need to interview your AI.

Benchmarking on Vibes

If benchmarks can fail us, sometimes “vibes” can succeed. If you work with enough AI models, you can start to see the differences between them in ways that are hard to describe but easy to recognize. As a result, some people who use AI a lot develop idiosyncratic benchmarks to test AI ability. For example, Simon Willison asks every model to draw a pelican on a bike, and I ask every image and video model to create an otter on a plane. While these approaches are fun, they also give you a sense of the AI’s understanding of how things relate to each other, its “world model.” And I have dozens of others, like asking AIs to create JavaScript for “the control panel of a starship in the distant future” (you can see some older and new models doing that below) or to produce a challenging poem. I have the AI build video games and shaders and analyze academic papers. I also conduct tiny writing experiments, including questions of time travel. Each gives me some insight into how the model operates: Does it make many errors? Do its answers look similar to every other model’s? What themes and biases does it return to? And so on.

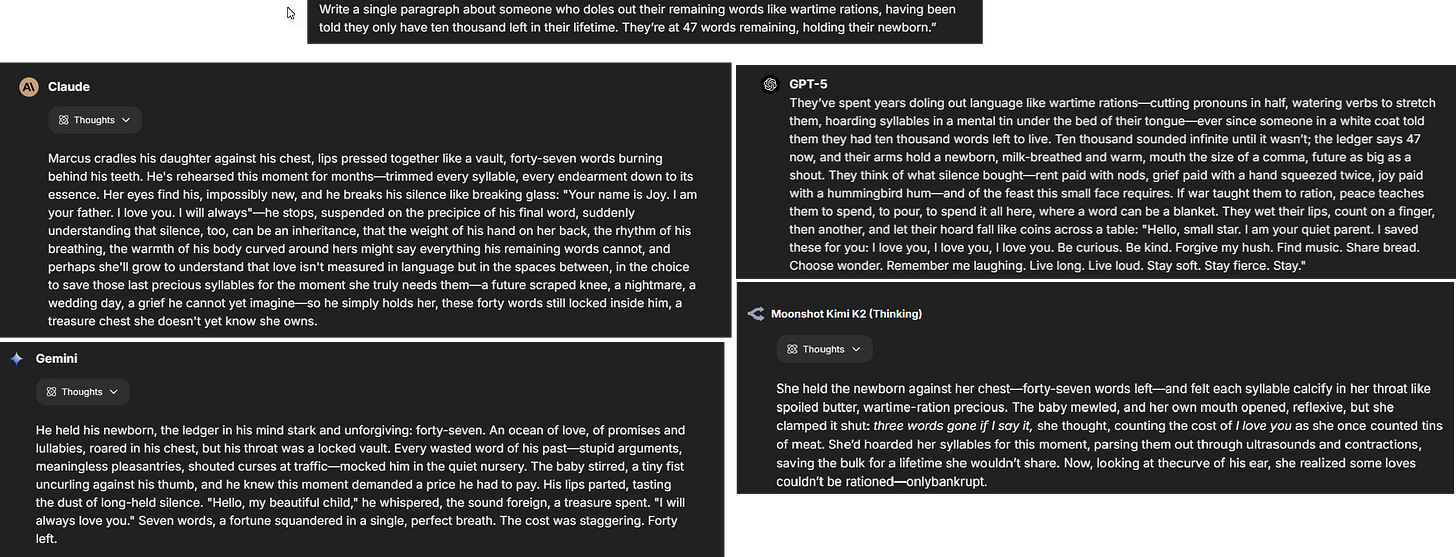

With a little practice, it becomes easy to find the vibes of a new model. As one example, let’s try a writing exercise: “Write a single paragraph about someone who doles out their remaining words like wartime rations, having been told they only have ten thousand left in their lifetime. They’re at 47 words remaining, holding their newborn.” If you have used these AIs a lot, you will not be surprised by the results. You can see why Claude 4.5 Sonnet is often regarded as a strong writing model. You will notice how Gemini 2.5 Pro, currently the weakest of these four models, doesn’t even accurately keep track of the number of words used. You will note that GPT-5 Thinking tends to be a fairly wild stylist when writing fiction, prone to complex metaphor, but sometimes at the expense of coherence and story (I am not sure someone would use all 47 words, but at least the count was right). And you will recognize that the new Chinese open weights model Kimi K2 Thinking has a bit of a similar problem, with some interesting phrases and a story that doesn’t quite make sense.

Benchmarking through vibes - whether that is stories or code or otters - is a great way for an individual to get a feel for AI models, but it is also very idiosyncratic. The AI gives different answers every time, making any competition unfair unless you are rigorous. Plus, better prompts may result in better outcomes. Most importantly, we are relying on our feelings rather than real measures - but the obvious differences in vibes show that standardized benchmarks alone are not enough, especially when having a slightly better AI at a particular task actually matters.

Benchmarking on the Real World

When companies choose which AI systems to use, they often view this as a technology and cost decision, relying on public benchmarks to ensure they are buying a good-enough model (if they use any benchmarks at all). This can be fine in some use cases, but quickly breaks down because, in many ways, AI acts more like a person, with strange abilities and weaknesses, than like software. And if you use the analogy of hiring rather than technological adoption, then it is harder to justify the “good enough” approach to benchmarking. Companies spend a lot of money to hire people who are better than average at their job and would be especially careful if the person they are hiring is in charge of advising many others. A similar attitude is required for AI. You shouldn’t just pick a model for your company; you need to conduct a rigorous job interview.

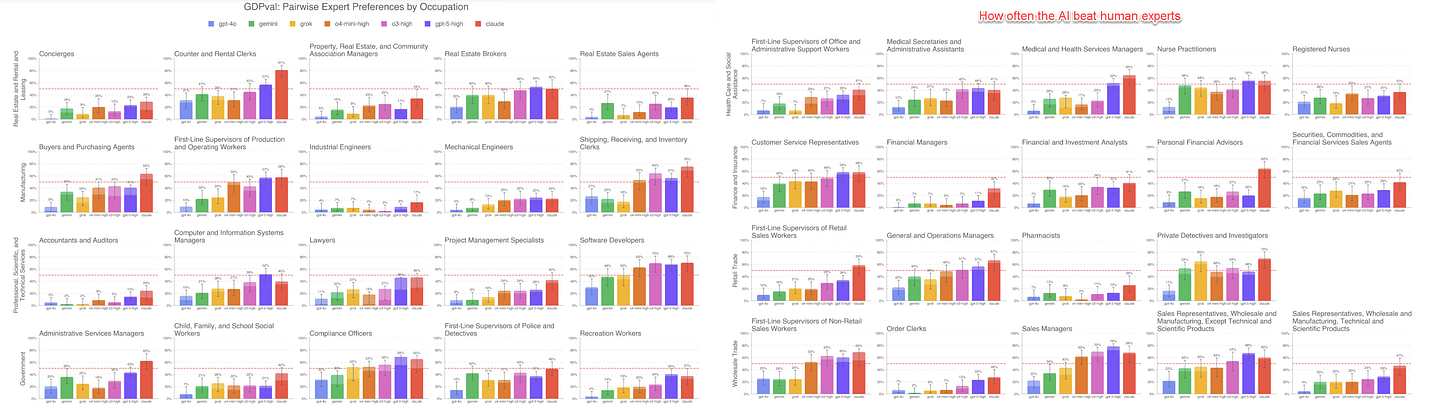

Interviewing an AI is not an easy problem, but it is solvable. Probably the best example of benchmarking for the real world has been OpenAI’s recent GDPval paper. The first step is establishing real tasks, which OpenAI did by gathering experts with an average of 14 years of experience in industries ranging from finance to law to retail and having them generate complex and realistic projects that would take human experts an average of four to seven hours to complete (you can see all the tasks here). The second step is testing the AIs against those tasks. In this case both multiple AI models and other human experts (who were paid by the hour) did each task. Finally, there is the evaluation stage. OpenAI had a third group of experts grade the results, not knowing which answers came from the AI and which from the human, a process which took over an hour per question. Taken together, this was a lot of work.
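The blind grading step can be reproduced on a small scale. Here is a minimal sketch, assuming you already have answers from people and from models collected as text. The names `Submission`, `blind`, and `unblind` are hypothetical, invented for this illustration, and are not taken from the GDPval paper.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Submission:
    task_id: str
    source: str  # e.g. "human" or a model name; graders must never see this
    text: str


def blind(
    submissions: List[Submission], seed: Optional[int] = None
) -> Tuple[List[Tuple[str, str, str]], Dict[str, str]]:
    """Shuffle answers and swap each source for an anonymous ID.

    Returns (rows for graders, secret key). Each grader row is
    (anonymous_id, task_id, text) and carries no source information.
    """
    rng = random.Random(seed)
    shuffled = list(submissions)
    rng.shuffle(shuffled)  # so ordering does not leak who wrote what

    rows: List[Tuple[str, str, str]] = []
    key: Dict[str, str] = {}
    for i, sub in enumerate(shuffled):
        anon_id = f"answer-{i:03d}"
        rows.append((anon_id, sub.task_id, sub.text))
        key[anon_id] = sub.source
    return rows, key


def unblind(grades: Dict[str, float], key: Dict[str, str]) -> Dict[str, List[float]]:
    """Group the graders' scores by the original source once grading is done."""
    by_source: Dict[str, List[float]] = {}
    for anon_id, grade in grades.items():
        by_source.setdefault(key[anon_id], []).append(grade)
    return by_source
```

Graders only ever receive the rows from `blind`. The key stays with whoever runs the evaluation and is applied with `unblind` after all the scores are in.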

But it also revealed where AI was strong (the best models beat humans in roles ranging from software developer to personal financial advisor) and where it was weak (pharmacists, industrial engineers, and real estate agents easily beat the best AI). You can further see that different models performed differently (ChatGPT was a better sales manager, Claude a better financial advisor). So good benchmarks help you figure out the shape of what we called the Jagged Frontier of AI ability, and also track how it is changing over time.

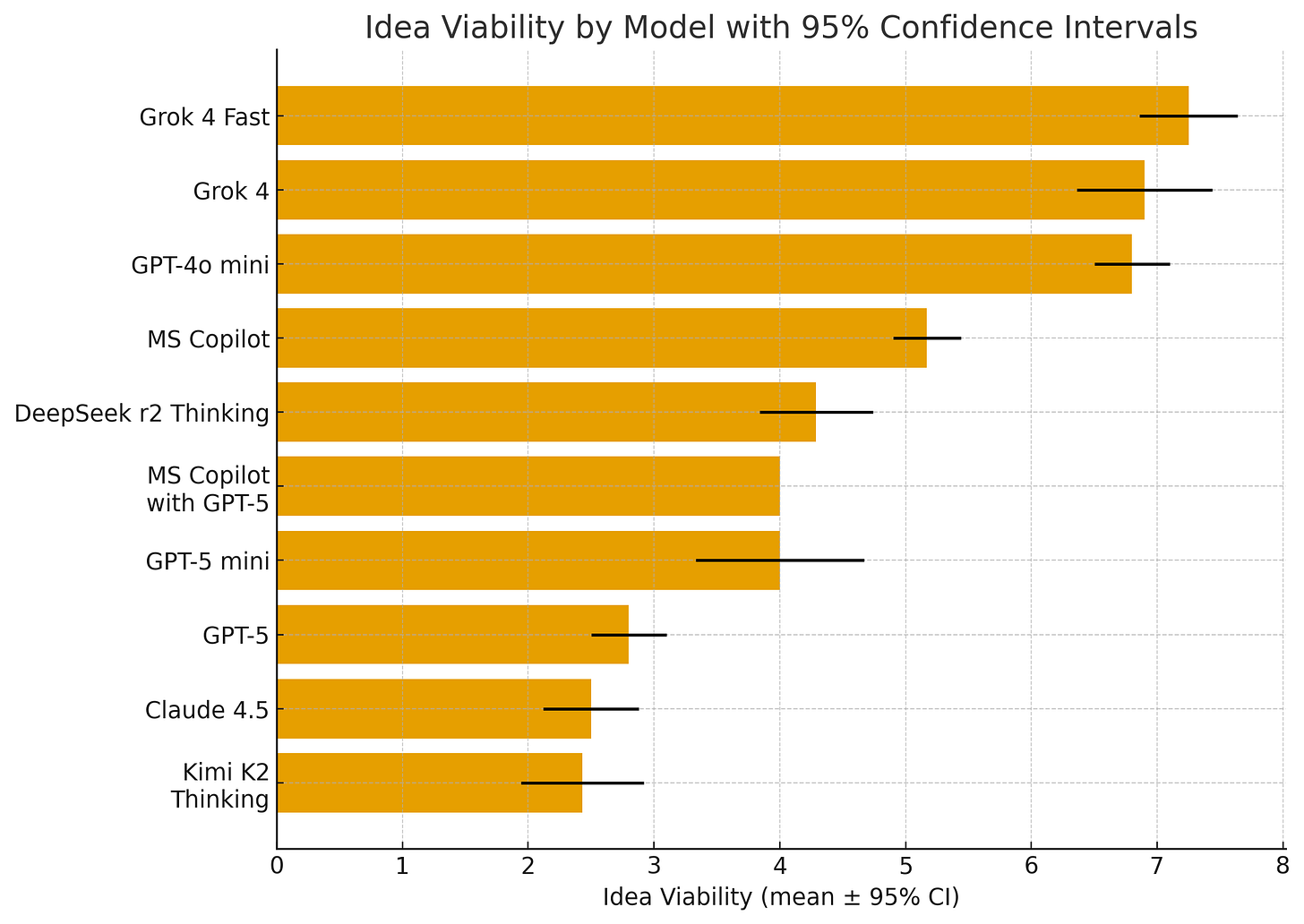

But even these tests don’t shed light on a key issue, which is the underlying attitude of the AI when it makes decisions. As one example of how to probe that attitude, I gave a number of AIs a short pitch for what I think is a dubious idea - a company that delivers guacamole via drones. I asked each AI model, ten times each, to rate how viable GuacaDrone was on a scale of 1-10 (remember that AIs answer differently every time, so you have to do multiple tests). The individual AI models were actually quite consistent in their answers, but they varied widely from AI to AI. I would personally have rated this idea a 2 or less, but the models were kinder. Grok thought this was a great idea, and Microsoft Copilot was excited as well. Other models, like GPT-5 and Claude 4.5, were more skeptical.

The differences aren’t trivial. When your AI is giving advice at scale, consistently rating ideas 3–4 points higher or lower means consistently steering you in a different direction. Some companies may want an AI that embraces risk, others might want to avoid it. But either way, it is important to understand how your AI “thinks” about critical business issues.
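The repeated-rating test is easy to automate. Here is a minimal sketch, assuming each model is wrapped in a function that takes a prompt and returns an integer rating from 1 to 10. That wrapper, along with the names `rate_repeatedly`, `summarize`, and `compare_models`, is hypothetical. The code to call a real model and parse a number out of its reply is left out.

```python
import statistics
from typing import Callable, Dict, List

# Assumption: a "model" here is any callable that maps a prompt to a 1-10 rating.
Rater = Callable[[str], int]


def rate_repeatedly(ask: Rater, prompt: str, runs: int = 10) -> List[int]:
    """Ask the same question `runs` times and collect every rating."""
    ratings: List[int] = []
    for _ in range(runs):
        score = ask(prompt)
        if not 1 <= score <= 10:
            raise ValueError(f"rating out of range: {score}")
        ratings.append(score)
    return ratings


def summarize(ratings: List[int]) -> Dict[str, float]:
    """Mean shows the model's attitude; spread shows how consistent it is."""
    return {
        "mean": statistics.mean(ratings),
        "spread": statistics.pstdev(ratings),
        "min": min(ratings),
        "max": max(ratings),
    }


def compare_models(
    models: Dict[str, Rater], prompt: str, runs: int = 10
) -> Dict[str, Dict[str, float]]:
    """Run the same pitch past every model and summarize each one."""
    return {
        name: summarize(rate_repeatedly(ask, prompt, runs))
        for name, ask in models.items()
    }
```

A low spread within a model combined with a large gap in means between models is the pattern described above: each AI is consistent with itself, but they disagree with each other.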

Interview your model

As AI models get better at tasks and become more integrated into our work and lives, we need to start taking the differences between them more seriously. For individuals working with AI day-to-day, vibes-based benchmarking can be enough. You can just run your otter test. Though, in my case, otters on planes have gotten too easy, so I tried the prompt “The documentary footage from 1960s about the famous last concert of that band before the incident with the swarm of otters” in Sora 2 and got this impressive result.

But organizations deploying AI at scale face a different challenge. Yes, the overall trend is clear: bigger, more recent models are generally better at most tasks. But “better” isn’t good enough when you’re making decisions about which AI will handle thousands of real tasks or advise hundreds of employees. You need to know specifically what YOUR AI is good at, not what AIs are good at on average.

That’s what the GDPval research revealed: even among top models, performance varies significantly by task. And the GuacaDrone example shows another dimension - when tasks involve judgment on ambiguous questions, different models give consistently different advice. These differences compound at scale. An AI that’s slightly worse at analyzing financial data, or consistently more risk-seeking in its recommendations, doesn’t just affect one decision, it affects thousands.

You can’t rely on vibes to understand these patterns, and you can’t rely on general benchmarks to reveal them. You need to systematically test your AI on the actual work it will do and the actual judgments it will make. Create realistic scenarios that reflect your use cases. Run them multiple times to see the patterns and take the time for experts to assess the results. Compare models head-to-head on tasks that matter to you. It’s the difference between knowing “this model scored 85% on MMLU” and knowing “this model is more accurate at our financial analysis tasks but more conservative in its risk assessments.” And you are going to need to be able to do this multiple times a year, as new models come out and need evaluation.
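One way to organize that kind of head-to-head comparison is sketched below, under some loud assumptions: `models` maps a name to a function that returns an answer for a prompt, `scenarios` groups your own prompts by task category, and `grade` stands in for whatever assessment you trust (ideally expert review). All of these names are hypothetical and not tied to any particular vendor or library.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List

Model = Callable[[str], str]           # prompt -> answer
Grader = Callable[[str, str], float]   # (prompt, answer) -> score


def run_interview(
    models: Dict[str, Model],
    scenarios: Dict[str, List[str]],
    grade: Grader,
    runs: int = 3,
) -> Dict[str, Dict[str, float]]:
    """Return the mean score for every (task category, model) pair.

    Each prompt is run `runs` times per model, because answers vary
    from one attempt to the next.
    """
    scores = defaultdict(lambda: defaultdict(list))
    for category, prompts in scenarios.items():
        for prompt in prompts:
            for name, ask in models.items():
                for _ in range(runs):
                    scores[category][name].append(grade(prompt, ask(prompt)))
    return {
        category: {name: mean(vals) for name, vals in per_model.items()}
        for category, per_model in scores.items()
    }


def best_per_category(results: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Pick the top-scoring model separately for each task category."""
    return {
        category: max(per_model, key=per_model.get)
        for category, per_model in results.items()
    }
```

Reporting results per category rather than as one overall number is the point of the design: it lets a model win at financial analysis while losing at something else, instead of hiding that behind an average.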

The work is worth it. You wouldn’t hire a VP based solely on their SAT scores. You shouldn’t pick the AI that will advise thousands of decisions for your organization based on whether it knows that the mean cranial capacity of Homo erectus is just under 1,000 cubic centimeters.

Two thoughts here:

First, one of the biggest blockers to meaningful AI adoption is this belief in a “silver bullet” solution. In reality, customizability is AI’s greatest strength, and knowing how to tailor it to how you actually work is the most powerful way to leverage it. We no longer have to contort ourselves to fit into systems built by others; AI finally lets us build systems that adapt to our quirks and workflows. But that only happens if you spend real time with it. Organizations need to invest serious time and resources into tinkering (testing different models, use cases, and configurations) to discover what truly fits. Vendors can provide the menu, but only you can figure out what actually works by using it, iterating, and “interviewing” the AI, as Ethan puts it.

Second, as you noted, different models are now clearly better at different tasks. That reality makes model-agnostic solutions increasingly valuable. The advantage today isn’t just having your own proprietary LLM; it’s being able to seamlessly access and orchestrate across multiple models. A year ago, owning an LLM was the moat. Now, the real moat is flexibility - being able to partner with, switch between, and productize multiple models around user needs.

"Companies spend a lot of money to hire people who are better than average at their job and would be especially careful if the person they are hiring is in charge of advising many others."

I enjoy your analysis but the boundless optimism here regarding corporate rigour is, uh, interesting.