While reading a new paper on doctors using GPT-4 to diagnose disease, I saw a familiar problem with AI. The paper confirmed what many other similar studies have found: frontier Large Language Models are surprisingly good at diagnosis, even though they are not specifically built for medicine. You'd expect this AI capability to help doctors be more accurate. Yet doctors using AI performed no better than those working without it—and both groups did worse than ChatGPT alone. Why didn't the doctors benefit from the AI's help?

One reason is algorithmic aversion. We don’t like taking instructions from machines when they conflict with our judgement, which caused doctors to overrule the AI, even when it was accurate. But a second reason for this problem is very specific to working with Large Language Models. To people who aren’t used to using them, AI systems are surprisingly hard to get a handle on, resulting in a failure to benefit from their advice.

As a New York Times article on the paper reported: “they were treating [the AI] like a search engine for directed questions: ‘Is cirrhosis a risk factor for cancer? What are possible diagnoses for eye pain?’ It was only a fraction of the doctors who realized they could literally copy-paste in the entire case history into the chatbot and just ask it to give a comprehensive answer to the entire question.” This is not just an issue for doctors. In every classroom I teach in or organization I speak with, the vast majority of people have tried AI, but many are struggling with how to start using it. And, as a result of that struggle, they have not put in the 10 or so hours with AI that are required to really understand what it does.

There are many stumbling blocks: people treat AI like Google, asking it factual questions. But AI is not Google, and it does not provide consistent, or even reliable, answers. Or people ask AI to write something for them and complain when it produces generic text. Or they can’t even figure out what to write at all, staring at a blinking cursor. In short, they can’t prompt the AI.

One common answer to these sorts of problems is that everyone should learn “prompt engineering,” the complex science (well, more of an art) of getting an AI to work in the ways you expect. There are a few problems with starting this way for most people, however [1]. First, the idea of a complex structure for prompting can be daunting and constraining. This is especially true because you do not need to be an expert in AI, or even know anything about programming or computers, to be an expert in using AI. Suggesting that complex upskilling is the place to start will discourage people from using AI. Second, prompt engineering implies that there is actually a clear science of getting AIs to act in the way that you want, when, in fact, researchers are still arguing over the most basic foundations of good prompting. This is because AIs are inconsistent and weird, and often give different results across different models. For example, they are sensitive to small changes in spacing or formatting; they get more accurate when you tell them to “read the question again”; they seem to respond better to politeness (but don’t overdo it); and they may get lazier in December, perhaps because they have picked up on the concept of winter break. Add to all of this the fact that getting good results out of AI without a lot of formal training is likely growing easier, as larger models are less sensitive to changes in prompting technique than older AIs, and new techniques allow the AI to improve your prompt for you.

So, you can learn details of prompt engineering and the basics of how LLMs work, of course, but for most people, that is not a required starting place. You just need to use AI enough to get a feel for what you can use it for in your area of expertise. The most important thing to do is to get 10 or so hours of use with an advanced AI system. And to do that you just need to be a good-enough prompter to overcome the barriers that hold many AI users back. There are really two pathways to get started: good-enough prompting for tasks and good-enough prompting for thought.

Good Enough Task Prompting

One of the most useful ways to use AI is to help get things done. In my book, I talk about bringing the AI to the table, trying it out for all of your work tasks to see how well it does. I still think this is the right way to start. Often, you are told to do this by treating AI like an intern. In retrospect, however, I think that this particular analogy ends up making people use AI in very constrained ways. To put it bluntly, any recent frontier model (by which I mean Claude 3.5, ChatGPT-4o, Grok 2, Llama 3.1, or Gemini Pro 1.5) is likely much better than any intern you would hire, but also weirder.

Instead, let me propose a new analogy: treat AI like an infinitely patient new coworker who forgets everything you tell them each new conversation, one that comes highly recommended but whose actual abilities are not that clear. And I mean literally treat AI just like an infinitely patient new coworker who forgets everything you tell them each new conversation. Two parts of this are analogous to working with humans (being new on the job and being a coworker) and two of them are very alien (forgetting everything and being infinitely patient). We should start with where AIs are closest to humans, because that is the key to good-enough prompting.

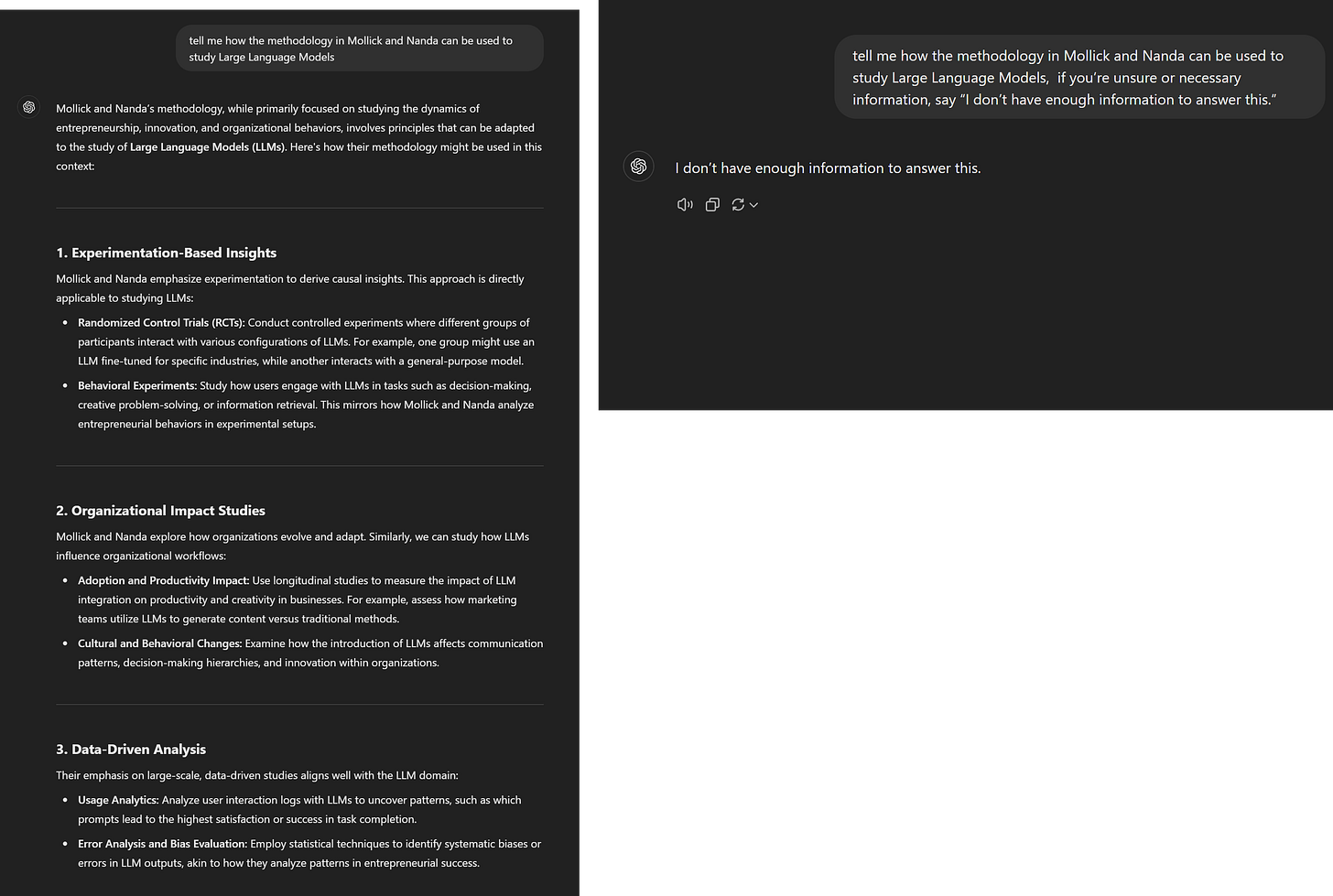

As it is a coworker, you want to work with it, not just give it orders, and you also want to find out what it is good or bad at. Start by using it in areas of your expertise, where you are able to quickly figure out the shape of the jagged frontier of its ability. Because you are an expert, you will be able to quickly assess where the AI is wrong or right. You do need to be prepared for it to give you plausible but wrong answers, but don’t let the risk of these hallucinations scare you off initially. Though hallucinations may be inevitable, you will learn where they are a big deal, and where they are not, over time. You can reduce the rate of hallucinations somewhat by giving the AI permission to be wrong. For example, writing “if you’re unsure or lack necessary information, say ‘I don’t have enough information to answer this’” can make a big difference.

Since the AI is new on the job, you need to be very clear on exactly what you want. You don’t want a report on the pros and cons of remote learning, you want a report on the pros and cons of remote learning appropriate for a regional university in the Midwestern US that might convince a business school Dean to fund a new remote learning program. Other ways to give the AI clarity are giving it examples of good or bad responses (called few-shot prompting) and giving it step-by-step directions for what you want to accomplish. You can also give it feedback just as you would another human being, asking for improvement, or just request that it ask you questions about anything that is unclear. Working with AI is a dialogue, not an order.
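If it helps to see those pieces side by side, here is a minimal sketch in Python (3.9 or later) that assembles them into a single prompt. Everything named here is an assumption made for illustration: `TaskPrompt`, its fields, and the exact wording of each section are hypothetical, not a standard format, and the three steps in the usage example are invented. The result is just a string you would paste into whatever chatbot you use.

```python
from dataclasses import dataclass, field


@dataclass
class TaskPrompt:
    """Hypothetical container for the pieces of a good-enough task prompt."""

    task: str                                  # what you want done
    audience: str = ""                         # who the output is for
    goal: str = ""                             # what the output should achieve
    steps: list[str] = field(default_factory=list)          # step-by-step directions
    good_examples: list[str] = field(default_factory=list)  # few-shot examples
    allow_unsure: bool = True                  # give the AI permission to be wrong
    invite_questions: bool = True              # make it a dialogue

    def render(self) -> str:
        """Join the non-empty pieces into one prompt, separated by blank lines."""
        parts = [self.task.strip()]
        if self.audience:
            parts.append(f"Audience: {self.audience}")
        if self.goal:
            parts.append(f"Goal: {self.goal}")
        if self.steps:
            numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(self.steps, 1))
            parts.append("Follow these steps:\n" + numbered)
        for i, example in enumerate(self.good_examples, 1):
            parts.append(f"Example of a good response {i}:\n{example}")
        if self.allow_unsure:
            parts.append(
                "If you are unsure or lack necessary information, "
                'say "I don\'t have enough information to answer this."'
            )
        if self.invite_questions:
            parts.append("Before you start, ask me questions about anything that is unclear.")
        return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = TaskPrompt(
        task="Write a report on the pros and cons of remote learning.",
        audience="a business school Dean at a regional university in the Midwestern US",
        goal="convince the Dean to fund a new remote learning program",
        steps=[  # illustrative steps, not from any official guide
            "List the main pros and cons",
            "Weigh them for this kind of university",
            "End with a funding recommendation",
        ],
    )
    print(prompt.render())
```

You do not need code to do any of this, of course. The point of the sketch is only that a good-enough prompt is a handful of plain-language pieces, and you can leave out any piece that does not help.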

Now let’s talk about the less human aspects of AI, like its forgetfulness - the fact that each new conversation wipes the AI’s understanding of your particular situation. As a result, you also need to provide context. Context can be a role or persona (“act like a marketer”), but be careful with these, because while roles help the AI understand your context, they aren’t magical (they do not actually turn the AI into a marketer) and sometimes giving the AI a role can actually lower accuracy. Try roles out, but you don’t need to use them if they are not useful. You can also just give it whatever information you have lying around. Entire documents, instruction manuals, or even previous conversations are often helpful. Just remember to pay attention to the AI’s memory, its context window, which limits how much text it can take into account at once.

Finally, we come to infinite patience, which is one of the least human traits of AI. In fact, one of the hardest things to realize about AI is that it is not going to get annoyed at you. You can keep asking for things and making changes and it will never stop responding. This introduces something new in intellectual life: abundance. You don’t need to ask for one email; ask for three in different tones to inspire you. You don’t need to ask for one way to complete a sentence; ask for 15 options and see if that unlocks your writing. Don’t ask for 5 ideas, ask for 30. In fact, our research found that GPT-4 can generate thousands of ideas before a large percentage of them start to overlap. Your job becomes one of pushing for variation (“give me ideas that are 80% weirder”), recombination (“combine ideas 12 and 16”) and expansion (“more ideas like number 12”), before selecting one you like.
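As a rough sketch of what that push for abundance looks like in practice, the three moves above can be written as small follow-up templates. The function names and default numbers below are hypothetical, chosen only to mirror the examples in the text; each function simply returns a sentence you could type into an ongoing conversation.

```python
def ask_for_many(thing: str, count: int = 30) -> str:
    """Opening request: ask for far more options than you need."""
    return f"Give me {count} different {thing}."


def variation(percent_weirder: int = 80) -> str:
    """Push the AI away from its first, most generic answers."""
    return f"Give me ideas that are {percent_weirder}% weirder."


def recombination(*idea_numbers: int) -> str:
    """Ask the AI to merge two or more of the numbered ideas it already gave."""
    if len(idea_numbers) < 2:
        raise ValueError("recombination needs at least two idea numbers")
    nums = [str(n) for n in idea_numbers]
    if len(nums) == 2:
        joined = " and ".join(nums)
    else:
        joined = ", ".join(nums[:-1]) + ", and " + nums[-1]
    return f"Combine ideas {joined}."


def expansion(idea_number: int) -> str:
    """Ask for more options in the neighborhood of one you liked."""
    return f"Give me more ideas like number {idea_number}."


if __name__ == "__main__":
    # One possible sequence of follow-ups in a single conversation.
    for message in (
        ask_for_many("subject lines for this email"),
        variation(),
        recombination(12, 16),
        expansion(12),
    ):
        print(message)
```

The order is not fixed. Because the AI never tires, you can loop through these moves as many times as you like before picking a winner.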

Good Enough Thinking Prompting

In addition to getting a work product from the AI, you may just want to get advice, or a thinking partner or just someone to talk to. The reasons people want this may vary. Even if the AI advice isn’t that helpful, you can use it as a rubber duck - the popular idea in computer programming that, if you explain an issue to an inanimate rubber duck on your desk, you will work through the problem on your own by talking it out. As one example, I spoke to a quantum physicist who said AI helped him with physics. When I asked him whether or not the AI was a good physicist, he said it wasn’t, but it was curious and pushed him to think through his own ideas. The rubber duck at work. But the AI can actually provide useful guidance as well. For example, AI can provide good strategic or entrepreneurial advice if you are capable of executing on it. Beyond that, in controlled experiments, talking to an AI seems to reduce loneliness, but we do not know the full implications or risks of using AI for therapy or companionship, so I would urge caution.

For getting a thinking partner, the key to using the AI is to have a natural dialogue. Just talk to it. Most people find this easiest to do via voice on their phone. The current best voice model is GPT-4o, accessible via the ChatGPT or Copilot apps. The voice model for Google Gemini is a bit less sophisticated but can still work. Other models have voice modes coming soon.

Don’t make this hard

The single most useful thing you can do to understand AI is to use AI. There are lots of reasons people may decide to give up on using an AI quickly, from early hallucinations (the AI isn’t good enough) to existential discomfort (the AI is too good), but many of those initial reactions are tempered over time. Your goal is simple: spend 10 hours using AI on tasks that actually matter to you. After that, you'll have a natural sense of how AI fits into your work and life. You'll develop an intuition for effective prompting, and you'll better understand AI's potential. Don't aim for perfection - just start somewhere and learn as you go.

[1] An important qualifier is “most people” - if you are building a prompt you expect other people to use, or which is being put into use at scale, or where accuracy is key, then prompt engineering is actually essential. I have a number of posts explaining some of these approaches, and Anthropic has a good prompt engineering guide as well. If you are a person using AI as a co-intelligence for a one-off conversation or task, it is much less important.
