Becoming strange in the Long Singularity

A sudden increase in AI capability suggests a weirder world in our near future

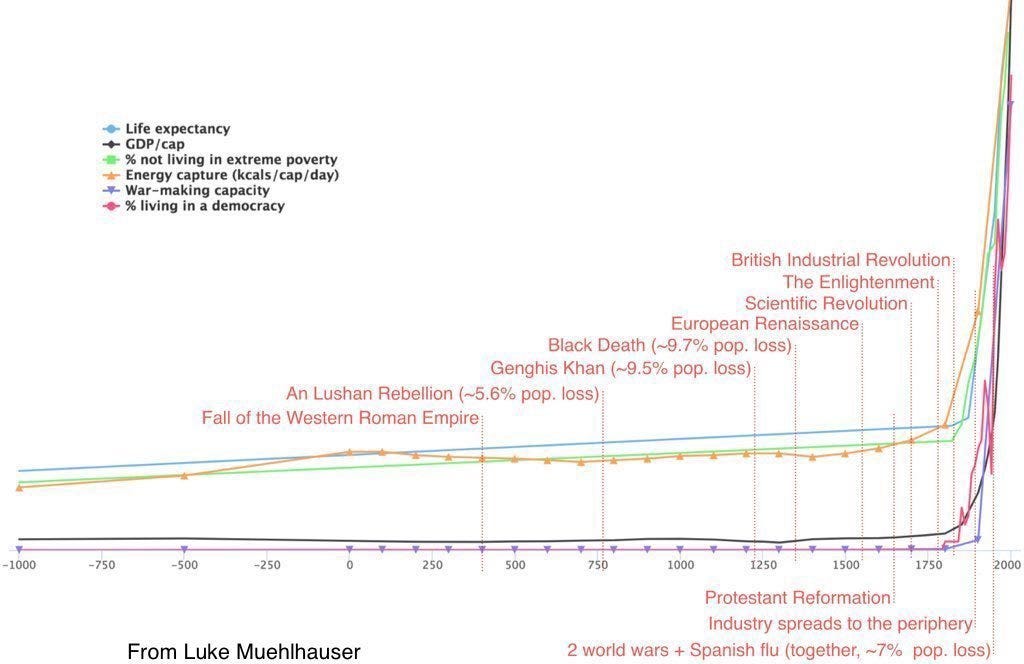

For the better part of 20,000 centuries of human history, nothing much happened. We spent about 19,960 of those centuries making slightly different variations of one tool made out of a slightly chipped rock before figuring out the whole metal thing. But, even then, nothing really happened until about two centuries ago. Then everything changed. (I mean, just look at the graph!)

There is a much debated concept that you may have heard of - the Singularity - referring to a moment where our technology (often specifically AI technology) accelerates suddenly and irrevocably. A moment where every graph of technological progress becomes vertical. At that point, proponents of this theory argue, the world changes in ways that are impossible to predict. Maybe the super-intelligent machines make even smarter machines that we can’t understand? Maybe humans are rendered obsolete? By definition, we literally cannot know what happens after the Singularity. Some very smart people worry about this a lot.

I don’t worry about the AI Apocalypse (yet), but I find the concept of a Singularity useful and interesting. I just see it a bit differently. As an academic who studies technology, I look at the graph above, and the graph below of Moore’s Law [1], and it seems impossible to ignore that we have been in some sort of technological singularity for at least a century. Call it the Long Singularity.

Consider: the first steam railway in America started in 1830. By 1890, 1 out of every 12 men was employed in rail transportation and 56% of all horsepower across all machines and factories was concentrated in the railroads. Only 66 years separated the first flight of an airplane and the moon landing. There were no smartphones before 2008; nine years later, two-thirds of Americans had one. Progress has been profound.

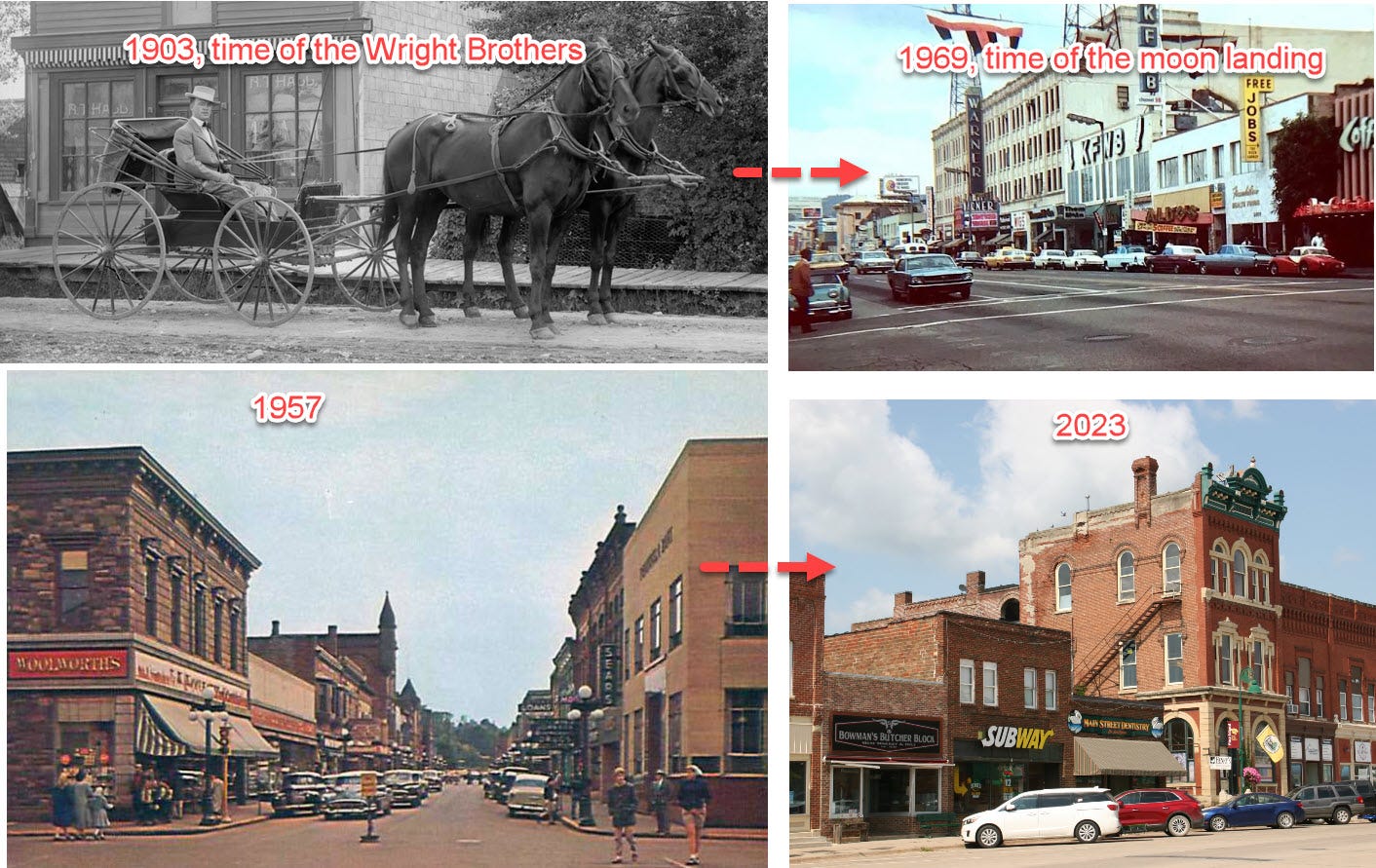

And yet, there is also evidence that things have slowed down in the last few decades. The twin engines of growth, productivity growth and scientific innovation, seem to be progressing more slowly. Someone born at the time of the Wright Brothers, when horse-drawn transport ruled, would be 66 years old when Neil Armstrong stepped on the moon in 1969. That is the equivalent of someone alive today being born in 1957. While there have been many technological advances since 1957, it isn’t as clear that they have affected our day-to-day life as profoundly as the ones in the previous 66-year span. Compared to the entire massive industries that appeared and dissolved in the prior two-thirds of a century, only one of the 270 jobs in the 1950 census has since been eliminated by technological advancement: elevator operator.

As a result, we may have gotten comfortable with the pace of the Long Singularity. But this is, I think, likely to change as the upward curve of acceleration steepens, as exponential curves do.

The most obvious place this is happening is in AI, where new innovations are appearing suddenly, and progress, by some estimates, is an order of magnitude a year, rather than the almost-lazy-by-comparison doubling-every-two-years pace of Moore’s Law. There are other areas where sudden breakthroughs may be possible - fusion and life-extension come to mind - but those seem far less certain. AI is already here.
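To get a feel for how different those two paces are, here is a rough back-of-the-envelope sketch. It assumes the "order of magnitude a year" estimate means a clean 10x every year and that Moore’s Law means a clean 2x every two years. The function name and the six-year horizon are just illustrative choices of mine, not measurements of anything.

```python
def growth_factor(multiplier: float, period_years: float, horizon_years: float) -> float:
    """Total growth after `horizon_years` if something multiplies
    by `multiplier` once every `period_years` (simple compounding)."""
    return multiplier ** (horizon_years / period_years)


# Illustrative horizon only; any number of years works.
HORIZON_YEARS = 6

# Moore's Law pace: doubling every two years.
moore = growth_factor(multiplier=2, period_years=2, horizon_years=HORIZON_YEARS)

# Assumed AI pace: one order of magnitude (10x) per year.
ai = growth_factor(multiplier=10, period_years=1, horizon_years=HORIZON_YEARS)

# Moore's Law expressed as a per-year multiplier, for a like-for-like comparison.
moore_per_year = growth_factor(multiplier=2, period_years=2, horizon_years=1)

print(f"Moore's Law pace over {HORIZON_YEARS} years: {moore:,.0f}x")
print(f"10x-per-year pace over {HORIZON_YEARS} years: {ai:,.0f}x")
print(f"Moore's Law per year: about {moore_per_year:.2f}x, versus 10x")
```

Under those assumptions, six years of Moore’s Law gives an 8x improvement, while six years at 10x a year gives a millionfold one. Real progress is lumpier than either curve, so treat this as intuition rather than forecast.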

If you have read my Substack in the 6 weeks since the launch of ChatGPT, you know I have become somewhat obsessed over what AI can do. I have written pieces on how it could do parts of my job, how it can transform education, launch startups, and help write. And this is a free chatbot that is the first of many AI products to be released in the months ahead. We won’t have figured out the full capabilities of ChatGPT before a better model comes to market.

Yet when I show the range of capabilities of these current AI systems to students, many instantly see the excitement, and the danger. Some of them ask me what it means for their preferred careers (“Should I become a radiologist if AI can do a lot of the work?” “Will marketing writing still be a good job five years from now?”) and I honestly don’t know the answers. Because that is the thing about a singularity: it makes predictions very hard. Do I think AI will replace radiologists in ten years? No. Am I particularly confident in that prediction? Also, no. This is a level of uncertainty about the future of work that hasn’t existed in a century, when industrialization made and destroyed industries in short spans of time.

And it isn’t just the obvious trends and uses that we should be thinking about. This stage of the Long Singularity has the potential to get very strange. We have an AI that has blown through both the Turing Test and the Lovelace Test (a test for creativity) in the span of a month, and is now doing well on high-level exams. Schools are in an uproar over the future of writing based on ChatGPT. And, as of now, nobody fully understands its capabilities, or what systems it might change or disrupt in just the next couple of years. And this is just one use of creative AI; others are coming.

Take, for example, Typical.me. It feeds an AI on Twitter feeds, and lets you interact with the resulting models. Basically, you can talk to anyone on Twitter. It is impressive but flawed in the way current large language models are flawed: the answers are stylistically correct, but full of realistic hallucinations. But it is surprisingly close. You can see an interaction between real-me and AI-me below. I had to actually Google the studies that AI-me cited to make sure they were fake because it seemed plausible that I had written about a real study like that. I failed my own Turing Test: I was fooled by an AI of me into thinking it was accurately quoting me, when in fact it was making it all up. (Yes, this is confusing. I told you things are getting strange.)

I already know people who are training GPT on their own writings, and interrogating “themselves” to discover new insights. I have spoken to folks who are experimenting with how their AI model, interacting with someone else’s, might be used to find points of agreement and conflict. Maybe these uses of AI are toys that will fade away, or maybe they will have a profound effect. I don’t know what this all means for the future, and neither does anyone else.

So what do we tell our students, our children, ourselves about our current progress in the Long Singularity? That flexibility is key. That everyone should be experimenting with these new tools to understand what they mean for us. That we should be prepared for a possible future that is very different than the past, in ways that are unknowable right now. People are flexible, and technological change usually gives more than it takes, but we need to be ready for a much stranger world.

[1] Moore’s Law has held since the 1960s, and it says that the number of transistors on a chip doubles every couple of years. It was also the subject of my first academic paper.
