In the last week, I managed to get an AI that is more powerful than the original ChatGPT to work on my home computer… and one slightly less powerful to run on my iPhone. The AIs ran entirely on my own equipment, no internet connection needed, and without anyone having access to my data. You can do it yourself (I used this tool to run the AI on my computer, and these instructions explain how to do it on a phone).

In both cases, these were open source AIs developed by Mistral, a French AI company, and one of many players rushing to develop these free models - players including tech giants like Microsoft and Meta. Because they are open source, no one owns them, and while there are differences in the finer points of how these models are made and how they can be used, once they are released in the wild anyone can modify them, or use them, in any way that they want.

The implications of these developments are pretty big:

The AI genie is out of the bottle. To the extent that LLMs were exclusively in the hands of a few large tech companies, that is no longer true. There is no longer a policy that can effectively ban AI or one that can broadly restrict how AIs can be used or what they can be used for. And, since anyone can modify these systems, to a large extent AI development is also now much more democratized, for better or worse. For example, I would expect to see a lot more targeted spam messages coming your way soon, given the evidence that GPT-3.5 level models work well for sending deeply personalized fake messages that people want to click on.

AI will be everywhere. I can already run the fairly complex Mistral model on my recent-model iPhone, but, just a week ago, Microsoft released a model that is just as capable, but only a third as taxing on my poor phone. Between increasing hardware speeds (thanks, Moore’s Law) and increasingly optimized models, it is going to be trivial to run decent LLMs on almost everything. Someone already has an AI running on their watch.

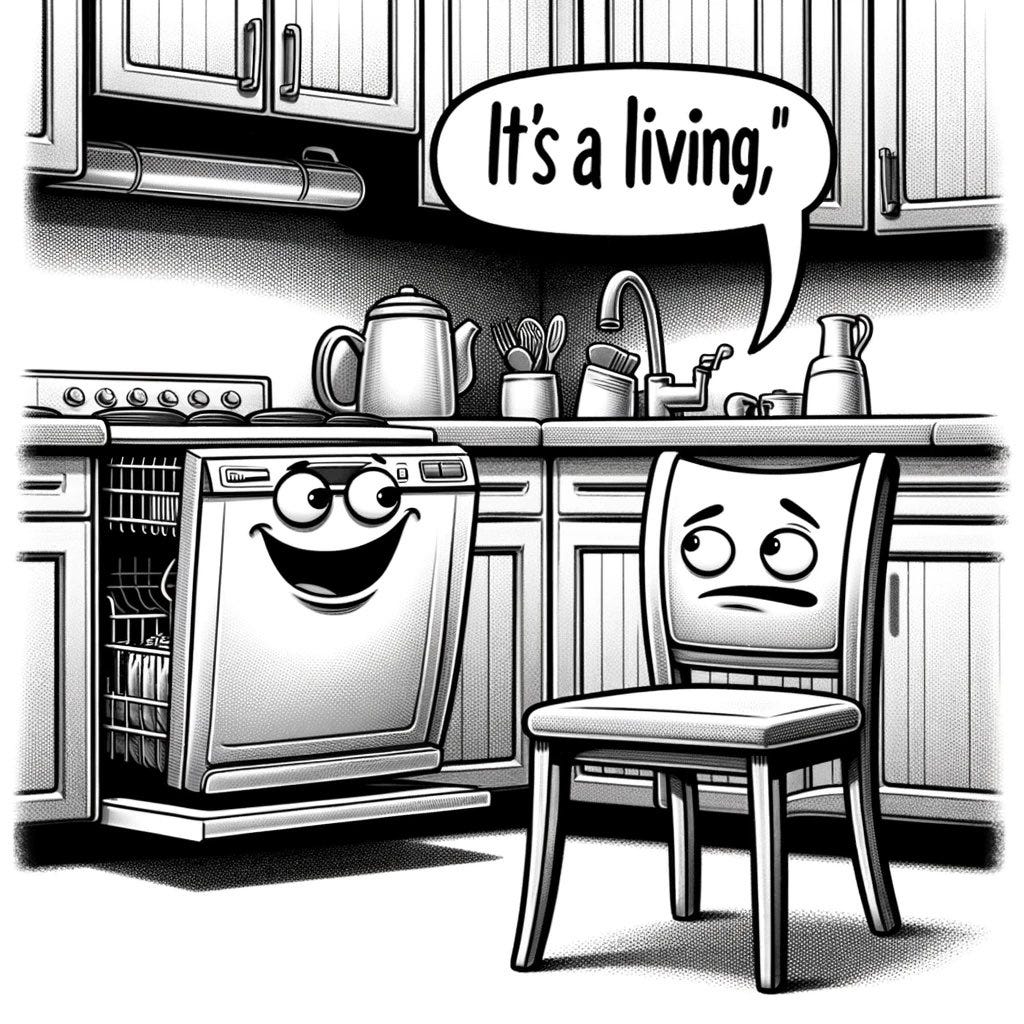

Since LLMs are capable of more complex “reasoning” than a simple Siri or Alexa and do not need to be connected to the internet, we will soon be in a world where a surprisingly large number of products have intelligence of a sort. And because it is incredibly cheap to modify an LLM (which already has a lot of underlying training) to understand a new situation, you don’t need a lot of software development effort to make anything “smart.” Your dishwasher can troubleshoot its own issues, your security system can figure out if something suspicious is happening in your house, and your exercise equipment can persuade you to work a little harder. They can even have personalities, if you want (and maybe even if you don’t want). In the longer term, get ready for an animist-meets-Flintstones future where everything talks to you.

For companies, ChatGPT-level AI is going to be incredibly cheap, increasingly fast, and increasingly ubiquitous. There is already a race to the bottom in terms of pricing for cloud-hosted LLMs, as AIs that would have seemed miraculous in the fall of 2022 become widely available at low costs and in ways that are easy to deploy.

But that doesn’t mean that Google, OpenAI, and other companies building advanced LLMs don’t have their own major long-term advantages.

The Frontier

As I wrote in the last post, there is a huge gap between the performance of the two frontier GPT-4 class models (OpenAI’s GPT-4 and Google’s Gemini Ultra) and all of the GPT-3.5 class models in the world. While both Mistral and Meta have hinted at beating GPT-4 with open source models in the next year, the makers of these frontier models are not standing still. They are busy building even larger AIs.

And there seems to be something really important about those larger models. Generally, the bigger the AI model (trained on more data, and therefore requiring more expensive computing power to train), the “smarter” it is. You will almost always want to use the smartest possible model if you are trying to use AI to boost your personal productivity or to learn something, because you want every possible advantage. On top of that, frontier models are just better generalists: the “smartest” frontier model can often beat the best smaller, more specialized models at their own game. Microsoft, for example, demonstrated that, with good prompts, GPT-4 (which is not trained specifically on medicine) beats the best specialized medical LLM, called Med-PaLM 2, in answering medical questions. So, if you want an AI to act at all like the AI of movies and books - working on complex topics in sophisticated ways - you generally want to use a frontier model, even though these models are often slower and more expensive to run.

One way to think about this is that you have a range of AI interns (and no, they are not actually people, but it can help to think about them that way) available to you. Some of them are brilliant generalists (the frontier models), but they are expensive and careful workers whose time is valuable. You also have a variety of less capable interns (the many open source and GPT-3.5 class models). They mess up more, but you can be confident that they are pretty good at narrow tasks they are trained for, and, because they are less capable, their time is very cheap. You will probably use a mix of interns, depending on the problem you are facing. Human organizations are built in exactly this way, with a few expensive general managers and specialists at the high levels of companies, delegating work to many less expensive and less experienced workers near the bottom of the hierarchy.

An organization of AIs

It is likely, then, that the future will not involve just using one AI, but rather a hierarchy of them. You may work with expensive, smart, generalist AIs who delegate work to cheaper specialists. In fact, when I do analyses with GPT-4, it already tries to do this sometimes, using GPT-3 to help it solve problems, as you can see in the example below. Of course, OpenAI does not actually let the AI invoke another AI (yet!) so the process always breaks, but in the world of autonomous AI agents that it is building, you would expect to see this behavior - AIs calling on other AIs for help.

The process can run the other way, too. An overwhelmed open-source customer-service AI can call on a smarter model to handle a complex situation, the equivalent of “I have to get my manager.” At Wharton Interactive, the organization using AI and games for teaching that I help run, we are already using hierarchies of specialized AIs to do tasks. For example, one instance of GPT-4 roleplays characters in a teaching simulation while a separate one grades the students on their interactions. A number of open source AI projects, like AutoGPT, have already started to implement these ideas more broadly.
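To make the “I have to get my manager” idea concrete, here is a minimal sketch in Python of how that kind of escalation could be wired up. Everything in it is hypothetical: the `Model` class, the `route` function, the confidence scores, and the cost units are stand-ins I made up for illustration, and the two “models” are stub functions rather than real AI calls. It is not how Wharton Interactive, OpenAI, or AutoGPT actually do it.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Model:
    """A hypothetical model tier: a name, a price, and a way to answer."""
    name: str
    cost_per_call: int  # made-up cost units, not real pricing
    answer: Callable[[str], Tuple[str, float]]  # returns (reply, confidence 0..1)


def cheap_answer(question: str) -> Tuple[str, float]:
    # Stub for a small local model: only confident on short, routine questions.
    is_routine = len(question.split()) <= 8
    return "Here is the standard answer.", 0.9 if is_routine else 0.3


def frontier_answer(question: str) -> Tuple[str, float]:
    # Stub for an expensive frontier model: assumed to be confident on everything.
    return "Here is a carefully reasoned answer.", 0.95


def route(question: str, tiers: List[Model], threshold: float = 0.7) -> Tuple[str, str, int]:
    """Try the cheapest tier first and escalate while confidence is too low.

    Returns (name of the model that answered, its reply, total cost spent).
    """
    if not tiers:
        raise ValueError("at least one model tier is required")
    spent = 0
    used, reply = tiers[0], ""
    for used in tiers:
        reply, confidence = used.answer(question)
        spent += used.cost_per_call
        if confidence >= threshold:
            break  # good enough, no need to call the manager
    return used.name, reply, spent


# Tiers are ordered from cheapest to most capable.
TIERS = [
    Model("local-small", 1, cheap_answer),
    Model("frontier", 30, frontier_answer),
]

if __name__ == "__main__":
    print(route("Where is my order?", TIERS))
    print(route("My order arrived damaged and the refund was charged twice to a closed card", TIERS))
```

The design choice worth noticing is that the cheap model is always asked first, so you only pay for the frontier model when the cheap one admits it is out of its depth. In a real system the hard part is getting a trustworthy confidence signal, which this sketch simply assumes.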

In the near future, AIs will work in their own hierarchies of intelligence, all communicating with each other, perhaps mostly autonomously. If you want to grab a bite to eat, it may be that your more “intelligent” premium AI assistant can guess what restaurant you might like based on reasoning about you and your recent behavior, and then delegate to a cheaper AI to actually make the reservation. Or you may ask your personal phone AI for help with the task first, and it can get advice from a frontier model on how to nail a tricky reservation, charging your account for the extra bit of intelligence-on-demand.

Some of the choice about whether we want this world where AI is ubiquitous has already been made by the release of open source LLMs. Using just the open source models available today, clever developers can continue to fine-tune them to produce very effective AIs that can help with many specialized tasks that used to require complex programs. That means LLMs that can observe, and act on, the world around them are going to become common and will be more integrated into our work and life.

We are going to live in an AI haunted world.

