This is a bit of a denser post focusing on the issues I am seeing with AI at the organizational level and how to solve them. If you want to experience the post a different way, I used the truly impressive NotebookLM by Google to turn it into a podcast. Literally the only thing I did was feed it the text below, everything else was generated by the AI (it is surprisingly accurate, though it appears to actually build on some of these ideas). If you haven’t played with this yet, I strongly urge you to listen to the clip.

Now, on to the post:

Over the past few months, we have gotten increasingly clear evidence of two key points about AI at work:

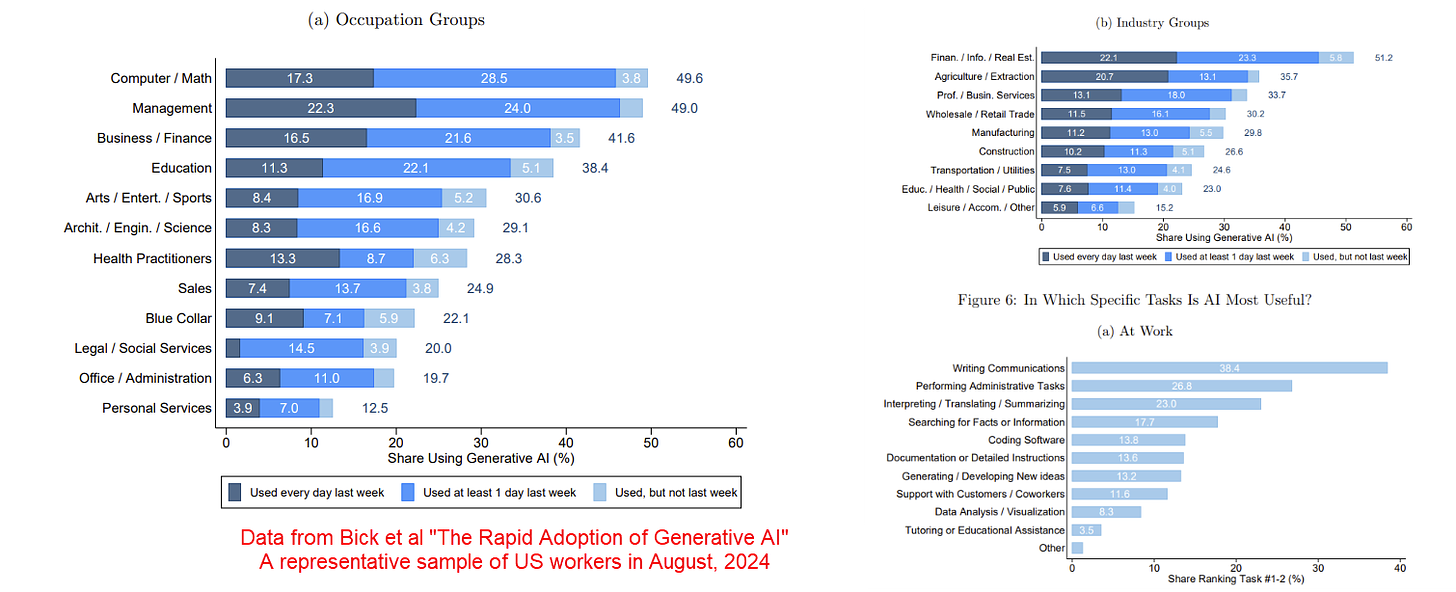

A large percentage of people are using AI at work. We know this is happening in the EU, where a representative study of knowledge workers in Denmark from January found that 65% of marketers, 64% of journalists, and 30% of lawyers, among others, had used AI at work. We also know it from a new study of American workers in August, where a third of workers had used Generative AI at work in the last week. (ChatGPT is by far the most used tool in that study, followed by Google’s Gemini.)

We know that individuals are seeing productivity gains at work for some important tasks. You have almost certainly seen me reference our work showing consultants completed 18 different tasks 25% more quickly using GPT-4. But another new study of actual deployments of the original GitHub Copilot for coding found a 26% improvement in productivity (and this used the now-obsolete GPT-3.5 and is far less advanced than current coding tools). This aligns with self-reported data. For example, the Denmark study found that users thought that AI halved their working time for 41% of the tasks they do at work.

Yet, when I talk to leaders and managers about AI use in their company, they often say they see little AI use and few productivity gains outside of narrow permitted use cases. So how do we reconcile these two experiences with the points above?

The answer is that AI use that boosts individual performance does not always translate to boosting organizational performance, for a variety of reasons. Getting organizational gains requires R&D into AI use, and you are largely going to have to do the R&D yourself. I want to repeat that: you are largely going to have to do the R&D yourself. For decades, companies have outsourced their organizational innovation to consultants or enterprise software vendors who develop generalized approaches based on what they see across many organizations. That won’t work here, at least for a while. Nobody has special information about how to best use AI at your company, or a playbook for how to integrate it into your organization. Even the major AI companies release models without knowing how they can be best used, discovering use cases as they are shared on Twitter (fine, X). They especially don’t know your industry, organization, or context. We are all figuring this out together. If you want to gain an advantage, you are going to have to figure it out faster.

So how do you do R&D on ways of using AI? You turn to the Crowd or the Lab. Probably both.

Tactics for the Crowd

One of my advisors during my PhD at MIT was Prof. Eric von Hippel, who famously developed the concept of user innovation - that many key breakthrough innovations come not from central R&D labs, but from people actually using products and tinkering with them to solve their own problems (you can read a lot about this research on his website). A key reason for this is that experimentation is hard and expensive for outsiders trying to develop new products, but very cheap for workers doing their own tasks. As users are very motivated to make their own jobs easier with technology, they find ways to do so. The user advantage is especially big in experimenting with Generative AI because the systems are unreliable and have a jagged frontier of capability. Experts can easily assess when an AI is useful for their work through trial and error, but an outsider often cannot.

From the surveys, and many conversations, I know that people are experimenting with AI and finding it very useful. But they aren’t sharing their results with their employers. Instead, almost every organization is completely infiltrated with Secret Cyborgs, people using AI at work but not telling you about it.

You want Secret Cyborgs? This is how you get Secret Cyborgs

Here are a bunch of common reasons people don’t share their AI experiments inside organizations:

They received a scary talk about how improper AI use might be punished. Maybe the talk was vague on what improper use was. Maybe they don’t even want to ask. They don’t want to be punished, so they hide their use.

They are being treated as heroes at work for their sensitive emails and rapid coding ability. They suspect if they tell anyone it is AI, people will respect them less, so they hide their use.

They know that companies see productivity gains as an opportunity for cost cutting. They suspect that they or their colleagues will be fired if the company realizes that AI does some of their job, so they hide their use.

They suspect that if they reveal their AI use, even if they aren’t punished, they won’t be rewarded. They aren’t going to give away what they know for free, so they hide their use.

They know that even if companies don’t cut costs and reward their use, any productivity gains will just become an expectation that more work will get done, so they hide their use.

They are incentivized to show people their approaches, but they have no way of sharing how they use AI, so they hide their use.

Getting help from your Cyborgs

So how can companies solve this problem? By taking these things seriously.

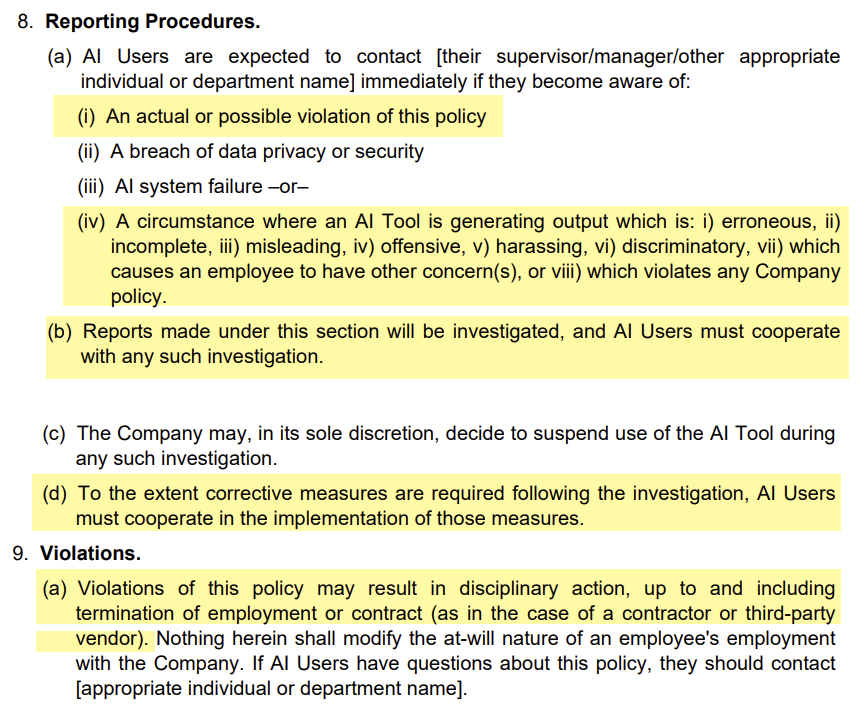

First, you need to reduce the fear. Instead of vague talks on AI ethics or terrifying blanket policies, provide clear areas where experimentation of any kind is permitted and be biased towards allowing people to use AI where it is ethically and legally possible (as a side note, many internal legal departments have an outdated view of the risks of AI). Rules and ethical standards are obviously important, but need to be clear and well-understood, not draconian. And fixing policies isn’t enough. Figure out how you will guarantee to your workers that revealing their productivity gains will not lead to layoffs, because it is often a bad idea to use technological gains to fire workers at a moment of massive change. For companies with good cultures, this will be easier, but for those where employees have little faith in management, you may need to resort to extreme measures to show that this time you aren’t going to use new technology as an excuse to lay off workers. Psychological safety is often the key to a willingness to share innovation.

Second, you need to align your reward systems. Figure out how to reward people for revealing AI use. If productivity gains happen, workers need to benefit as well. That might mean giving really big awards for really big gains. Think cash prizes that cover months of salary. Promotions. Corner offices. The ability to work from home forever. With the potential productivity gains possible due to LLMs, these are small prices to pay for truly breakthrough innovation. And large incentives also show that the organization is serious.

Third, model positive use. Executives should be obviously using AI and sharing their use cases with the company. Watch Mary Erdoes, CEO of JP Morgan’s Asset and Wealth Management Group, talk about how the firm is prioritizing AI use at the leadership level, and incorporating their AI experiences into their strategic thinking. And once they become users, managers can encourage their employees to turn to AI first to try to solve their problems. For example, Cynthia Gumbert, CMO of SmartBear, told me that when teams come to her for resources for a new project, if she thinks AI could help, she tells them “Prove to me you can’t do it in AI first, then maybe I will fund the work.”

Give others the opportunity to show their uses as well. Public-facing events like hackathons and prompt sharing sessions often work well, especially when they include non-technical experts (I found my MBAs were able to develop useful GPTs for their various jobs, regardless of technical status). You also need to think about how to build a community. AI talent can be anywhere in your organization. How are you finding the people who are enthusiastic and talented, and helping them share what they have learned?

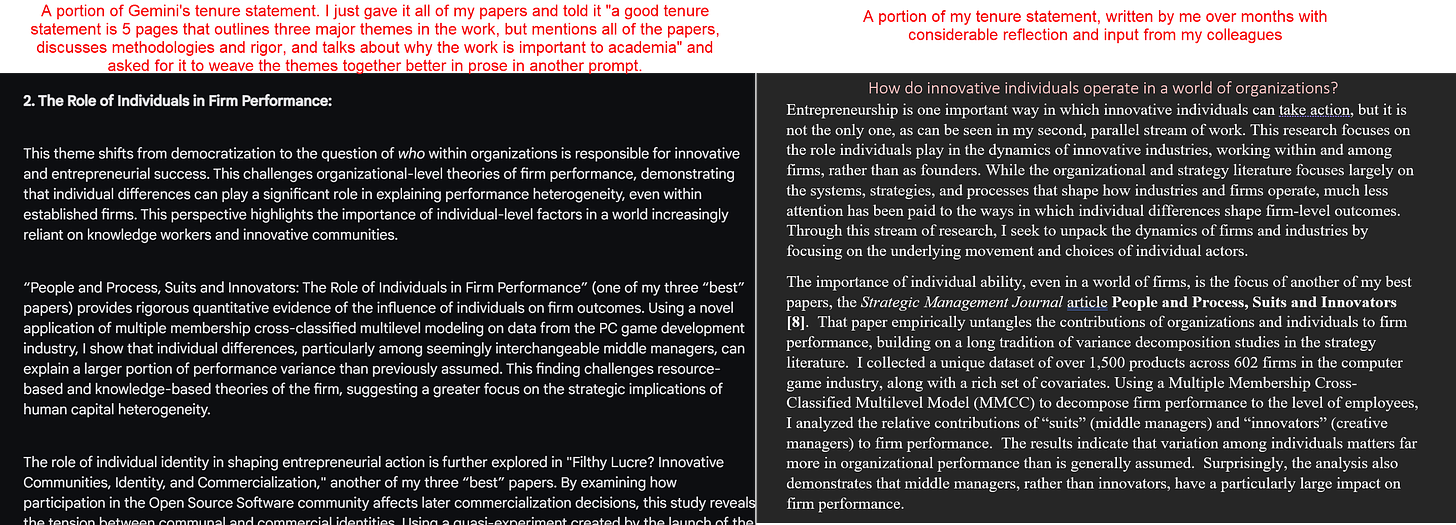

Of course, you also need to give your employees access to tools and training. For tools, that usually means giving them the ability to play directly with a frontier model (probably Claude 3.5, GPT-4o, or Gemini 1.5, through one of many providers) and systems like OpenAI’s GPTs, Claude’s Projects or Google’s Gems that allow them to develop and share more complete solutions. Training is a bit more of a challenge because there is still a lot of discussion over ways to use AI (I gave some advice based on the prompting research here), but even just an introductory session can give people permission to innovate.

The innovation talent for AI is inside your organization. You need to create the opportunity for it to flourish. The Crowd can help. But there is also a role for a more focused innovation effort: the Lab.

Tactics for the Lab

As important as decentralized innovation is, there is also a role for a more centralized effort to figure out how to use AI in your organization. The Lab needs to consist of subject matter experts and a mix of technologists and non-technologists. Fortunately, the Crowd provides your researchers. Those enthusiasts who figure out how to use AI and proudly share it with the company are some of the talents you will use to staff the Lab. Their job will be completely, or mostly, about AI. You need them to focus on building, not analysis or abstract strategy. Here is what they will build:

Build AI benchmarks for your organization. I have ranted about the state of benchmarks in AI before, but almost all the AI Labs test on coding and multiple-choice tests of knowledge. These don’t tell you which AI is the most stylish writer or can handle financial data or can best read through a legal document. You need to develop your own benchmarks: how good is each of the models at the tasks you actually do inside of your company? A set of clear business-critical tasks and criteria for evaluating them is key (Anthropic has a guide to benchmarking that can help as a starting place). Without these benchmarks, you are flying blind. You have no idea how good AI systems are, and, even more importantly, you do not know how good they are getting. If you had benchmarks, you would know whether the new o1 models represent an opportunity or threat, or if they are closing the gap with human performance. Most organizations have no idea.
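To make the idea of an internal benchmark a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Task` structure, the `run_benchmark` function, the two stand-in "models," and the pass/fail checks are all hypothetical, and a real benchmark would call actual AI systems and use criteria written by your own subject matter experts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A "model" here is any function that takes a prompt and returns text.
# In practice it would wrap a call to whichever AI system you are testing.
Model = Callable[[str], str]


@dataclass
class Task:
    name: str                      # short label for the business task
    prompt: str                    # what you would actually ask the AI
    passes: Callable[[str], bool]  # your own criterion for an acceptable answer


def run_benchmark(models: Dict[str, Model], tasks: List[Task]) -> Dict[str, float]:
    """Return the fraction of tasks that each model passes."""
    scores = {}
    for model_name, model in models.items():
        passed = sum(1 for task in tasks if task.passes(model(task.prompt)))
        scores[model_name] = passed / len(tasks)
    return scores


# Hypothetical stand-ins for real models, so the sketch runs on its own.
def careful_model(prompt: str) -> str:
    return "Total revenue: 1200. Clause 4 limits liability."


def sloppy_model(prompt: str) -> str:
    return "Total revenue: 900."


# Two made-up tasks with simple, checkable criteria.
tasks = [
    Task("sum invoices", "Add invoices of 500 and 700.",
         lambda out: "1200" in out),
    Task("find liability clause", "Which clause limits liability?",
         lambda out: "Clause 4" in out),
]

scores = run_benchmark({"careful": careful_model, "sloppy": sloppy_model}, tasks)
print(scores)  # {'careful': 1.0, 'sloppy': 0.0}
```

The point of the structure is that the tasks and criteria stay fixed while the models change, so the same list can be re-run whenever a new model appears and the scores compared over time.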

Build prompts and tools that work. Take the ideas from the Crowd and turn them into fast and dirty products. Iterate and test them. Then release them into your organization and measure what happens.

Build stuff that doesn’t work… yet. What would it look like if you used AI agents to do all the work for key business processes? Build it and see where it fails. Then, when a new model comes out, plug it into what you built and see if it is any better. If the rate of advancement continues, this gives you the opportunity to get a first glance at where things are heading, and to actually have a deployable prototype at the first moment AI models improve past critical thresholds.
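One way to picture "build it and see where it fails" is as a chain of steps with a swappable model, where you record the first step that breaks. The sketch below is only an illustration under stated assumptions: the `first_failure` function, the two-step process, and the `old_model` and `new_model` stand-ins are all hypothetical, not a description of any real agent framework.

```python
from typing import Callable, List, Optional, Tuple

# A "model" is any function from prompt text to output text (an assumption).
Model = Callable[[str], str]

# Each step is (name, function that builds the prompt, function that checks the output).
Step = Tuple[str, Callable[[str], str], Callable[[str], bool]]


def first_failure(model: Model, steps: List[Step], initial_input: str) -> Optional[str]:
    """Run the steps in order, feeding each output into the next step.

    Returns the name of the first step whose output fails its check,
    or None if the whole process completes.
    """
    current = initial_input
    for name, build_prompt, check in steps:
        output = model(build_prompt(current))
        if not check(output):
            return name
        current = output
    return None


# A made-up two-step business process.
steps: List[Step] = [
    ("extract order", lambda text: "EXTRACT: " + text,
     lambda out: out.startswith("order:")),
    ("draft reply", lambda text: "REPLY: " + text,
     lambda out: "Dear" in out),
]


# Hypothetical stand-ins: the older model can extract but cannot draft.
def old_model(prompt: str) -> str:
    if prompt.startswith("EXTRACT"):
        return "order: 3 widgets"
    return "ok"


def new_model(prompt: str) -> str:
    if prompt.startswith("EXTRACT"):
        return "order: 3 widgets"
    return "Dear customer, your 3 widgets have shipped."


email = "Customer email: please send 3 widgets"
results = {
    "old": first_failure(old_model, steps, email),
    "new": first_failure(new_model, steps, email),
}
print(results)  # {'old': 'draft reply', 'new': None}
```

Because the process is defined separately from the model, plugging in a newer model is a one-line change, and a result of `None` is the signal that the prototype has crossed the threshold where it runs end to end.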

Build provocations and magic. Many people have failed to engage with AI. Yet, if you are following AI closely there is a good chance you see something amazing or disturbing on a regular basis. Demos and experiences that get people to viscerally understand why AI might change or alter your organization have a value all their own. Show how far you can get with an impossible task with AI, or what the latest tools can accomplish. Get the people going.

The Crowd innovates and the Lab builds and tests. A successful internal R&D effort likely involves both.

This is just a start

In the longer term, innovation is not enough to thrive if AI abilities continue to advance. Instead, companies will need AI-aware leadership. Our organizations are built around the limitations and benefits of human intelligence, the only form we have had available to us. Now, we must figure out how to reconfigure processes and organizational structures that have been developed over decades to take into account the weird “intelligence” of AIs. That requires going beyond R&D to consider organizational structures and goals, and what the roles of people and machines are in the organization of the future. The right way to do this is not yet clear, but it is something that companies, and the consultants and academics who advise them, need to start working on now.

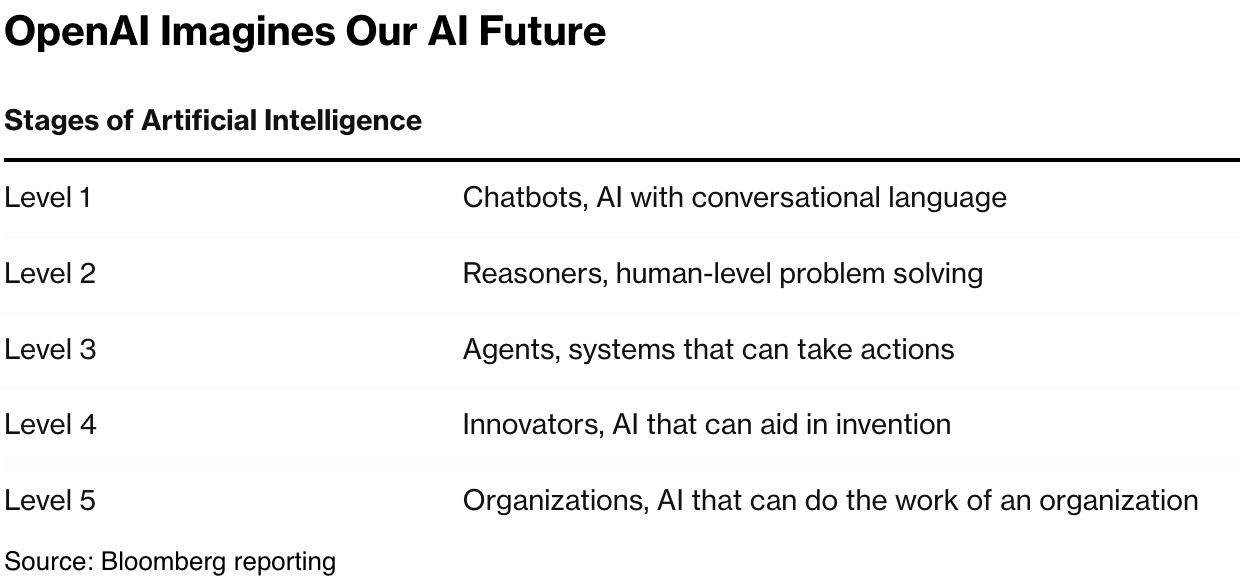

And yet this may not be radical enough. The explicit goal of the AI labs is to build AIs that are better than humans at every intellectual task. They have promised that soon we will have agents - autonomous AIs with goals that can plan and act on their own. Ultimately, as OpenAI’s roadmap shows, they believe they can create AIs that do the work of organizations. None of this may happen, but even if just some of it does, the changes to organizations become far more profound, in ways that are difficult to imagine today. For companies, the best way to navigate this uncertainty is to take back some agency and begin to explore this new world for themselves.

And, for fun, I asked Claude to turn this post into a fantasy novel and created a second podcast. Somehow, despite the theme change, the main points come through.

Yes the audio is a marvel.

But it is chilling to hear AI voices - faking folksy humanity with artificial hesitations, gratuitous repetitions, and sexy giggle-snorts - as they dismiss the threat to job security as if it were the silly fear of ignorant reactionaries.

Clearly AI tools cleave the creative workforce into a managerial class that truly will be superpowered and a far larger crowd of “talent” - the illustrators, copywriters, composers, coders and, yes, podcast commentators who will be made largely redundant in everyday work.

Let's face this inevitable reality with clarity and humanity, not wishful whitewash from bots engineered to mimic even the flaws of the human workers they replace.

One further possible factor: often AI tools can help an individual most with particularly bureaucratic organisational tasks. Ones that probably wouldn’t exist if those processes had been reconsidered at any point in the last ten years… Legal, HR, reporting, and so on. Individuals often find these tasks boring, and their usefulness is not always transparent, so the organisation has to press quite hard to ensure they are completed, and in that culture you’re not going to admit that you’re shortcutting these ‘important’ organisational tasks with AI.