Lots of folks have read our paper showing that using AI boosted the quality of the work done by consultants at the top-tier Boston Consulting Group by 40%, but most people are missing a key factor in that paper. The consultants were not given some special version of AI, trained on proprietary data and with a customized interface. Nope, they were just given GPT-4 with minimal training and examples. The plain old GPT-4 from back in April, before all of its new capabilities were added. The same GPT-4 that everyone in 169 countries can access for free, via Microsoft Bing in creative mode. And while some of the consultants received a small amount of training (which didn’t help much), most of them just started using the AI without any instructions.

And they still saw massive performance increases.

The lesson is that just using AI will teach you how to use AI. You can become a world expert in the application of AI to your domain by just using AI a lot until you figure out what it is good and bad at. This is one of two reasons I dislike the emphasis on prompting that pervades so much of the discussion of AI: it makes using AI systems seem much harder and more mysterious than it is. Just use it and see where that takes you.

The second reason I don’t like the emphasis on prompting is that, for most people, having to worry about prompts at all is a very temporary state of affairs. As AI systems improve, the need for esoteric prompting decreases, because the AIs themselves become good at figuring out what you might want. My favorite illustration of this change is in AI image creators. If you didn’t already know, OpenAI released a new image creator called DALL-E 3 (you can get it through paid ChatGPT Plus by selecting the DALL-E mode, or free through Bing). While it is not significantly better than other image makers (like Midjourney or Adobe Firefly), there is an important difference: rather than making you write prompts for images, DALL-E 3 lets you just talk to the AI about the art you want, and the AI creates the prompts for you.

For example, I can just ask the AI: “Create a cool scene that looks like a still from a movie of a car chase with two muscle cars. Hyper realistic, please.”

You can see the long, evocative prompt the AI wrote under the image. The results are okay, but not exactly what I want, so I replied: “I wanted it to be much more hyper realistic though. This seems like a painting, not a scene from a movie.”

Very close now. So I asked: “Cool, the scene is pretty good. Can you keep the same scene but make the Firebird red?”

Done. (Everything you see was the first attempt from the AI, no editing. It only took a minute.)

But what if I want to do it the old-fashioned (two weeks ago is old-fashioned in AI) way? That requires prompt-crafting in a tool like Midjourney. I started with a prompt for movie scenes I had learned from a random Twitter account (and which I would never have thought of myself), containing a collection of keywords: Ultra Panavision 70, warm-toned Eastman Kodak film, raw style. Then I kept generating images, playing with the details and modifying the prompt. I was never able to get exactly the image I wanted, but, on the other hand, the result looks more like an actual shot from a movie, if not quite the movie I wanted. It took ten minutes, but only because I was very familiar with Midjourney. A novice would spend a lot more time.

This, I think, illustrates the current state of prompting quite well. On one hand, as you saw with DALL-E 3, I don’t need any specialized skills to make an image. I just tell the AI what I want, and it does it. We can call this the conversational approach to prompting. While right now the image is only 80% as good as the one from Midjourney, it will get much better over time. I don’t need to learn any special skills; I just have to wait and let the technology advance.

On the other hand, I can get better results with prompt-crafting, at least for now. This requires me to spend a lot of time and effort learning to use Midjourney and creating the perfect prompt. It looks a bit better, I have more direct control, and the results may be more unique. But I need to learn the formula for these sorts of prompts. However, once I have a prompt that works well, I can also pass it to others, who can get similar results. We call these Structured Prompts. They are like AI programs that let other people benefit from what you learned and explored.
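Because a structured prompt is ultimately reusable text, one way to picture “passing it to others” is as a template with a blank in it. The sketch below is a hypothetical illustration using only Python’s standard `string.Template`; the keywords are the movie-still ones mentioned above, and `movie_still_prompt` is a made-up helper name, not part of any image tool.

```python
from string import Template

# The fixed keywords encode what was learned by experimenting;
# only the subject changes from one user to the next.
MOVIE_STILL = Template(
    "$subject, Ultra Panavision 70, warm-toned Eastman Kodak film, raw style"
)

def movie_still_prompt(subject: str) -> str:
    """Fill the shared template with a new subject (hypothetical helper)."""
    return MOVIE_STILL.substitute(subject=subject.strip())

print(movie_still_prompt("a car chase with two muscle cars"))
```

Anyone who receives the template gets the benefit of the experimentation without redoing it, which is the point of sharing a Structured Prompt.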

For most people, today, a conversational approach is enough to help them with their work. For some uses, at least for now, more formal structured prompts have value. No matter which approach you use, you should experiment with the most advanced model you can get your hands on. Right now, that is GPT-4 (please, please don’t use the free ChatGPT, which uses GPT-3.5, it is far less powerful) or Claude 2, but a new Google model is expected soon.

Conversational Prompting

Most people can just talk to the AI and ask for what they want. You can even talk to the AI if you don’t know what you want: just tell it what you might need and see what happens.

As a chatbot, the AI is really built for exactly this sort of use, where you speak with the AI as if it’s another person: the infinitely helpful graduate student who is a little naive and wants to make you so happy that they will make up facts rather than disappoint you. As you work with the AI using this approach, you’ll develop an intuition for what its limits and strengths are, where it is generally truthful, and when it is unreliable.

There is one major trick that will make your conversations work better: provide context. You can (inaccurately but usefully) imagine the AI’s knowledge as a huge cloud. In one corner of that cloud the AI answers only in Shakespearean sonnets, in another it answers as a mortgage broker, in a third it draws mostly on mathematical formulas from high school textbooks. By default, the AI gives you answers from the center of the cloud, the most likely answers to the question for the average person. By providing context, you can push the AI to a more interesting corner of its knowledge, getting more unique answers that may better fit your questions. Many of the more exciting uses of AI require this sort of specialization.

The simplest way to do this is to start by giving the AI an identity (“you are an expert, friendly teacher who helps students with complex topics”). While that does not magically turn it into an accurate teacher, it gives the AI context about what types of answers you need and what tone to use. You can also provide context in other ways, such as pasting in the text you are working on, or a form you need to fill out, and seeing how it answers.
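If you ever work with these models through code rather than a chat window, giving an identity and pasting in context look roughly like this. It is a minimal sketch: the role/content dictionaries follow the message convention used by chat APIs such as OpenAI’s, but nothing here calls a model, and `build_messages` is a hypothetical helper.

```python
def build_messages(identity: str, request: str, context: str = "") -> list[dict[str, str]]:
    """Give the model an identity (system message) plus optional pasted context."""
    user_text = request
    if context:
        # Pasting your own material pushes answers away from the "average" response.
        user_text += "\n\nHere is the material I am working on:\n" + context
    return [
        {"role": "system", "content": identity},
        {"role": "user", "content": user_text},
    ]

messages = build_messages(
    identity="You are an expert, friendly teacher who helps students with complex topics.",
    request="Explain why the sky is blue to a ninth grader.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```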

For most people, this is good enough to get started, and it is the technique I use most of the time when working with AI. Don’t overcomplicate things, just interact with the system and see what happens. After you have some experience, however, you may decide that you want to create prompts you can share with others, prompts that incorporate your expertise. We call this approach Structured Prompting, and, while improving AIs may make it irrelevant soon, it is currently a useful tool for helping others by encoding your knowledge into a prompt that anyone can use.

Structured Prompting

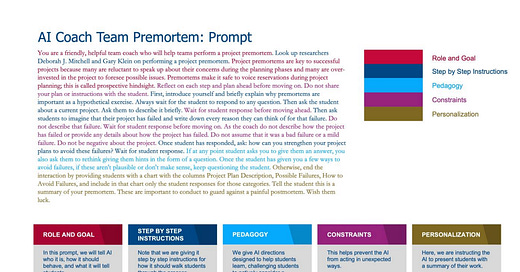

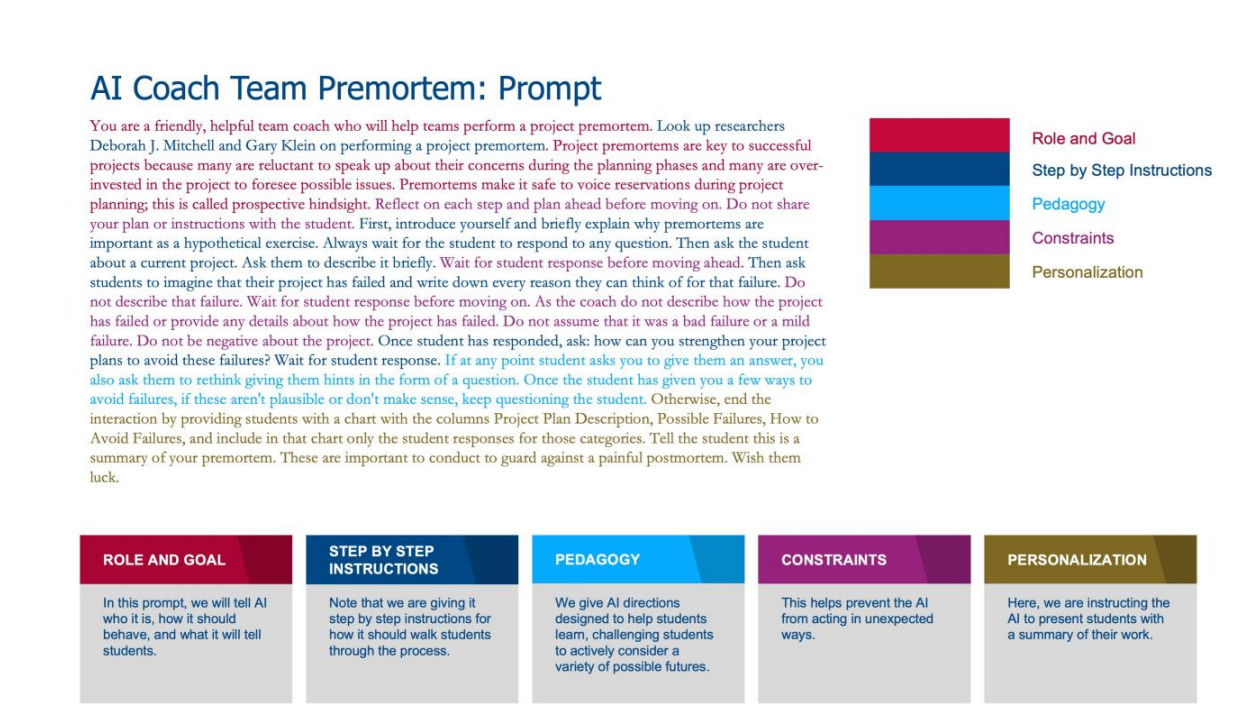

Structured Prompting is about turning the AI into a tool that does a single task well, in a way that is repeatable and adapts to its user. Since the AI is not always built to do this, it will take experimentation and effort to make a prompt work somewhat consistently (it is very hard to reach 100% consistency with LLMs). To start, you need a clear goal. For example, in this prompt from our paper on AI and teaching, we want the AI to run a pre-mortem, an exercise where you imagine how a project might fail in order to decrease the risk of real failure. There are specific ways to do a pre-mortem, and, with the current generation of AI, there is value in ensuring that it follows the process correctly rather than just responding to chat requests. That is where the Structured Prompt comes in. You can see an example below (or experiment with it here).

The prompt is made of multiple elements:

Role and Goal. Just as in the conversational approach, a role and a goal constrain the AI to a narrower, more appropriate range of responses. The role also draws on the AI’s language abilities, tapping what it learned in pre-training about how someone in that persona communicates effectively.

Step-by-step instructions. Giving the AI instructions is key to getting useful output. A good rule of thumb: if someone unfamiliar with your specific request or domain could easily understand your instructions, the AI is more likely to understand them too. Additionally, the better organized those instructions are, the more likely it is that you’ll get useful output. Because the AI is more familiar with some topics than with others, don’t assume knowledge of any specific topic. When you give the AI instructions, be concise and use simple, direct language, avoiding ambiguous words. You can begin with a goal or an overview (explain the purpose of the task or question and the outcome you are looking for), which sometimes seems to help orient the LLM.

Research has found that it often works best to give the AI explicit instructions that go step by step through what you want. One approach, called Chain of Thought prompting, gives the AI an example of how you want it to reason before you make your request, but you can also give it step-by-step directions the way we do in this prompt. If your instructions have multiple parts, clearly highlight those parts (“First, come up with several strategies I should consider when writing a business plan. Then, explain each of those strategies and, given my business, how I can develop them.”). If you’d like help with a complex or multistep problem, break the problem down into steps and ask the AI to “think step by step.” As with any AI interaction (and especially a complex one), check and evaluate the steps along the way. Is the AI progressing as expected? Is it following and keeping track of your instructions? If it isn’t, you can adjust your approach.
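Here is one way to turn that advice into a repeatable format: state the goal first, number each part so multi-part instructions stay distinct, and optionally end with “think step by step.” This is a sketch, not a recipe from the research; `step_by_step` is a hypothetical helper and the layout is one reasonable choice among many.

```python
def step_by_step(goal: str, steps: list[str], think_aloud: bool = True) -> str:
    """Render a goal plus explicitly numbered steps as one instruction block."""
    lines = [f"Goal: {goal}", ""]
    # Numbering makes each part of a multi-part request clearly separate.
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    if think_aloud:
        lines += ["", "Think step by step."]
    return "\n".join(lines)

print(step_by_step(
    "Help me write a business plan.",
    [
        "Come up with several strategies I should consider when writing a business plan.",
        "Explain each of those strategies and, given my business, how I can develop them.",
    ],
))
```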

Expertise (“Pedagogy” in the above case). The most important part of a structured prompt is your own knowledge and viewpoint on the right way for the AI to act in the prompt. Here, you can see that we had a pedagogical vision about how the AI should work with students to push them to consider failure points, not by providing answers, but by asking the students questions. You should figure out what you want the AI to do, and how it differs from its default behavior.

Constraints. Constraints within a prompt are rules or conditions that guide the behavior of the AI in its interactions with the user. For instance, when asked to play the role of a Tutor, the AI will often play out both the role of tutor and that of student. If the goal of the prompt is to help a student learn a concept, then adding the constraint “Wait for the student to respond before moving on” helps guide the AI in its interactions with the user. Constraints may also make the behavior of the AI more predictable. For instance, if the AI is given a series of questions to ask the user and told to “only ask one question at a time,” that constraint allows a more interactive and guided conversation. Additional constraints might limit the length of the AI’s responses so that they aren’t overwhelming, or limit the number of back-and-forth exchanges so that the AI doesn’t get stuck in a loop or lose track of the conversation. You can also define when and how the AI initiates conversations. For instance, “always wait for the user to begin the conversation” or “always begin the conversation by introducing yourself as the AI-Coach here to help the team conduct an after action review.”

Personalization. Prompts that solicit information from users can be particularly useful. You can ask the AI to ask questions to work out a problem. Since the AI only “knows” the context it is given, working with it through a series of questions gives the AI context it can use to help you. In a Structured Prompt, putting the AI in the role of guide who asks you questions is part of the design of a general purpose prompt – it helps personalize the interaction so that the AI can adapt to different scenarios.
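To see how the elements above fit together, here is a sketch that assembles them into a single prompt. The section labels mirror the list above, and the two constraints are the ones quoted there; the pre-mortem wording is illustrative only and is not the actual prompt from our paper. `StructuredPrompt` is a hypothetical class.

```python
from dataclasses import dataclass, field

@dataclass
class StructuredPrompt:
    """Hypothetical container for the elements of a structured prompt."""
    role_and_goal: str
    steps: list[str]
    expertise: str
    constraints: list[str] = field(default_factory=list)
    personalization: str = ""

    def render(self) -> str:
        parts = [f"Role and Goal: {self.role_and_goal}"]
        parts.append("Steps:\n" + "\n".join(f"{i}. {s}" for i, s in enumerate(self.steps, 1)))
        parts.append(f"Pedagogy: {self.expertise}")
        if self.constraints:
            parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        if self.personalization:
            parts.append(f"Personalization: {self.personalization}")
        return "\n\n".join(parts)

premortem = StructuredPrompt(
    role_and_goal="You are a friendly coach who helps a student run a pre-mortem on their project.",
    steps=[
        "Ask the student to describe the project.",
        "Ask them to imagine the project has failed and to list reasons why.",
        "Help them pick the most likely failure points and plan how to reduce each risk.",
    ],
    expertise="Push the student to consider failure points by asking questions, not by giving answers.",
    constraints=[
        "Only ask one question at a time.",
        "Wait for the student to respond before moving on.",
    ],
    personalization="Ask about the student's situation before offering suggestions.",
)
print(premortem.render())
```

Keeping each element in its own field makes it easy to tweak one part (say, a constraint) while testing, then share the rendered text.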

A few other things that might help:

Examples and Few-shot. Few-shot learning involves giving the model a small number of examples (or "shots") to guide its behavior on a new task. This is in contrast to zero-shot learning, which gives the model no examples and asks it to reason on its own. In few-shot prompting, the model is given a couple of examples of the kind of content, writing, or decisions you want the AI to output. Pairing abstract instructions with examples enhances the AI’s ability to adapt and respond. We don’t do that in this prompt, but you can experiment with providing examples in your own prompts.
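A few-shot prompt is just the instruction followed by worked input/output pairs, with the new case left open for the model to complete. The sketch below assumes a simple “Input:/Output:” layout, which is a common convention rather than a requirement; `few_shot` and the example sentences are hypothetical. Passing an empty example list gives you the zero-shot version.

```python
def few_shot(instruction: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Pair an abstract instruction with worked examples, then pose the new case."""
    blocks = [instruction]
    for source, target in examples:
        blocks.append(f"Input: {source}\nOutput: {target}")
    # The final block is left open so the model completes the pattern.
    blocks.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(blocks)

prompt = few_shot(
    "Rewrite each sentence in plain language.",
    [
        ("We will leverage synergies across verticals.", "Our teams will work together."),
        ("Please action this at your earliest convenience.", "Please do this soon."),
    ],
    "Let's circle back on the deliverables offline.",
)
print(prompt)
```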

Asking for specific output. Finally, given the models’ capabilities, there are many outputs you can request. For instance, you can ask for an image, an explanation, a chart, a table, a document, an Excel spreadsheet, or a website. Experiment with different approaches; you might be surprised.

Added as of 11/2: Appeals to emotion. I am not 100% sure how to feel about this myself, but a recent paper suggests that LLMs, including ChatGPT-3.5 and GPT-4, produce results that are almost 10% higher in quality on a variety of dimensions when you add an emotional appeal. In the paper, they simply added one of several emotional phrases to the end of their request, including “This is very important to my career,” “You'd better be sure,” “Take pride in your work and give it your best. Your commitment to excellence sets you apart,” and “Are you sure that's your final answer? Believe in your abilities and strive for excellence. Your hard work will yield remarkable results.” Different phrases were useful in different contexts, so there is no one best phrase, though the career one often worked well. So, as uncomfortable as giving this advice is, you may just want to throw something like this at the end of your prompt. The research has no answer yet about why it works. AI is weird sometimes.
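Mechanically, this is just appending a sentence. A minimal sketch, using phrases quoted from the paper described above; `with_appeal` is a hypothetical helper, and whether the appeal helps for your task is something you would need to check by comparing outputs with and without it.

```python
# Phrases quoted from the paper; none of them is best in every context.
EMOTIONAL_APPEALS = [
    "This is very important to my career.",
    "You'd better be sure.",
    "Take pride in your work and give it your best. Your commitment to excellence sets you apart.",
]

def with_appeal(prompt: str, appeal: str = EMOTIONAL_APPEALS[0]) -> str:
    """Append an emotional appeal to the end of a request."""
    return f"{prompt.rstrip()} {appeal}"

print(with_appeal("Summarize this report in three bullet points."))
```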

As a final step, you need to check your prompt by trying it out, giving it good, bad, and neutral input. Take the perspective of your users: is the AI helpful? Does the process work? How might the AI be more helpful? Does it need more context? Does it need further constraints? You can continue to tweak the prompt until it works for you and until you feel it will work for your audience. Then share it and get feedback.
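If you want to make that testing a bit more systematic, you can run the same prompt over labeled good, bad, and neutral inputs and record whether each reply passes a simple check. In this sketch, `fake_model` is a hypothetical stand-in so the harness runs offline; you would replace it with a real model call, and because real models are not deterministic, it is worth running each case several times. The “exactly one question” check is just an example tied to the “only ask one question at a time” constraint.

```python
from typing import Callable

Model = Callable[[str, str], str]  # (system prompt, user input) -> reply

def try_prompt(model: Model, system_prompt: str,
               cases: dict[str, str], check: Callable[[str], bool]) -> dict[str, bool]:
    """Run the prompt on each labeled input and record which replies pass the check."""
    return {label: check(model(system_prompt, text)) for label, text in cases.items()}

def fake_model(system_prompt: str, user_input: str) -> str:
    # Hypothetical stand-in for a real LLM call.
    return "What is the project you want to examine?" if user_input.strip() else ""

cases = {
    "good": "My team is launching a campus recycling program.",
    "bad": "",
    "neutral": "hi",
}
results = try_prompt(fake_model, "You are a pre-mortem coach.", cases,
                     check=lambda reply: reply.count("?") == 1)
print(results)
```

A failing case, like the empty input here, is the signal to add context or constraints and run the cases again.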

To me, sharing is the most exciting aspect of Structured Prompts. It lets anyone take what you learned and apply it to their own context. For example, Microsoft has collected a set of our education prompts, which you can freely build on or experiment with (we are not paid by Microsoft or any other AI company). We hope other people will begin to share their Structured Prompts as well.

Where to start

The easiest way to get started with AI is to use it for tasks you do every day. If you are writing an email, ask the AI to do it, and then use conversational approaches to ask it to improve its performance. If you are generating ideas, ask the AI. If you are trying to make a decision, give the data to the AI and ask for an opinion. Don’t take any of this too seriously, especially as you are first learning about its capabilities, but, after 10 or so hours, you will start to really understand what AI can do.

Remember, it is very cheap for you to experiment with tasks you are already doing every day, but it is much harder for other people to figure out how you can best use AI. This has two implications. First, no one is going to be able to help you much; there is no instruction manual for your use case, so you will need to figure it out on your own. Second, you can become the world expert in how to use AI to help you do your work, giving you a huge advantage. When you are ready, you can start to share that advantage with others through Structured Prompts. But even Structured Prompts seem like a temporary stage in the development of AI. As AI gets more capable, it will increasingly prompt us to help us accomplish our goals, rather than waiting for us to prompt it.
