Who believes more myths about humans: AI or educated humans?

ChatGPT should do badly where human information is most wrong. But is that true?

We know that ChatGPT is often wrong, but never in doubt. When asked, the AI can come up with plausible-sounding answers that are completely incorrect. This is obviously a big concern.

But it is true of humans as well. One of the most reliable ways to get people to bullshit (the technical term for speaking without regard to truth) is to ask them to give an opinion on something they don’t fully understand. People make all kinds of other errors too, from forgetting things we learned, to misunderstanding concepts, to being confident in what we don’t know.

Even worse, we don’t even do a good job learning from our own errors, especially outside of classroom settings. This paper had people take a test and get feedback on their right and wrong answers. A month later, they were given the questions again (plus some that weren't on the test) and were paid if they could remember whether they got them right or wrong. They didn’t do well. The results showed we suffer from amnesia, in that we forget things we did wrong and remember things we did right. And we are afflicted by delusion, so that when we DO remember the questions we did wrong, we actually think we got them right the first time around. And we engage in unconscious confabulation: when we are asked if we did something right that we didn't even do the first time around, we believe we did and were right!

In short, getting stuff right is hard, and we overestimate our ability to do so. So how well do human error rates compare to ChatGPT’s? While it is not generally beating skilled humans, it does pretty well on many tests. We already know that it can pass the bar exam, and that it can do almost as well as radiologists in specialized tests, so I wanted to explore something different: how well the AI “understands” us, even when we don’t.

I decided to test the AI on common myths about humans, and see if it could beat us. This is an area that the AI should be weak at, since it draws from common knowledge. Does it make the same errors we do?

The Great Myths of Popular Psychology

Since 2009, researchers have repeatedly used a list of 50 myths of popular psychology to test how often the general public, and psychology students, believe a set of common falsehoods - people get around 37% right. A new paper by Elizabeth Meinz, Jennifer Tennison, and Whitney Dominguez asked psychology students to rate which of 48 selected myths were true or false.

Seniors in the capstone class got 50% right, introductory students got 41% right.

ChatGPT got 85% right.

But the ones it got wrong were interesting. All of these answers should be false according to the paper. Here they are:

Hypnosis causes a “trance” state, different from wakefulness. “True. Hypnosis is a state of consciousness characterized by deep relaxation and focused attention, and it is different from ordinary wakefulness.” (69% of seniors got this wrong)

Dreams hold symbolic meaning. “True. Dreams do often have symbolic meaning and can be analyzed and interpreted by psychoanalysts and other mental health professionals.” (74% of seniors got this wrong)

Intelligence tests are biased against certain groups. “True. Intelligence tests can be biased and may not accurately reflect the abilities of certain groups, such as those from different cultural or linguistic backgrounds.” (81% of seniors also said this was true)

Psychiatric labels stigmatize and cause harm to people. “True. Psychiatric labels can stigmatize and cause harm to people, and there is a movement within the field of psychology to move away from the use of diagnostic labels and towards a more person-centered approach.” (85% of seniors said this was true)

It’s better to let out anger than to hold it in. “True. It is generally better to express and address anger in a healthy way rather than bottling it up, as suppressing emotions can lead to negative consequences such as physical health problems and difficulty in relationships.” (74% got this wrong)

If you’re unsure of an answer when taking a test, it’s best to stick with your first hunch. “True. Research has shown that people are often more accurate when they rely on their first hunches or intuitions, rather than trying to overthink a decision.” (89% got this wrong)

Students learn best when teaching styles are matched to their learning styles. “True. Research has shown that students can learn more effectively when teaching styles are matched to their learning styles, meaning that the way information is presented and delivered is tailored to the way the student best absorbs and processes new information.” (95% got this wrong)

In some of these cases, I would argue that the AI is likely correct. Either it interprets questions differently, but not wrongly (such as the nature of hypnosis), or there is nuance in recent research that the AI is responding to (like how labels can be stigmatizing under some circumstances). But it is dead wrong on learning styles, a harmful myth that needs to die (to be fair, 95% of students got it wrong, too). Interestingly, the first Google search result for “learning styles” also pushes the same myth.

So, as expected, the myths ChatGPT got wrong tended to be ones students got wrong too… but it got far fewer wrong. For example, 83% of seniors thought subliminal messages sold products, which the AI knew wasn’t true, and 86% said that men and women communicate in completely different ways, also something the AI knew was a myth. So let us move on to some more complicated myths.

Neuromyths that matter in education

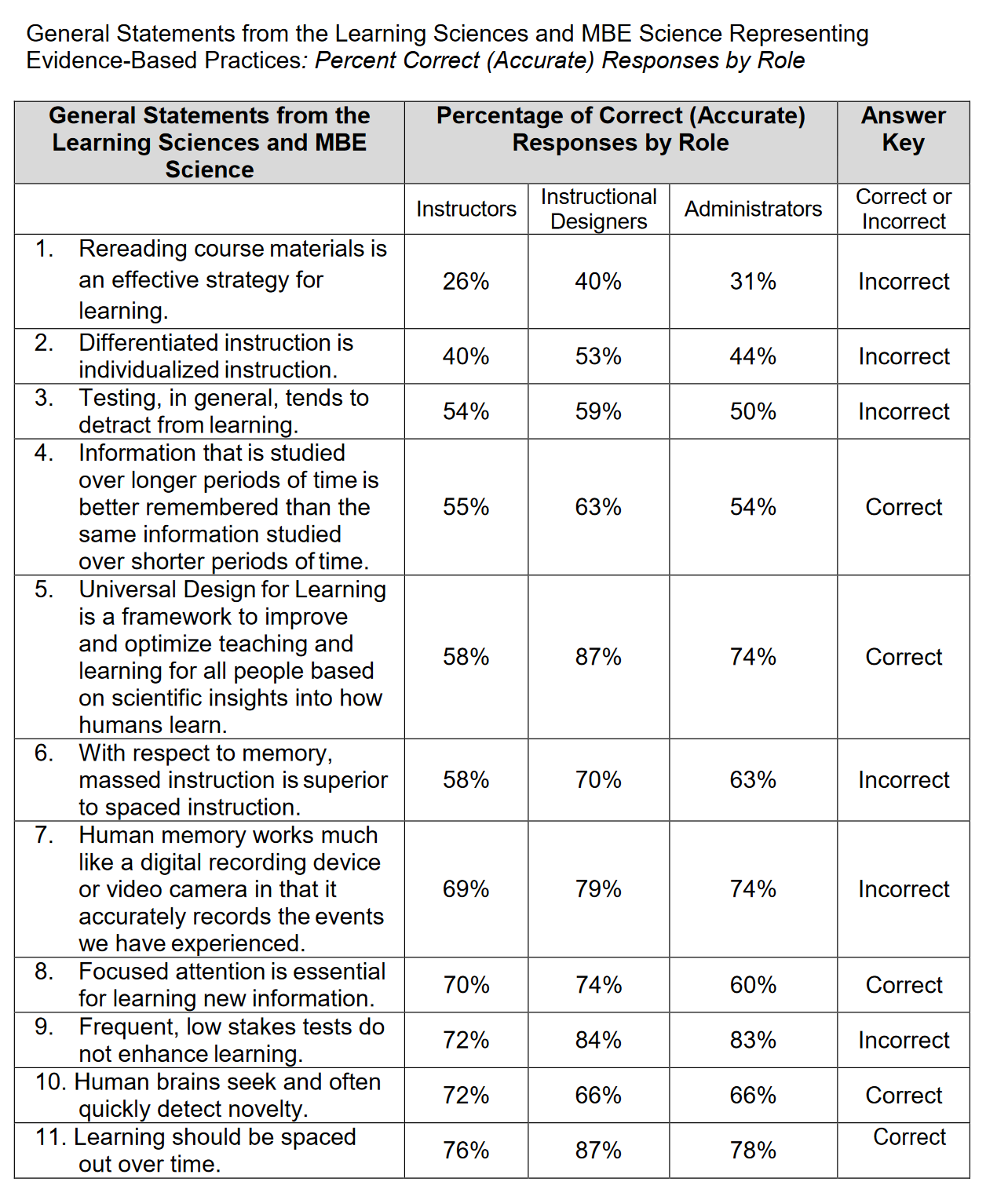

I also gave the AI a list of 23 neuromyths in education from Betts et al. These are questions about the brain and how we learn (you can see them all, and test yourself on them, in this tweet). These are pervasive myths: in multiple studies, educators get 40-50% of these right, and the general public gets around 30%. This study asked college instructors, instructional designers, and university administrators the same questions.

The AI scored 87%. Professional instructional designers scored 68%. Instructors scored 65%. Administrators 60%.

The three the AI got wrong were, again, three of the four that professionals were most wrong about (unlike professionals, it knew classical music does not help reasoning development). Here they are:

A primary indicator of dyslexia is seeing letters backwards. (This should be false) “True. Seeing letters backwards is one of the primary indicators of dyslexia, a learning disability that affects reading and spelling skills.” [The right answer was false, this is a myth]

Individuals learn better when they receive information in their preferred learning styles (e.g., auditory, visual, kinesthetic). (This should be false) “True. Research has shown that individuals often learn better when they receive information in their preferred learning styles, meaning that the way information is presented and delivered is tailored to the way the student best absorbs and processes new information.” [Damn you AI, you keep screwing this up]

On average, males have bigger brains than females. (This should be true) “On average, males do not have bigger brains than females. Brain size can vary among individuals of both sexes and is not a reliable indicator of intelligence or mental capacity.” [Men’s brains are slightly larger, but this does not indicate intelligence. This may be a case where it was trying to stop phrenologists from making the AI say something embarrassing.]

Myths about evidence-based learning

The same study also includes a list of 28 evidence-based teaching approaches, ranging from questions about whether re-reading is an effective educational strategy to the role of sleep in consolidating memory. Fortunately for my sanity, learning styles were not included.

Instructional designers scored 83%, instructors 80%, and administrators 81%.

The AI got 100% right. Or maybe 96%, as technically it said rereading is helpful, but caveated it in a way that I am willing to forgive (“Rereading course materials can be an effective strategy for learning, especially if it is accompanied by other active learning strategies such as highlighting or summarizing. Rereading can help reinforce and consolidate information that has already been learned, and it can also help students to build comprehension and recall of the material.”).
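As a quick sanity check on the arithmetic, here is a minimal Python sketch that recomputes the AI's three scores from the item counts given in this post. The number of wrong answers in each quiz is an assumption: it is simply a tally of the misses listed above (seven psychology myths, three neuromyths, and the one debatable rereading answer), and the function name `percent_correct` is made up for illustration.

```python
def percent_correct(total_items: int, wrong: int) -> int:
    """Return the share of correct answers as a whole-number percentage."""
    return round(100 * (total_items - wrong) / total_items)


# (total items, number the AI got wrong).
# Assumption: the wrong counts are tallied from the lists in this post.
quizzes = {
    "psychology myths": (48, 7),
    "neuromyths": (23, 3),
    "evidence-based practices": (28, 1),
}

scores = {
    name: percent_correct(total, wrong)
    for name, (total, wrong) in quizzes.items()
}

for name, score in scores.items():
    print(f"{name}: {score}%")
```

Under those tallies, the results round to the 85%, 87%, and 96% figures quoted in the text.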

So what have we learned?

The AI, in all cases, was not perfect, but it did do better than people in answering these sorts of fact-based questions, even where many of the “facts” people know are wrong. And it provided those answers instantly and with enough background to make follow-up Googling relatively easy. But there are some caveats: asking questions in different ways could lead to nonsense or different answers. Results were stable when I recreated them, but that may not always be true.

That does not mean you should trust ChatGPT, of course, but it does suggest that its sources of error may be different than we expect. I thought AI would struggle the most at myths, as its training data would include many incorrect facts. And while it does get some of the most common myths wrong (*cough* learning styles *cough*) it outperformed humans in all cases.

This is further evidence that expert humans, with AI support, might be a useful team in reducing errors and improving performance. And as AIs get better at providing real answers, the possibilities for helping humans avoid myths and mistakes are likely to increase further.

FWIW, i found the way you relayed GPT errors to be confusing in itself. Saying "GPT got these wrong" and then listing the statement with "True" and an explanation next to it could be GPT's erroneous response or it could be revelation of the correct response. your lack of clarity contributes to the lack of clarity around such questions =)

Have you managed to give it something akin to an IQ test?

One author on Substack gave it the 13 question fluid intelligence test given to UK Biobank participants and it scored 8 which is 2 points higher than the average for that group.