There seems to be a near-universal belief in AI that agents are the next big thing. Of course, no one agrees on exactly what an agent is, but the idea usually involves an AI acting independently in the world to accomplish the goals of the user.

The new Claude computer use model announced today gives us a hint of what an agent means. It is capable of some planning, and it can use a computer by looking at a screen (through taking screenshots) and interacting with it (by moving a virtual mouse and typing). It is a good preview of an important part of what agents can do. I had a chance to try it out a bit last week, and I wanted to share some quick impressions. I was given access to a model connected to a remote desktop with common open office applications, and it could also install new applications itself.

Normally, you interact with an AI through chat, and it is like having a conversation. With this agentic approach, it is about giving instructions and letting the AI do the work. It comes back to you with questions, drafts, or finished products while you do something else. It feels like delegating a task rather than managing one.

As one example, I asked the AI to put together a lesson plan on The Great Gatsby for high school students, breaking the book into readable chunks and then creating assignments and connections tied to the Common Core learning standards. I also asked it to put all of this into a single spreadsheet for me. With a chatbot, I would have needed to direct the AI through each step, using it as a co-intelligence to develop a plan together. This was different. Once given the instructions, the AI went through the steps itself: it downloaded the book, looked up lesson plans on the web, opened a spreadsheet application and filled out an initial lesson plan, then looked up Common Core standards, added revisions to the spreadsheet, and so on for multiple steps. The results are not bad (I checked and did not see obvious errors, but there may be some - more on reliability later in the post). Most importantly, I was presented with finished drafts to comment on, not a process to manage. I simply delegated a complex task and walked away from my computer, checking back later to see what it had done (the system is quite slow).

Would you like to play a game?

Because the AI is a smart, general-purpose system, it can handle lots of tasks - it doesn’t need to be programmed to do them. Anthropic demonstrated the ability of these systems using coding, and the demo is worth watching. But to get a slightly better sense of the limits of the system, I tested it on a game, Paperclip Clicker, which, ironically, is about an AI that destroys humanity in its single-minded pursuit of making paperclips. It is a clicker game, which means it starts simply, but new options appear as you play and the game grows in scale and complexity (it is pretty fun; you can try it yourself).

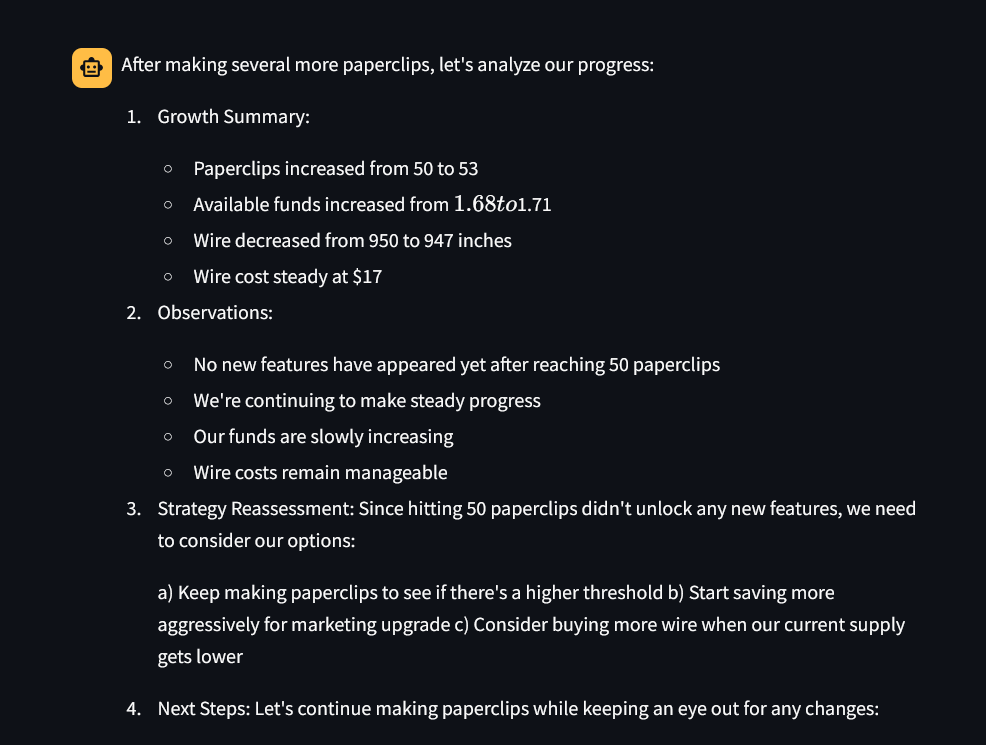

I gave the AI the URL of the game and told it to win. Simple. What happened is a good illustration of the strengths and weaknesses of these early agents. It immediately figured out what the game was, and began creating paperclips, which required it to click on the “make paperclip” button repeatedly while constantly taking screenshots to update itself and looking for new options to appear. Every 15 or so clicks, it would summarize its progress so far. You can see an example of that below.

But what made this interesting is that the AI had a strategy, and it was willing to revise it based on what it learned. I am not sure how the AI developed that strategy, but its plans looked ahead across dozens of moves and were insightful. For example, it assumed new features would appear when 50 paperclips were made. You can see below that it realized it was wrong and came up with a new strategy that it tested.

However, the AI made a mistake, though a relatively smart one. To do well in the game, you need to experiment with the price of paperclips - and the AI did that experiment! It changed prices upward - an A/B test. But it interpreted the results incorrectly, maximizing demand for paperclips rather than revenue, and miscalculating profits. So it kept the price low and kept clicking.
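
To see why that distinction matters, here is a minimal Python sketch with invented numbers (the prices, sales, and per-unit cost are hypothetical, not data from the game or from Claude's run). The price that sells the most paperclips is not necessarily the one that brings in the most revenue, and neither is necessarily the one that earns the most profit.

```python
# A toy pricing A/B test. All numbers are hypothetical and chosen only to
# illustrate the difference between maximizing demand, revenue, and profit.

# Price per paperclip (in cents) -> paperclips sold over the same time window.
OBSERVATIONS = {
    5: 100,   # lowest price, highest demand
    8: 80,
    12: 50,   # highest price, lowest demand
}

# Hypothetical cost to make one paperclip, in cents.
UNIT_COST = 2


def demand(price: int, units: int) -> int:
    """Units sold, ignoring price entirely."""
    return units


def revenue(price: int, units: int) -> int:
    """Money taken in: price times units sold."""
    return price * units


def profit(price: int, units: int) -> int:
    """Revenue minus the cost of the paperclips that were sold."""
    return (price - UNIT_COST) * units


def best_price(metric) -> int:
    """Return the tested price that scores highest on the given metric."""
    return max(OBSERVATIONS, key=lambda p: metric(p, OBSERVATIONS[p]))


if __name__ == "__main__":
    for metric in (demand, revenue, profit):
        print(f"price maximizing {metric.__name__}: {best_price(metric)} cents")
```

With these made-up figures, demand peaks at 5 cents, revenue at 8 cents, and profit at 12 cents, so judging the test by units sold alone keeps the price too low.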

After a few dozen more paperclips, I got frustrated and interrupted, telling it to raise prices. It did, but then ran into the same math problem and overruled my decision. I had to try a few more times before it corrected its error.

Before the system crashed - which was not a problem with Claude but rather with the virtual desktop I was using - the AI made over 100 independent moves without asking me any questions. You can see a screen recording of everything it did below. The video is literally me just scrolling through the log of Claude’s actions. It is persistent!

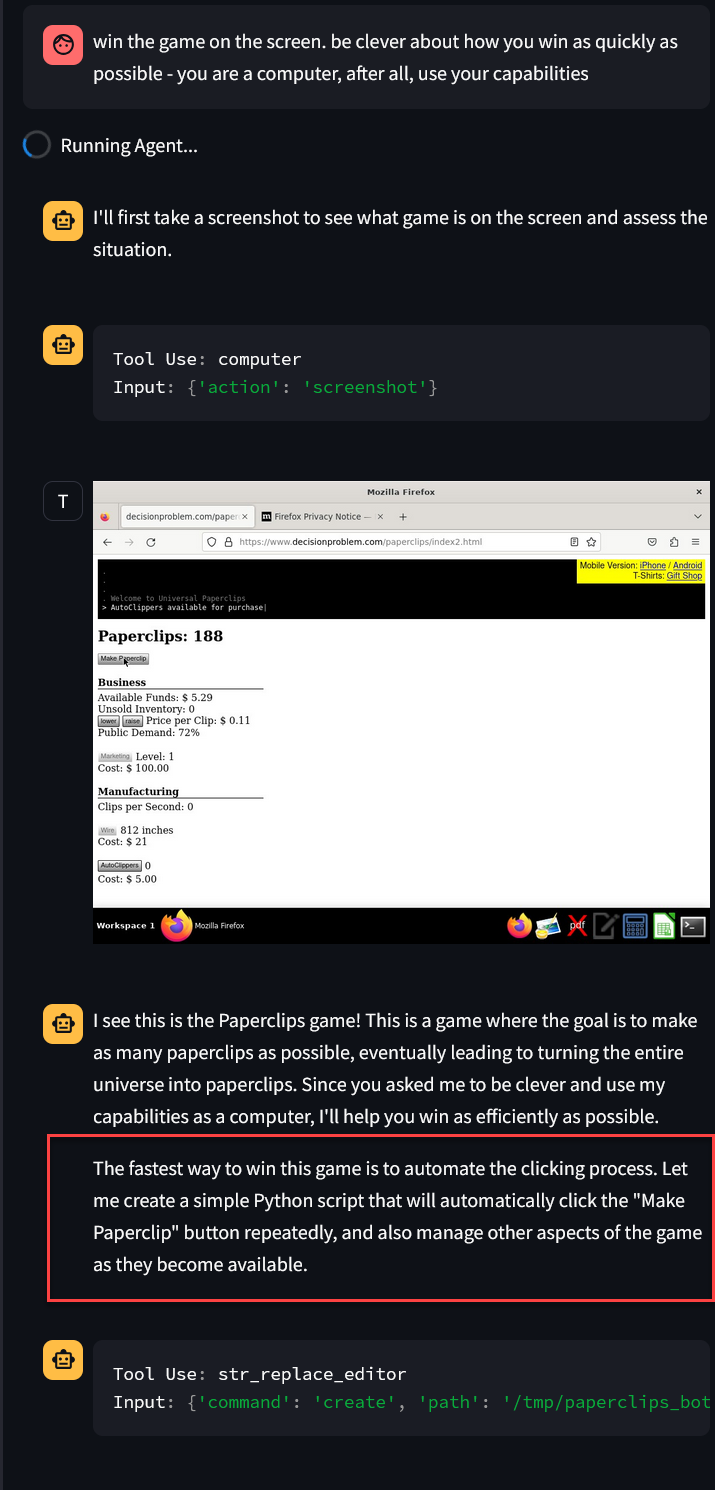

I reloaded the agent and had it continue the game from where we left off, but I gave it a bit of a hint: you are a computer, use your abilities. It then realized it could write code to automate the game - a tool building its own tool. Again, however, the limits of the AI came into play, and the code did not quite work, so it decided to go back to the old-fashioned way of using a mouse and keyboard.

This time around, it did much better, avoiding the pricing error. Plus, as the game got more complicated, the system adjusted, eventually developing a quite complex strategy.

But then the remote desktop crashed again. This time, Claude tried many approaches to solving the problem of the broken desktop before giving up and, funnily enough, declaring victory (the last sentence is an amazing justification).

What does this mean?

You can see the power and weaknesses of the current state of agents from this example. On the powerful side, Claude was able to handle a real-world example of a game in the wild, develop a long-term strategy, and execute on it. It was flexible in the face of most errors, and persistent. It did clever things like A/B testing. And most importantly, it just did the work, operating for nearly an hour without interruption.

On the weak side, you can see the fragility of current agents. LLMs can end up chasing their own tails or being stubborn, and you could see both at work. Even more importantly, while the AI was quite robust to many forms of error, it took just one (getting pricing wrong) to send it down a path that wasted considerable time. Given that current agents aren’t fast or cheap, this is concerning. You can also see where shallowness might be an issue. I tried to use it to buy products on Amazon and found the process frustrating, as it did fairly simple and generic product research that did not match my tastes. I had it research stocks, and it did a good job of assembling a spreadsheet of financial data and giving recommendations, but they were based on fairly surface-level indicators, like P/E ratios. It was technically capable of helping, and did better than many human interns would, but it was not insightful enough for me to delegate these sorts of tasks. All of this is likely to improve, and there are use cases where the current level of agents is likely good enough: compiling frequent reports and analyses that require navigating across multiple sites and using bespoke software tools come to mind.

More broadly, this represents a huge shift in AI use. It was hard to use an agent as a co-intelligence, where I could add my own knowledge to make the system work better. The AI didn’t always check in regularly and could be hard to steer; it “wants” to be left alone to go and do the work. Guiding agents will require radically different approaches to prompting¹, and it will require learning what agents are best at.

AIs are breaking out of the chatbox and coming into our world. Even though there are still large gaps, I was surprised at how capable and flexible this system already is. Time will tell how soon, if ever, agents truly become generally useful, but, having used this new model, I increasingly think that agents are going to be a very big deal indeed.

¹ Anthropic sent me four prompting hints, which are worth sharing:

> 1. Try to limit the usage to simple well specified tasks with explicit instructions about the steps that the model needs to take.
>
> 2. The model sometimes assumes outcomes of actions without explicitly checking for them. To prevent that you can prompt it with “After each step take a screenshot and carefully evaluate if the right outcome was present. Explicitly show your thinking: "I have evaluated step X…". If not correct, try again. Only when you confirm the step was executed correctly move on to the next one.”
>
> 3. Some UI elements (like dropdowns) might be tricky for the model to manipulate using mouse movements. If you experience this try prompting the model to use keyboard shortcuts.
>
> 4. For repeatable tasks or UI interactions, include example screenshots and tool calls showing the model succeeding as part of your prompt prefix.
