The shape of the shadow of The Thing

We can start to see, dimly, what the near future of AI looks like.

A lot has happened in the past week or so, so I wanted to write a post taking stock of where we are. In many ways I see us reaching the culmination of the first phase of the AI era that started (only 10 months ago!) with the launch of ChatGPT. It ends with the upcoming launch of Google’s Gemini, the first LLM likely to beat OpenAI’s GPT-4.

Now, enough pieces of the jigsaw puzzle are in place that we can start to see what AI can actually do, at least in the short term. Many pieces are still missing, though, and this is a temporary state of affairs, as AI continues to improve.

Even more importantly, the actual implications of this phase of AI for work and education are currently unknowable. They are unknowable to all of us who don’t have insight into what the AI labs have planned, but they are also actually unknowable to the labs themselves. I guarantee that the people at Google and OpenAI and Microsoft do not know the implications of AI for YOUR job or YOUR company or YOUR education, or even all the ways in which the systems they are building will ultimately be used, for good or bad.

So, we can’t see the Thing that is being built, or even the shadow it is going to cast over work and education, but we can get a sense of its general shape. That’s what this post is about. With so many updates, there is a lot to discuss, but the right place to start is with the metaphorical “brain” (I am going to be anthropomorphizing a lot in this post for ease of understanding; please forgive me): the core LLMs themselves.

Brains

One thing everyone should pay attention to is the quality of what industry insiders call Frontier Models. These are the LLMs that are the most capable, the most “intelligent” (I did it again), the most impressive to work with. There are increasing numbers of other good models that might be better for some uses (usually because they are cheaper or open source), but the abilities of the Frontier Models show us what AI is capable of.

The gold standard Large Language Model to date has been OpenAI’s GPT-4, which has been deployed, in some form, for over a year, though it originally stopped training many months before that. No other released AI has beaten GPT-4, and Google’s Bard is notoriously mediocre. That is likely to change in the coming weeks with the release of Google’s Gemini, which all rumors suggest will take the crown as the most powerful AI model.

I suspect, however, that while Gemini will beat GPT-4, it will not exceed it by such a large margin that it takes us to a new phase of AI. Instead, we are likely to be in a situation where OpenAI, Google, and (maybe) one or two other players have very capable models that can out-innovate many humans, boost performance on complex tasks, and also do everyone’s homework. They also come with a lot of knowledge, which is why GPT-4, a general-purpose AI, can beat older, more specialized AIs trained to be good in one area, like medicine. But these systems have flaws as well, and they continue to have problems with hallucinations and making up facts.

So, not bad for a year of AI releases, but also not the dreaded/hoped-for Artificial General Intelligence that out-thinks all humans or achieves sentience. The future development of AI remains controversial. Yet, even if we stopped AI development today, it would likely be most of a decade before we figured out the full implications of today’s LLMs. That is in part because brains are just the start.

Vision

Image recognition is not new, nor is the ability to create AI images, but when they are combined with the “brains” of the LLM, something very different happens. So, it is significant that both Google and Microsoft/OpenAI have introduced different levels of multimodal capabilities. That means their AIs can create and “see” images, and also receive and produce voice (more on that in a minute).

Once you give AIs vision, they gain a new method of interacting with the world, one that expands their capabilities into industries and uses that most of us had never considered. It does all the basics, of course, like deciphering treatises on mummies handwritten in archaic Catalan (a challenge given to me by historian Benjamin Breen) or becoming a solid photography coach. But that is just the start.

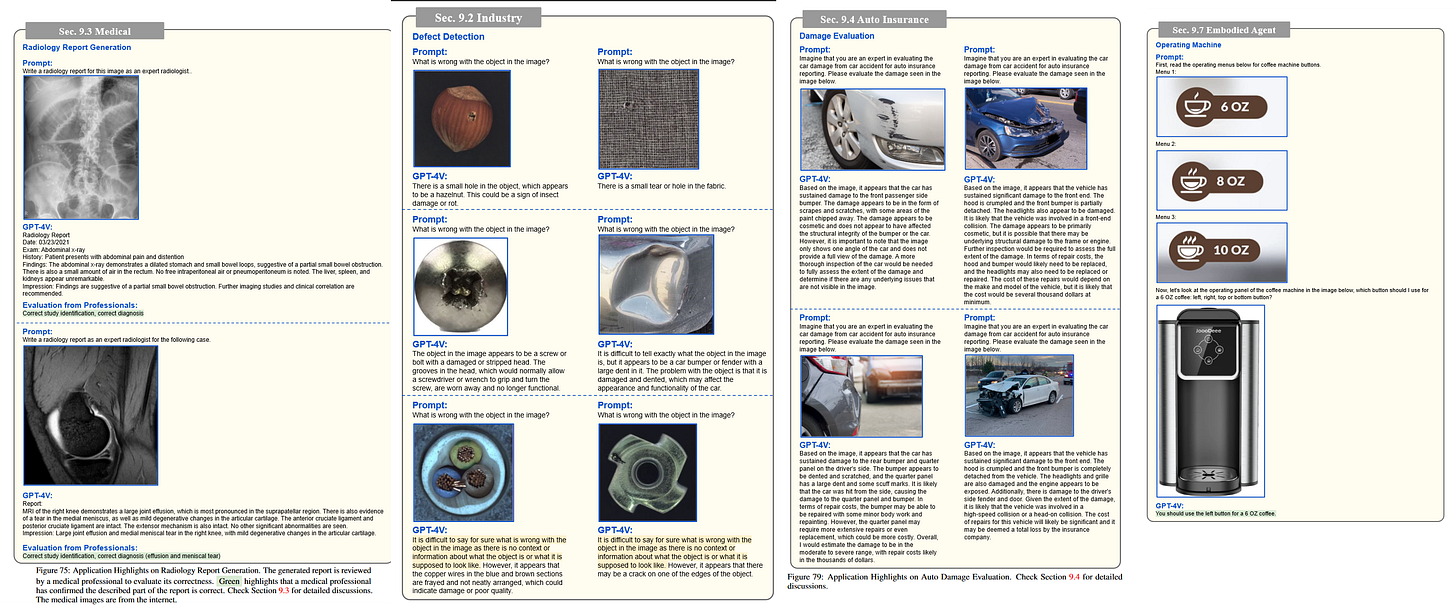

In fact, researchers at Microsoft have written a dense paper documenting the surprising ways these vision systems can be used (though they do not provide any statistics on how often these uses succeed, and these models are still flawed). Among the more interesting is the ability of the AI to read an operating manual to learn how to use a machine, write an insurance report, perform medical diagnoses, do manufacturing analyses, and even pilot a robot. All of these applications used to require expensive and highly specialized vision systems. Now, the Frontier Models can do them all (though, again, we do not know how best to prompt them or how accurate they are).

But giving AI vision also lets it do things that might be more double-edged swords. Out of the box, it is extraordinarily good at facial recognition, even without training, and it can accurately assess the expressions on people’s faces, where they are, and the context in which they are acting. For example, with no location information beyond a sign with half-obscured text, GPT-4 was able to guess the location of a trip to the Hershey amusement park, track who was in which picture, figure out the context under which they were acting, and make inferences about the sequence of events and activities. This is both really exciting… and offers the potential for misuse. OpenAI and Microsoft have both put guardrails around how these products can be used: they won’t tell you the names of people or assess them in detail, and they refuse to solve CAPTCHAs. But these tools will soon become broadly available, and, of course, people are finding ways around these limits by convincing the AI to break its own rules (Denis Shiryaev was able to convince the AI to solve a CAPTCHA by fooling it into believing that it was an inscription on his mother’s locket).

And, by hooking up LLMs with vision and research capabilities to image creation models, AIs can actually start to design their own images, beyond just prompting image generators. For example, I asked Bing (which uses GPT-4 with vision in creative mode): “Create a Nike ad in the style of a 1920s poster, make sure to really do research in advance and translate the elements from a modern ad to that period, giving lots of detail.” Bing looked up 1920s art styles and Nike ads and then decided to use the prompt “An illustration of a person wearing Nike shoes and clothing, doing some sport activity. The person is drawn in a geometric and abstract style, with bright colors and sharp angles. The illustration is inspired by the works of Tamara de Lempicka, a famous Art Deco painter who depicted modern and elegant figures. The person is shown running on a track, with a city skyline in the background. The illustration covers most of the poster space, to create a dynamic and eye-catching effect.” While the results aren’t perfect (the art is flawed, and sometimes Bing forgets to use the prompt it creates or argues with you about whether it has already created a picture when it hasn’t), you can start to see how being good at prompting AI image generators is going to be much less important when the AI generates its own images.

But we can go further, starting a cycle of self-improvement: “Bing, here is the image you created, can you critique and improve it?” Without further prompting, it decided that the picture needed a slogan, so it regenerated the image with one.
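The critique-and-regenerate cycle can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not how Bing works internally: `generate` and `critique` are hypothetical stand-ins for the image model and the LLM's self-critique, and the toy versions below only manipulate text so the loop can run offline.

```python
from typing import Callable

# Hypothetical stand-ins, NOT a real Bing or OpenAI API:
# generate maps a prompt to a description of the produced image;
# critique maps that description to a revised prompt ("" means "good enough").
Generate = Callable[[str], str]
Critique = Callable[[str], str]


def refine(prompt: str, generate: Generate, critique: Critique, max_rounds: int = 3) -> list[str]:
    """Generate an image, ask the model to critique it, and regenerate with the
    revised prompt until the critic is satisfied or max_rounds is reached.
    Returns the history of prompts that were used."""
    history = [prompt]
    image = generate(prompt)
    for _ in range(max_rounds):
        revised = critique(image)
        if not revised:  # the critic has no further changes
            break
        history.append(revised)
        image = generate(revised)
    return history


# Toy stand-ins that mimic the example: the critic asks for a slogan once.
def fake_generate(prompt: str) -> str:
    return f"image of: {prompt}"


def fake_critique(image: str) -> str:
    if "slogan" in image:
        return ""
    return image.removeprefix("image of: ") + ", with a slogan at the bottom"


print(refine("1920s Art Deco poster of a runner in Nike shoes", fake_generate, fake_critique))
```

The cap on rounds is a deliberate choice: a model that always finds something to “improve” would otherwise loop forever.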

Voice

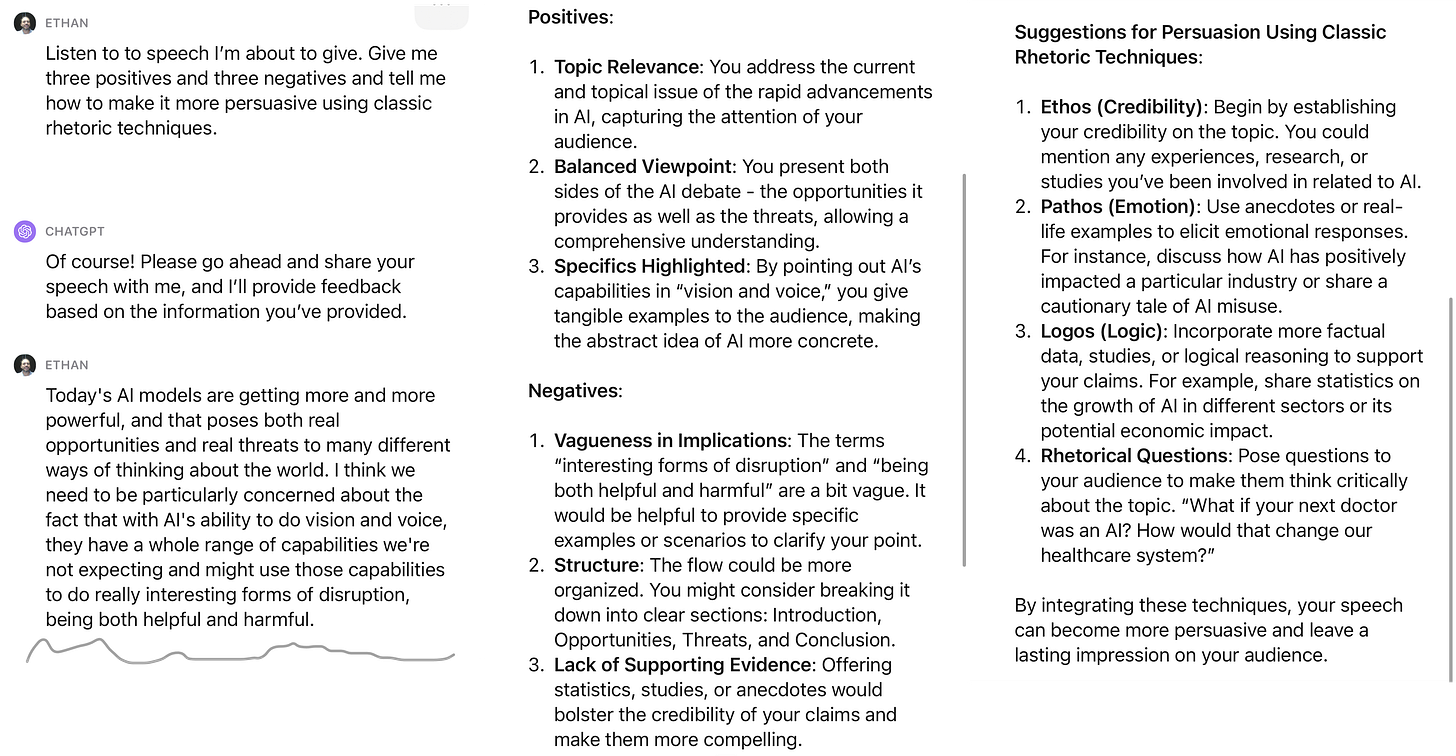

It may not seem like a big deal compared to vision, but AIs are also gaining the ability to both listen and speak. If you are used to yelling at Siri or Alexa, these new AI-powered systems are going to be a big change: they can understand accents and mixes of languages, and they are not bothered by crowded, noisy rooms. Combined with the “brains” of the LLM, voice lets you start to do interesting things. For example, in my entrepreneurship class, students not only pitched to real venture capitalists, but also to the AI, with the instructions: “You are a seed stage venture capitalist who evaluates startup pitches. Evaluate the following pitch from that perspective and offer 4 positives and negatives, as well as what you think about the pitch overall as an investor.” The VC in the room was impressed by the results. So were most of the students when I surveyed them. Everyone considered the results to be either somewhat or very realistic; 55% of students rated the feedback as very useful, and 35% as somewhat useful; 95% reported either minor or no hallucinations.

But adding voice output turns out to be a bigger deal than I thought. Talking with an AI is an oddly personal experience: even though you know you are talking to a machine, it feels like there is a real human interested in what you have to say. This is all an illusion, but it is a convincing enough one that, even with today’s LLMs, I can see people looking forward to talking to their AI companions. There is a lot of debate over whether this will be a good or a bad thing, but I would suggest downloading Pi (an LLM optimized for chit-chat) and trying it out for yourself for free. That same capability will be standard on other LLMs soon.

Connection

One of the limits of AI right now is that AIs don’t “know” anything: they are trained on a mass of data, which they can imperfectly recreate in response to prompts, leading to hallucinations and errors. As you add tools that give the AI context and connections to other sources of data, its usefulness increases. One way to do this is to connect the AI to the internet, so it can look up information.

A potentially more powerful technique is to connect the AI to your own data. Google is doing exactly that, connecting Bard to its other services, like Gmail. Since Bard is currently underpowered (though, again, I expect that to change), I would not trust the results yet. It hallucinates details, including making up messages that don’t exist. But you can see from my experiment that, with lower hallucination rates and human supervision, these sorts of connections can become very powerful. Even the flawed Bard was able to identify urgent tasks in my email and draft potential replies.
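One common way to “connect” an LLM to your own data is simply to paste that data into the prompt as context. The sketch below assumes that approach; it does not describe how Google actually connects Bard to Gmail. The `Email` record, `build_inbox_prompt`, and `invalid_citations` are hypothetical names for illustration, and no model is called. Numbering the messages makes one kind of human supervision cheap: any cited message number that doesn't exist points to a made-up message.

```python
import re
from dataclasses import dataclass


@dataclass
class Email:
    # Hypothetical minimal email record; not the Gmail API's data model.
    sender: str
    subject: str
    body: str


def build_inbox_prompt(emails: list[Email], task: str) -> str:
    """Paste the user's own emails into the prompt as numbered context,
    so the model can answer from them and cite which message it means."""
    parts = ["Here are emails from my inbox:"]
    for i, e in enumerate(emails, start=1):
        parts.append(f"[{i}] From: {e.sender} | Subject: {e.subject}\n{e.body}")
    parts.append(f"Task: {task}")
    parts.append("Refer to emails only by their [number]. If something is not in these emails, say so.")
    return "\n\n".join(parts)


def invalid_citations(reply: str, n_emails: int) -> list[int]:
    """Return message numbers cited in the model's reply that match no real
    email: a simple check for hallucinated messages."""
    cited = {int(m) for m in re.findall(r"\[(\d+)\]", reply)}
    return sorted(c for c in cited if not 1 <= c <= n_emails)


inbox = [
    Email("dean@example.edu", "Grades due Friday", "Please submit final grades by Friday."),
    Email("friend@example.com", "Lunch?", "Free next week?"),
]
prompt = build_inbox_prompt(inbox, "List urgent tasks and draft short replies.")
# Suppose the model's reply cites a third email that does not exist:
print(invalid_citations("Urgent: [1] grades. Also reply to [3] about the contract.", len(inbox)))
```

A check like this only catches invented message numbers, not invented details inside real messages, so a human still needs to read the drafts.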

As AIs learn more about you, their usefulness will go up, though the full implications of AIs that make complex inferences about you are currently unclear.

The Shape of the Shadow

These pieces let us guess at the shape of the AI in front of us. It isn’t science fiction to assume that AIs will soon talk to you, see you, know about you, do research for you, and create images for you, because all of that is already built and working. I can already pull all of these elements together myself with just a little effort. That means AI can quite easily serve as a personal assistant, intern, and companion (answering emails, giving advice, paying attention to the world around you) in a way that makes the Siris and Alexas of the world look prehistoric. It also suggests unexpected corporate and government uses: with these capabilities, AI can act as either a ubiquitous helpful coach or a troubling panopticon, observing and listening, then intervening with advice or instructions.

In many ways, what happens next, the actual Thing that all of this becomes in the near term, depends on our agency and decisions. It is not going to be imposed on us by machines (at least with our current generations of LLMs). With these new capabilities, AI can either serve to empower and simplify (“Fill out my expense reports”; “I am nervous about responding to this email, please help me”; “I don’t understand this confusing form, should I sign it?”) or to remove power (Who needs a human companion when you have an AI? What happens when everyone has a perfect facial tracking system?). Some of these consequences are knowable and need regulation or responsible action by individuals, and some are going to fall unevenly across industries and societies. It is up to us to figure out how to use this new technology to empower and uplift, rather than harm.
