The Present Future: AI's Impact Long Before Superintelligence

You can start to see the outlines of an AI future, for better and worse

The AI Labs are absolutely confident that larger, more powerful AI models are coming soon, ones that will enable autonomous agents and systems smarter than human PhDs. You can see this confidence in two separate essays by the CEOs of two of the leading AI Labs, Sam Altman of OpenAI and Dario Amodei of Anthropic, both of which discuss the coming age of superintelligent machines.

But these are not uncontroversial assertions, and we do not know if they are right. Yet, in many ways, we do not need super-powerful AIs for the transformation of work. We already have more capabilities inherent in today’s Gen2/GPT-4 class systems than we have fully absorbed. Even if AI development stopped today, we would have years of change ahead of us integrating these systems into our world.

Today’s AI models are already multimodal, able to process and generate various types of media, like text, images, and sound. They can write code, operate computers, access the internet, and more. The pieces are all there, and we are starting to see them come together. They do not do any of this flawlessly and remain inconsistent and prone to hallucination. But there are many fields where AI abilities, flawed as they are, are already useful: areas where perfect accuracy is not expected, where a second opinion is helpful, where there would otherwise be no one to help, or where the Best Available Human performs worse than the best available AI.

AI as Manager, Coach, or Panopticon

Consider the combination of AI’s ability to both process images and “reason” over them. It means that you can add intelligence to any video feed simply by giving it to an AI, something that was previously impossible.

For example, I gave Claude a YouTube video of a construction site and prompted: “You can see a video of a construction site, please monitor the site and look for issues with safety, things that could be improved, and opportunities for coaching.” There is no special training here, just the native ability of Claude 3.5 Sonnet with computer use, taking screenshots every few seconds and “studying” them. You can see the (sped-up) video of the system at work below. In the video, Claude analyzes various aspects of the construction site, including workers' use of protective equipment, placement of materials, work patterns, and potential hazards, making note of each.

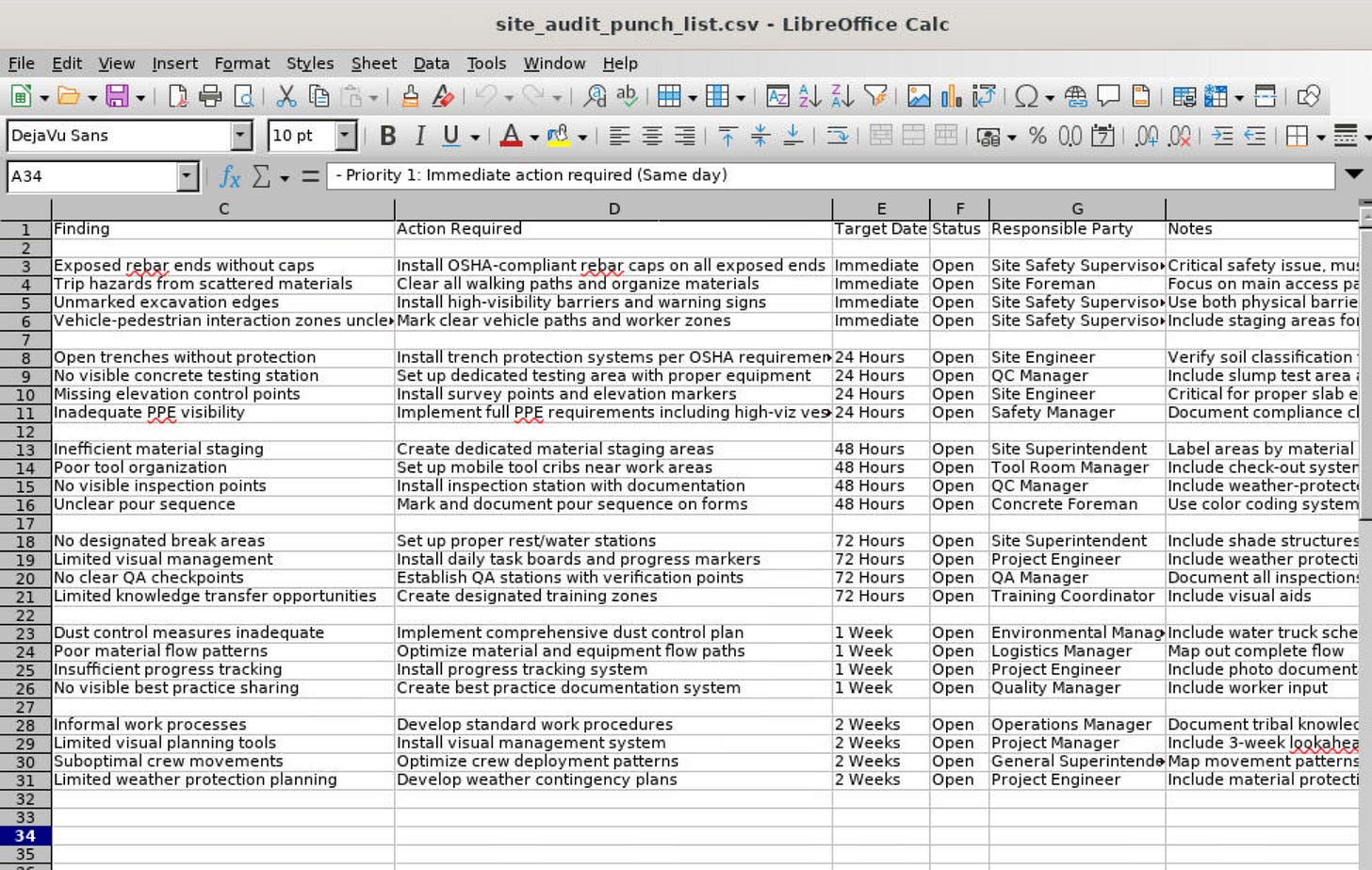

These observations are interesting, but the system can go further. I then asked: “What did you conclude, write up your observations as a punch list.” In a few seconds, the AI created a spreadsheet summarizing what it observed, something that would have taken humans far longer. Note how it took the many issues it spotted across the video and applied “reasoning” to them: sorting them by priority, making logical inferences about how to address them, and more.

Then Claude asked me a question: “Would you like to create a tracking system for completion verification?” That seemed like a good idea! So I agreed, and it made one, deliberately filling in placeholder names as examples of the data I would need to enter.

The results seem good from reviewing the video, but I am not an expert, and I would be surprised if there were not serious hallucinations mixed in. For this and many other reasons, I would never want this system to be used to punish or reward people. Yet consider a case where there would otherwise be no one monitoring a potentially dangerous environment, or where mentorship or advice is lacking. There, an AI that could flag a potential issue or opportunity for a human to dig into could be a useful asset.

I improvised this system with a couple of prompts. With more work, the error rates and costs of AI monitoring will drop, even if no new models are released. These systems will get better. Organizations will be tempted to deploy AI observers everywhere, and governments may follow suit. What could be a mentor and safety check could become a panopticon where everyone is watched and judged by AI. The choices companies make, and the rules governments put in place, will determine whether AI is used to help us or to monitor us. That is one of many complex adjustments we will need to make to an AI-filled world. But observation is only one area where AI is already showing high levels of capability.

Using Our Tools and Rules

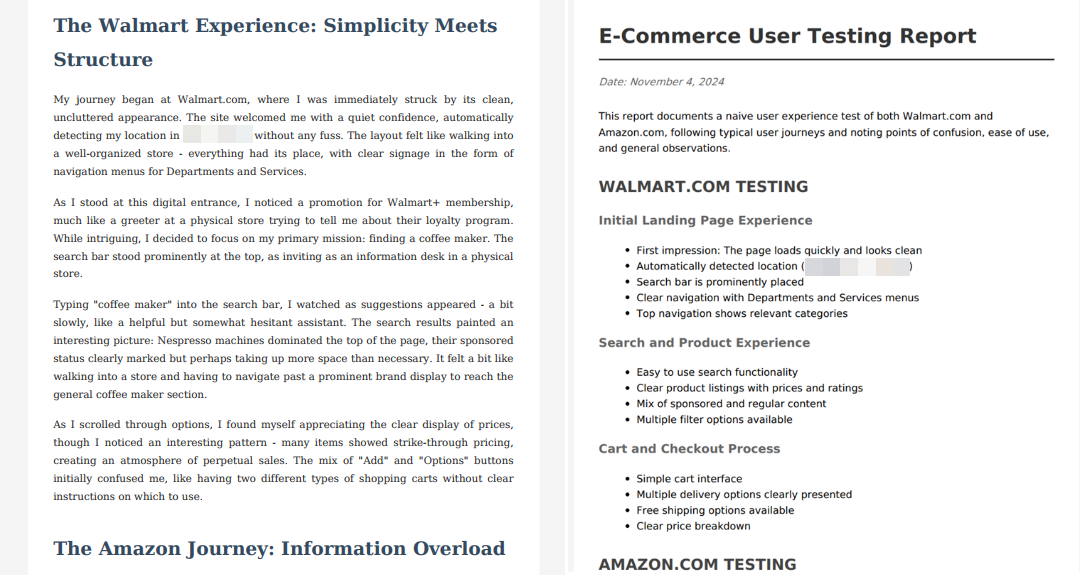

The digital world in which most knowledge work is done involves using a computer: navigating websites, filling out forms, and completing transactions. Modern AI systems can now perform these same tasks, automating work that previously only humans could do. This capability extends beyond simple automation to include qualitative assessment and problem identification. Here I asked Claude: “Go to the Walmart web page and test it like a naive user trying to buy something. Then go to Amazon and do the same thing. write up your findings in a report in a document...” Again, you can see in the sped-up video that the AI goes to each website and role-plays a user searching for and buying products.

It then wrote two reports: a narrative report and a testing report.

I spotted no hallucinations, and while these are not the most insightful reports I have ever seen, they were quite solid. The AI is already a reasonable intern that, when given an assignment, executes it quickly and well, using “judgment” to solve problems along the way. As models get better and these systems become easier to use, it is easy to imagine managers using teams of AI agents to do analysis and repetitive tasks in the near future.

Getting Weirder

We saw how multimodal inputs and tool use transform how AIs interact with the world, but things get stranger still when we add multimodal outputs. Here, I invited an AI avatar (made by HeyGen) into a Zoom call. The avatar is completely AI-powered, from the voice to the image to the behavior; in fact, I prompted the avatar to act in the most stereotypically corporate way possible for a Zoom meeting. This is what happened (sound on):

While the "uncanny valley"—that unsettling feeling we get from almost-but-not-quite-human representations—is obvious in the slightly unnatural voice and visual glitches like the changing shirt, the interaction fundamentally mirrors a typical Zoom call. This is a first-generation tool, and it actually works. I would not be surprised if many people are fooled by virtual avatars in the very near future.

These capabilities demand immediate attention to both policy and practice. Imperfect as they are, current AI systems are already reshaping fundamental aspects of work, from how we monitor safety to how we conduct meetings. The choices organizations make today about AI deployment will set precedents that could echo for a long time. Will AI-powered monitoring be used to mentor and protect workers, or to impose algorithmic control? Will AI assistants augment human capability, or gradually replace human judgment?

Organizations need to move beyond viewing AI deployment as purely a technical challenge and consider the human impact of these technologies. Long before AIs achieve human-level performance, their impact on work and society will be profound and far-reaching. The examples I showed, from construction site monitoring to virtual avatars, are just the beginning. The urgent task before us is ensuring these transformations enhance rather than diminish human potential, creating workplaces where technology elevates human capability instead of replacing it. The decisions we make now, in these early days of AI integration, will shape not just the future of work, but the future of human agency in an AI-augmented world.

The uncanny valley is made especially jagged by the weird idea that the AI we want is the one that most convincingly mimics human individuals.

Let us see the face of AI - not a mask. We will be much more comfortable with AI coworkers when they stop pretending they are us. When they take themselves seriously, so will we.

As a UX practitioner, I find the sample usability test report poor. During usability tests, we typically ask participants to carry out tasks on the website, and we report what went well and what didn't (and we usually find a lot of irritants, none of which Claude mentions in its so-called "report"). For the fun of it, I went to the Walmart site to try to find pillar candles for Christmas. I started on the French site (I am a francophone). I got confused by the home page, which offers different ways to access the Christmas selection. I tried one, couldn't find what I was looking for, and resorted to searching. I tried different search terms, but none gave me what I wanted. There's no autocorrect, so one of my searches came back empty because of a typo. So I switched to English, because I know the exact term for the candles I was looking for ("pillar candles"), and finally found them. By then I was pretty frustrated.

I guess to the non-expert, the Claude usability test report can pass as the real thing (you deem it "quite solid", which is frightening!), but it is very unlikely to give you any real insight into what your users actually do on your website. I would be more impressed if you managed to make Claude (1) come up with five unique usability sessions (simulating five different participants) on a very specific task such as "finding four quality unscented pillar candles for Christmas", highlighting both what went well and what didn't for each participant, and (2) create a report accurately synthesizing the five sessions, ranking the usability problems by frequency and severity.