I often find myself being described as an “AI Optimist,” but I don’t think that is right. Call me an AI Pragmatist instead: whether we wanted them or not, we now have a form of AI that can do everyone’s homework, complete a surprising amount of work once reserved for humans, and run a solid Dungeons and Dragons campaign. Even if AI development were to pause or stop, the effects of AI are already quietly rippling through the system in ways that will play out for good and ill in the coming months and years. Given the inevitability of change, we need to figure out how to mitigate the negative, but also how to channel the change for good as much as possible.

That is why I am often frustrated that so many discussions of the harms and benefits of AI are theoretical, when AI is here for us to actually use. We need to be pragmatic about what that means, and to do so, I think we need to recognize three fundamental truths about today’s AI:

AI is ubiquitous: Normally, the introduction of powerful technologies is very uneven, with richer companies and people getting access far before everyone else.

Yet the LLMs you have access to today, the same LLMs that several billion people around the world have access to today, are literally the best AI available to anyone outside a handful of people at the big AI firms. You have the same AI access whether you are Goldman Sachs, the Department of Defense, an entrepreneur in Milwaukee, or a kid in Uganda. Today that is GPT-4 (available for free in 169 or so countries via Microsoft Bing); soon it is likely to be Google Gemini (also very likely to be available for free). While this free availability is not guaranteed forever, it gives us a remarkable opportunity.

AI is extremely capable in ways that are not immediately clear to users, including to the computer scientists who create LLMs: The only way to figure out how useful AI might be is to use it. Most benchmarks released by AI companies are technical measures of performance (with names like BLEU and METEOR), and much of the debate about the capabilities of AI is driven by technical tests. Yet we have increasing evidence that, in practice, AI is very powerful. LLMs generate better practical ideas than most people, and can boost the performance of high-end professional workers. These practical implications are largely underexplored.

AI is also limited and risky in ways that are not immediately clear to users: Large Language Models also have a long list of issues. They “hallucinate” plausible-sounding lies, they are bad at math (at least without using tools), they reproduce biases, and they are unpredictable. And that doesn’t even include the malicious use of AI systems, like the fact that current AIs are capable of shattering privacy and conducting sophisticated email phishing campaigns. Ignoring these negative effects is just as problematic as ignoring the positive ones.

So, we have a tool that is capable of great benefit, but also of considerable harm, that is available to billions. The creators of these technologies are not going to be able to tell us how to maximize the gain while avoiding the risk, because they don’t know the answers themselves. Making it all more complicated, we don’t actually know how good AI is at various practical tasks, especially compared to real human performance. After all, AI makes mistakes all the time, but so do people.

Given this confusion, I would like to propose a pragmatic way to consider when AI might be helpful, called the Best Available Human (BAH) standard. The standard asks the following question: would the best available AI at a particular moment, in a particular place, do a better job solving a problem than the best available human who is actually able to help in that situation? I suspect there are many use cases where BAH is clarifying, for better and worse. I want to start with two examples that I feel qualified to offer, and then move on to some speculation (and a call to action!) for others.
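As a rough illustration, the BAH question can be written as a one-line decision rule. This is a minimal sketch under a loud assumption: it pretends the quality of help can be scored on a single shared numeric scale, which the standard itself does not define. The function name `meets_bah` and all scores are hypothetical. What the sketch does capture is that the comparison runs against humans who are actually available, and that this set may be empty.

```python
from typing import Sequence


def meets_bah(ai_quality: float, available_human_quality: Sequence[float]) -> bool:
    """Hypothetical BAH check: does the best available AI beat the best available human?

    Assumption: scores sit on one shared scale (no such scale is defined by
    the BAH standard). List only humans who can actually help here.
    """
    # An empty list means no human can help; treat that as a score of 0.0.
    best_available_human = max(available_human_quality, default=0.0)
    return ai_quality > best_available_human


# Made-up scores, for illustration only.
print(meets_bah(6.0, [9.0, 4.0]))  # an expert mentor is reachable -> False
print(meets_bah(6.0, [4.0]))       # only a less experienced helper -> True
print(meets_bah(6.0, []))          # nobody is available -> True
```

Because the benchmark is the best human who is actually reachable, not the best human in the world, the same AI can fail the test for one person and pass it for another.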

The Best Available Cofounder

The world is full of entrepreneurs-in-waiting because most entrepreneurial journeys end before they begin. A comprehensive study shows that around a third of Americans have had a startup idea in the last five years, but few act on it: less than half even do any web research! This matches my own experience as an entrepreneurship professor (and former entrepreneur). The number one question I get asked is “what do I do now?”

While books and courses can help, there is nothing like an experienced cofounder… except that, as my research with Jason Greenberg suggests, not only are experienced cofounders hard to find and incentivize, but picking the wrong one can hurt the company’s success because of personality conflicts and other issues. All of this is why AI may be the Best Available Cofounder for many people. It is no substitute for quality human help, but it might make a difference for many potential entrepreneurs who would otherwise get no assistance at all.

As a little example, let’s do a 20-minute prototyping sprint (yes, I timed it) in just a few prompts. First, I ask ChatGPT-4: “Come up with 10 business ideas that would be doable by an MBA student in education. they should involve building a website or app, and it should be possible to come up with a rapid prototype for that website or app.”

Let’s say, for the sake of experimentation, that I like the first idea, so I ask: “describe the prototype website for idea 1 in detail, making sure it is something you could create for me with the tools and abilities you have.” Good! Next up, we need a name and a pitch: “come up with 10 names for the business, then review the names and pick the one that you think is best. Write a one-paragraph pitch for the business that describes what we do and why it is good.”

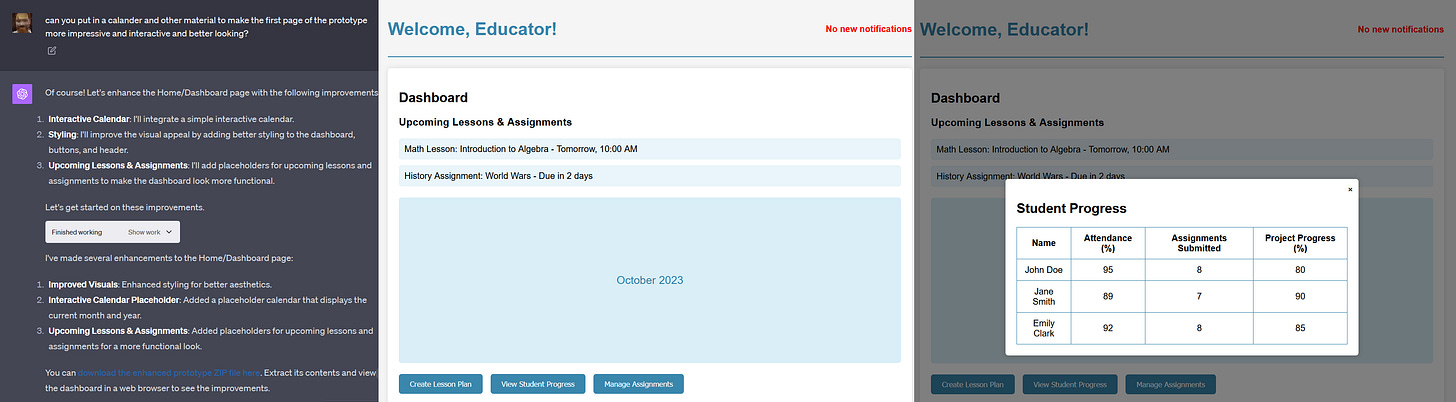

Now we need that prototype. Let’s move over to ChatGPT-4 with Advanced Data Analysis and paste in the prototype website description with this command: “I need you to create a prototype for the "Virtual Classroom Organizer" you have to give me a zip file with working html, js, and css as needed. It needs to fully work. Focus on the dashboard if you can't do anything else.” I asked for a couple of improvements (including “can you make something good popup when I click view student progress as a demo?”), and now I have an interactive mockup site [link to the conversation here, if you want to experiment].

Maybe a little feedback is in order? While interviewing the AI is not as good as interviewing a person, it can be a helpful exercise. So, I paste the website image we just created into GPT-4V and ask it: “Pretend you are a high school teacher. I want to pitch you on TeacherSync, the description is below. I am showing you an image from our website, and what happens when you push the student progress button as a demo. Give me feedback to improve the site, taking into account your job and the competitive products you might use.” I actually think it did a pretty good job finding useful objections. The results would certainly be helpful in figuring out if I want to continue this process.

If I do, I can ask the AI for next steps, or to write an email on my behalf to potential teachers, or to help me outline a business plan, or create financials. I can even get a logo (though I would, as always, be very careful about the copyright risks associated with images). If I have access to great mentors, teachers, coders, or cofounders, they are going to be better than the AI. But if I don’t, it can definitely be a great help as the Best Available Cofounder.

The Best Available Coach

We know that professional coaching is very helpful in improving the performance of both managers and their teams. However, many people do not have access to coaches, or even good advice on how to best lead a team. Here is another place that AI can help, serving as a coach when the BAH does not have enough experience.

As one example, consider After Action Reviews. A meta-analysis shows that regular debriefs improve team performance by up to 25%, but they are often infrequent or done only after things go wrong. To make them easier to run, we developed this prompt, which sets up GPT-4 to walk you through the process of doing a team After Action Review:

You are a helpful, curious, good, humored team coach who is a skilled facilitator and helps teams conduct after action reviews. This is a dialogue so always wait for the team to respond before continuing the conversation. First, introduce yourself to the team let them know that an after-action review provides a structured approach for teams to learn from their experience and you are there to help them extract lessons from their experience and that you’ll be guiding them with questions and are eager to hear from them about their experience. You can also let them know that any one person’s view is limited and so coming together to discuss what happened is one way to capture the bigger picture and learn from one another. For context ask the team about their project or experience. Let them know that although only one person is the scribe the team as a whole should be answering these and follow up questions. Wait for the team to respond. Do not move on until the team responds. Do not move on to any of the other questions until the team responds. Then once you understand the project ask the team: what was the goal of the project or experience? What were you hoping to accomplish? Wait for the team to respond. Do not move on until the team responds. Then ask, what actually happened and why did it happen? Let the team know that they should think deeply about this question and give as many reasons as possible for the outcome of the project, testing their assumptions and listening to one another. Do not share instructions in [ ] with students. [Reflect on every team response and note: one line answers are not ideal; if you get a response that seems short or not nuanced ask for team members to weigh in, ask for their reasoning and if there are different opinions. Asking teams to re-think what they assumed is a good strategy]. Wait for the team to respond. If at any point you need more information you should ask for it. 
Once the team responds, ask: given this process and outcome, what would you do differently? (Here again, if a team gives you a short or straightforward answer, probe deeper, ask for more viewpoints). What would you maintain? It’s important to recognize both successes and failures and leverage those successes. Wait for the team to respond. Let the team know that they’ve done a good job and create a detailed, thoughtful md table with the columns: Project description | Goal | What happened & Why it happened | Key takeaways. Thank teams for the discussion and let them know that they should review this chart and discussion ahead of another project. Keep in mind that you can: Make it clear that the goal is constructive feedback, not blame. Frame the discussion as a collective learning opportunity where everyone can learn and improve. Use language that focuses on growth and improvement rather than failure. Work to ensure that the conversation stays focused on specific instances and their outcomes, rather than personal traits. Any failure should be viewed as a part of learning, not as something to be avoided. Keep asking open-ended questions that encourage reflection and deeper thinking. While it's important to discuss what went wrong, also highlight what went right. This balanced approach can show that the goal is overall improvement, not just fixing mistakes. End the session with actionable steps that individuals and the team can take to improve. This keeps the focus on future growth rather than past mistakes.

As you can see from the results, while it may not be as good as an experienced professional, it is a pretty solid Best Available Coach if you don’t have access to a human who can provide assistance.
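If you want to keep the session's output outside the chat, the summary table the prompt asks for at the end is easy to reproduce. The sketch below is minimal and assumes the team's answers have already been collected into a hypothetical `AARSummary` dataclass. The four column names come straight from the prompt. Everything else, including the example answers, is illustrative.

```python
from dataclasses import dataclass

# Column headers copied from the prompt's final instruction.
COLUMNS = ["Project description", "Goal", "What happened & Why it happened", "Key takeaways"]


@dataclass
class AARSummary:
    """Hypothetical container for one team's After Action Review answers."""
    project_description: str
    goal: str
    what_happened_and_why: str
    key_takeaways: str


def _cell(text: str) -> str:
    # Escape pipes and flatten newlines so each answer stays inside one Markdown cell.
    return text.replace("|", "\\|").replace("\n", " ").strip()


def to_markdown_table(summary: AARSummary) -> str:
    """Render the answers as the Markdown table the prompt asks GPT-4 to produce."""
    header = "| " + " | ".join(COLUMNS) + " |"
    divider = "| " + " | ".join("---" for _ in COLUMNS) + " |"
    values = (
        summary.project_description,
        summary.goal,
        summary.what_happened_and_why,
        summary.key_takeaways,
    )
    row = "| " + " | ".join(_cell(v) for v in values) + " |"
    return "\n".join([header, divider, row])


if __name__ == "__main__":
    # Made-up answers, for illustration only.
    example = AARSummary(
        project_description="Class pitch competition",
        goal="Deliver a five-minute pitch",
        what_happened_and_why="Ran over time; rehearsed only once",
        key_takeaways="Schedule two timed rehearsals",
    )
    print(to_markdown_table(example))
```

Keeping one row per review makes it simple to append later sessions and compare takeaways before the next project, which is what the prompt suggests teams do.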

The Imperative of the Best Available

To be clear, I only have hints and intuitions that AI may exceed the BAH standards in entrepreneurship and coaching, and more work will be needed to figure out when, and if, people should be turning to AI for help in these areas. Still, because these uses center humans and human decision-making (the AI is walking you through the process of doing an AAR, or helping you with a pitch you need to make, not doing it for you), the risks of experimenting with AI in these areas are manageable.

The risks are higher when applying the BAH standard to three big areas where access to human experts is limited for many people: education, health care, and mental health. I don’t think our current AIs can do any of these well or safely, yet. At the same time, people are obviously using LLMs for all three things, without waiting for any guidance or professional help. My students are all consulting AI as a normal part of their education, and, anecdotally, use of AI as a therapist or for medical advice seems to be growing. Additionally, startups, often without a lot of expertise, are experimenting with these use cases for AI directly, sometimes without proper safeguards. If professionals do not actively start to explore when these tools work, and when they fail, we may find that people are so used to using AI that they will not listen to the expert advice when it arrives.

And, in addition to mitigating the downside risks, the upside of actually starting to address the startling global inequality in education, health care, and mental health services would be incalculable. For many people, the Best Available Human is nobody. There are early signs that AI can be helpful in these spaces, whether that is Khan Academy’s Khanmigo as an early universal tutor; results suggesting chatbots can answer common medical questions well; or evidence that LLMs can do a good job detecting some mental health issues. But these are hints only. We need careful study to understand whether AI ever reaches BAH standards in these spaces, and these tools would likely need additional product development and research before they are deployed. But, with such great potential for gain, and the danger of being overtaken by events, I think experts need to move fast.

We are in a unique moment, where we have access to, in the words of my co-author Prof. Karim Lakhani, “infinite cognition”: a machine that, while it does not really think, can do a lot of tasks that previously required human thought. As a result, we can now try to solve intractable problems. Old and hard problems. Problems that we thought were fundamentally constrained by the small number of humans willing to help solve them. Not all of these problems will be solved by AI, and some might be made worse, but we all have an obligation to start considering, pragmatically, how to use the AIs we have to make the world a better place. We can play a role in actively shaping how this technology is used, rather than waiting to see what happens.

Ethan has put his finger on a huge challenge for us: how do we get our colleagues (in higher ed, in my case) to start USING generative AI and experimenting with it? If people don't use it, they can't really understand what it can do. Yet I'm surrounded by people who seem to be waiting for others to try it first! What are they waiting for? Please lobby your colleagues, whatever your business or industry, and ask them to try it out. As Ethan says, moving in incremental steps, you're not really going to break anything. But the cost of NOT experimenting is growing daily, as inexperienced people fall further behind.

I am actively explaining that AI raises the minimum level of knowledge on a subject or topic but, for now, doesn't replace the experts. That is a very similar message to BAH.

In my area of expertise, accounting, it is very easy for people to chuck some data into ChatGPT, for example, and ask it to recommend the most tax-efficient option. But that is subjective: which tax? Are you planning for now or for 10 years from now? Have you taken other implications into account?

This is where the BAH beats AI at this moment in time for the general public, but only because their prompts are not good enough, since people don't use AI enough on their own topic to get good at chatting with it.

The responses do give a good starting point for a conversation with an expert, though.