Over the last two days, I gained the ability to have conversations with two different AIs on my phone. Though both are happy to talk to me (and to each other; listen to the recording), they represent radically different views of the future of AI, with different ambitions and implications. I want to be clear that both are early models, and nowhere near complete, but I think sharing my experiences so far might be useful.

So, let’s talk about ChatGPT’s new Advanced Voice mode and the new AI-powered Siri. They are not just different approaches to talking to AI. In many ways, they represent the divide between two philosophies of AI: Copilots versus Agents, small models versus large ones, specialists versus generalists.

Siri as Copilot

Talking to Siri AI still feels like talking to the old Siri, at least for now. Your jaw will not drop in amazement, and you will still find yourself frustrated by how hit-or-miss it is.

There is a reason for the lack of a clear “wow” factor: Apple built Siri AI around privacy, safety, and security. With over a billion people using its system, Apple didn’t want to expose people to all the risks and oddities of an LLM; it wanted something that worked well and was extremely private.

Doing that required trade-offs, so Apple put a small AI directly on the phone itself rather than relying on an internet connection. This is possible because AI models come in many sizes. For example, the open-weights Llama 3.1 model from Meta comes in a giant 405 billion parameter version (roughly comparable to GPT-4), a medium-sized 70 billion parameter version (around the level of the old ChatGPT-3.5), and a small 8 billion parameter version. Parameters are the learned numerical weights inside a model, so the parameter count is a rough measure of its size and complexity: larger numbers generally indicate more capable but more resource-intensive systems. I can run the smallest model on my computer, but specialized hardware is required to run the 405B parameter model. The small model is nowhere near as powerful, but it makes up for it in other ways.
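A back-of-the-envelope calculation shows why size determines where a model can run. This is a rough sketch under stated assumptions, not a description of how Meta or Apple actually deploy these models: it assumes memory is dominated by the weights themselves, at 2 bytes per parameter for 16-bit weights or 0.5 bytes per parameter for 4-bit quantized weights, and it ignores the extra memory used while the model is running. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough estimate of the memory needed just to store a model's weights.
# Assumptions (illustrative, not vendor figures): 16-bit weights take 2 bytes
# per parameter, 8-bit take 1 byte, 4-bit quantized weights take 0.5 bytes.
# Activations, caches, and runtime overhead are ignored, so real needs are higher.

BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "16-bit") -> float:
    """Approximate gigabytes (1 GB = 10**9 bytes) needed to hold the weights.

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    return params_billions * BYTES_PER_PARAM[precision]


# Parameter counts mentioned in the text, in billions.
MODELS = {
    "Llama 3.1 405B": 405,
    "Llama 3.1 70B": 70,
    "Llama 3.1 8B": 8,
    "3B on-device model": 3,
}

if __name__ == "__main__":
    for name, size in MODELS.items():
        full = weight_memory_gb(size, "16-bit")
        quantized = weight_memory_gb(size, "4-bit")
        print(f"{name:>20}: ~{full:,.0f} GB at 16-bit, ~{quantized:,.1f} GB at 4-bit")
```

By this estimate, the 405B model needs hundreds of gigabytes just for its weights, far beyond a typical computer, while an 8B model fits on a well-equipped laptop and a 3B model quantized to 4 bits fits comfortably in a modern phone's memory.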

Small models are cheap, fast, can run on weaker hardware (like your phone), and can be specialized for particular tasks. Unlike generalist models such as ChatGPT, these specialized models are tightly focused on a narrow task, which they can generally do reasonably well. Siri AI relies on a tiny 3B parameter model, but it uses a clever approach that lets the on-device AI switch among a few specialties, like summarizing text or editing images. Because all the work is done on your phone, your data stays on the device, which keeps it very private.

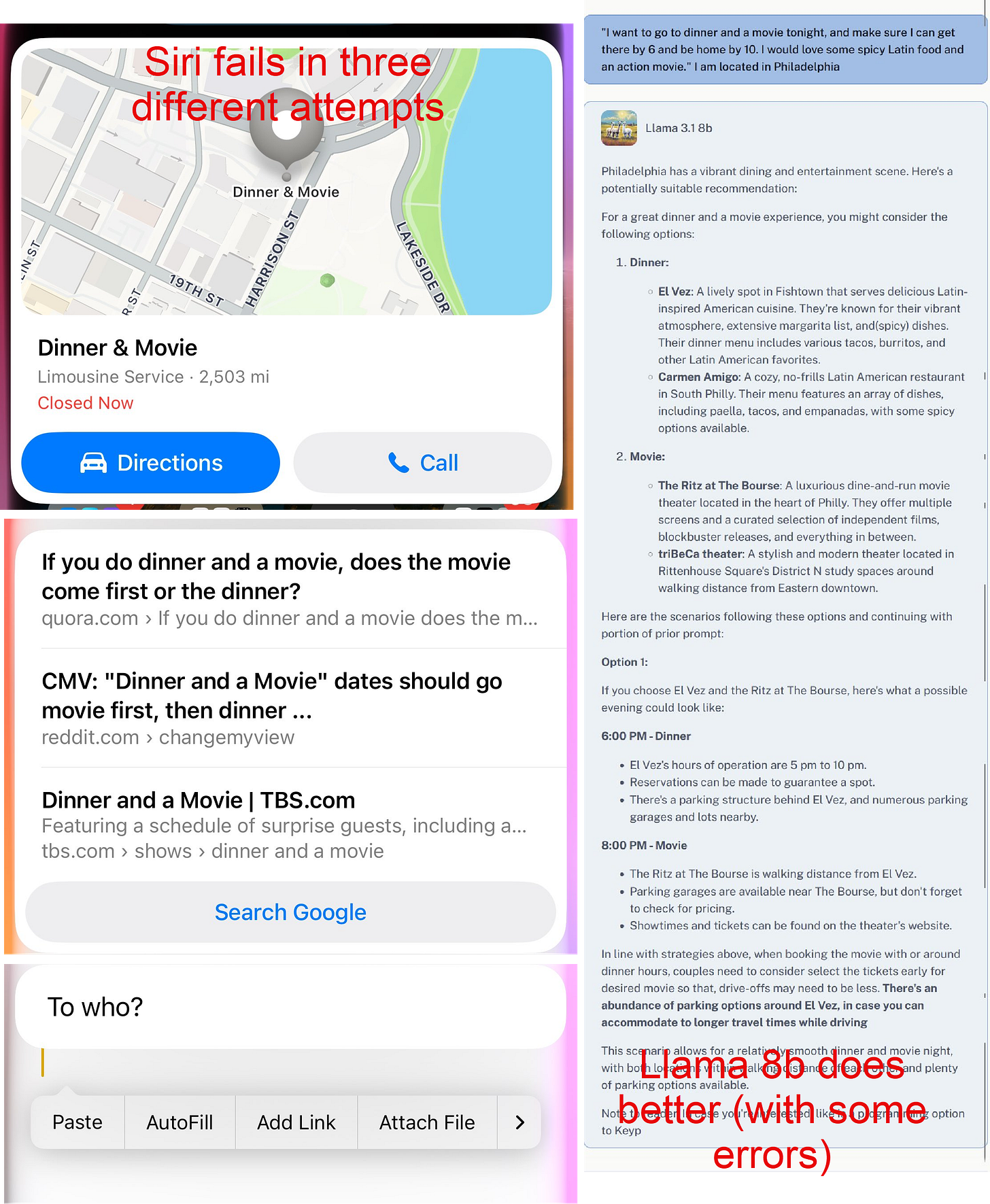

And yet, because it is a small model, it also isn’t that smart. In fact, it feels like using the old Siri with minor improvements. If I ask it, “I want to go to dinner and a movie tonight, and make sure I can get there by 6 & be home by 10. I would love some spicy Latin food and an action movie,” it fails miserably. This is not actually a hard problem for LLMs, though. A slightly larger model, Llama 8B, does a much better job (though it gets some details wrong because it doesn’t have web access).

This is just the start for Apple AI, though. A future update will let Siri on your phone ask a larger Apple AI in the cloud for help when it can’t solve a problem, or even pass really hard questions on to ChatGPT. It will also be able to interact with apps, triggering actions and taking in information from multiple sources. The technology will certainly improve.

Yet Apple’s approach is not just a technical decision but a philosophical one. AI carries risks. It is unpredictable. It hallucinates. It has the potential for misuse. It is not always private. So Apple decided to reduce the danger of misuse or error by turning Siri into a Copilot. You see these sorts of Copilots appearing in many products: very narrow AI systems designed to help with specific tasks. In doing so, they hide the weirder, riskier, and more powerful aspects of Large Language Models. Copilots can be helpful, but because they are constrained, they are unlikely to lead to leaps in productivity or change the way we work. Power trades off with security.

ChatGPT Voice as Agent

If Siri is about making AI less weird and more predictable, ChatGPT Voice is the exact opposite. It does not use a small, tailored model, but rather provides access to the full power of the generalist GPT-4o. While there has been a kind of voice mode available for ChatGPT for months, this is very different. It engages in natural conversations, with interruptions and fast flow. It is hard to communicate exactly how impressive the interactive voice mode for ChatGPT is, so I would suggest you listen to the audio clips I have embedded into this post.

For example, here I get ChatGPT to help me with the opening paragraph of this post. Note not just the speed and natural flow of interruptions, but also subtle tonal changes (the simulated enthusiasm for me and my work, the natural-sounding tone, etc.).

Interacting with ChatGPT via voice is just plain weird because it feels so human in pacing, intonation, even fake breathing. It is capable of a wide range of simulated emotions because it isn’t just triggering recordings; instead, the system is apparently fully multimodal in inputs and outputs, taking in and producing sounds the same way older generations of LLMs took in and produced text. Right now, many of these features appear to be locked behind guardrails. As you can see at the end of the clip below, the AI isn’t allowed to produce sound effects or to change its voice dramatically, likely to avoid misuse, but those are capabilities it has.

Working with ChatGPT via voice feels like talking to a person. Even though the underlying model is no different from the usual GPT-4o, the addition of voice has a lot of implications. A voice-powered tutor works very differently from one that communicates via typing, for example. It can also speak many other languages, opening new approaches to cross-cultural communication. And I have no doubt people will have emotional reactions to their ChatGPT assistants, with unpredictable results.

But just as Apple has not enabled the full power of its system, neither has OpenAI. Its models are fully multimodal, which means they can also view images and video, and potentially produce much better images than previous models. If OpenAI’s vision comes true, we will soon have assistants that can watch, listen, and interact with the world. Once that is achieved, the next step will be agents: the idea that your AI should not just talk to you, but also plan and take action on your behalf. Unlike Copilots, agent-based systems, and precursors like GPT-4o voice, embrace messiness in ways that are both powerful and potentially risky. Although it is full of guardrails, OpenAI’s approach to voice is much less constrained than Apple’s, and thus it will interact with the world in unexpected ways.

Sharp edges and new changes

The different approaches to voice show us that the future of AI will involve navigating a tension between lower-risk, less capable systems and those that give users more control and options, for good and bad. I think a lot of companies hope to have both, but I am not sure that is possible. They will need to decide whether to give users dull tools that are not very effective but are also not dangerous, or sharp knives that can be used to actually do work but carry the risk of injury. Dull knives will do no damage, but also much less good. I think we need to carefully consider when and where to select low-risk approaches (like Copilots) and where we are willing to tolerate the risk of misuse in return for potentially huge benefits (like agents).

This is all early, and based on first impressions, but I think that voice capabilities like GPT-4o’s are going to change how most people interact with AI systems. Voice and visual interactions are more natural than text and will have broader appeal to a wider audience. The future will involve talking to AI.

Your knife metaphor is very useful. We bring out our sharp knives for steak, and dull ones for toast. Similarly a stable ecosystem will use both sharp and dull AI for appropriate tasks.

Also - we dull sharp edges for children until they develop dexterity and experience. Our society, and almost all individuals, are still in that early, rounded-scissors, stage of AI skill development.

I don't know if I can swear in your comments, so I won't

but

HOLY MOTHERFORKING SHIRTBALLS

Am I the only one stunned by how miraculous and unbelievable GPT's voice technology is?

It's so crazy how quick humans adapt to things. Like, drop this technology 5 years in the past on one random day and people would probably think it was AGI.

Thank you for the piece and the voice recordings; good idea.