I always thought reading science fiction would prepare us for the future, but fantasy novels might be better suited to understanding our suddenly AI-haunted world.

There are now advanced and useful systems whose exact operations remain mysterious, even to those who built them. While one can understand the technical aspects of building Large Language Models like ChatGPT, the precise capabilities and limitations of these AIs are often only uncovered through trial and error. And having both conducted academic studies and developed magic spells for fictional fantasy games, I find that working with ChatGPT often feels more like the latter.

Consider that ChatGPT outputs are created by prompts invoking webs of tenuous connections, basically programs in prose form. And since the way that the AI makes these connections is not always clear, creating good prompts requires exploring the AI with language (here are some hints to get started in your explorations). Once discovered, new invocations are often kept secret to gain an advantage in a job, or else shared online through secretive chat groups. But the magic analogy goes beyond secret knowledge and esoteric schools: There are certain words that ChatGPT cannot seem to remember or understand (ask it what Skydragon means). There are glyphs that other AIs cannot see. Still other AIs seem to have invented their own languages by which you can invoke them. You can bind autonomous vehicles in circles of salt. As science fiction author Arthur C. Clarke’s Third Law states: “Any sufficiently advanced technology is indistinguishable from magic.”

AI isn’t magic, of course, but what this weirdness practically means is that these new tools, which are trained on vast swathes of humanity’s cultural heritage, can often best be wielded by people who have a knowledge of that heritage. To get the AI to do unique things, you need to understand parts of culture more deeply than everyone else using the same AI systems. So now, in many ways, humanities majors can produce some of the most interesting “code.”

Invoking Images

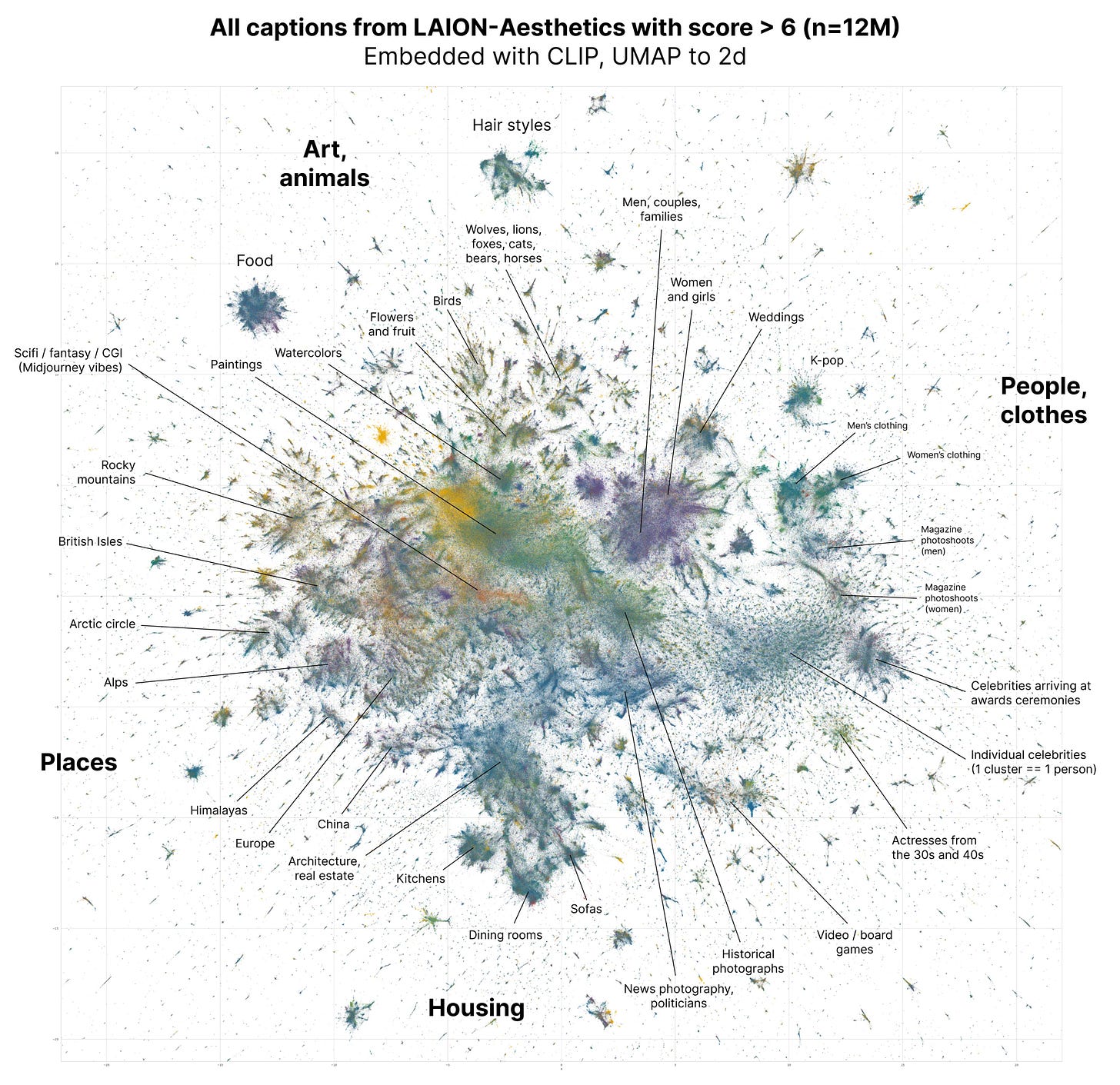

Nowhere is this intersection between art and computer science more clear than in image generation. Take a look at this map below. It shows you, roughly, what is in the 12M images that make up a key database used by AI image generators: paintings and watercolors, architecture and photographs, fashion and historical images. Creating something interesting with AI requires you to invoke these connections to create a novel image.

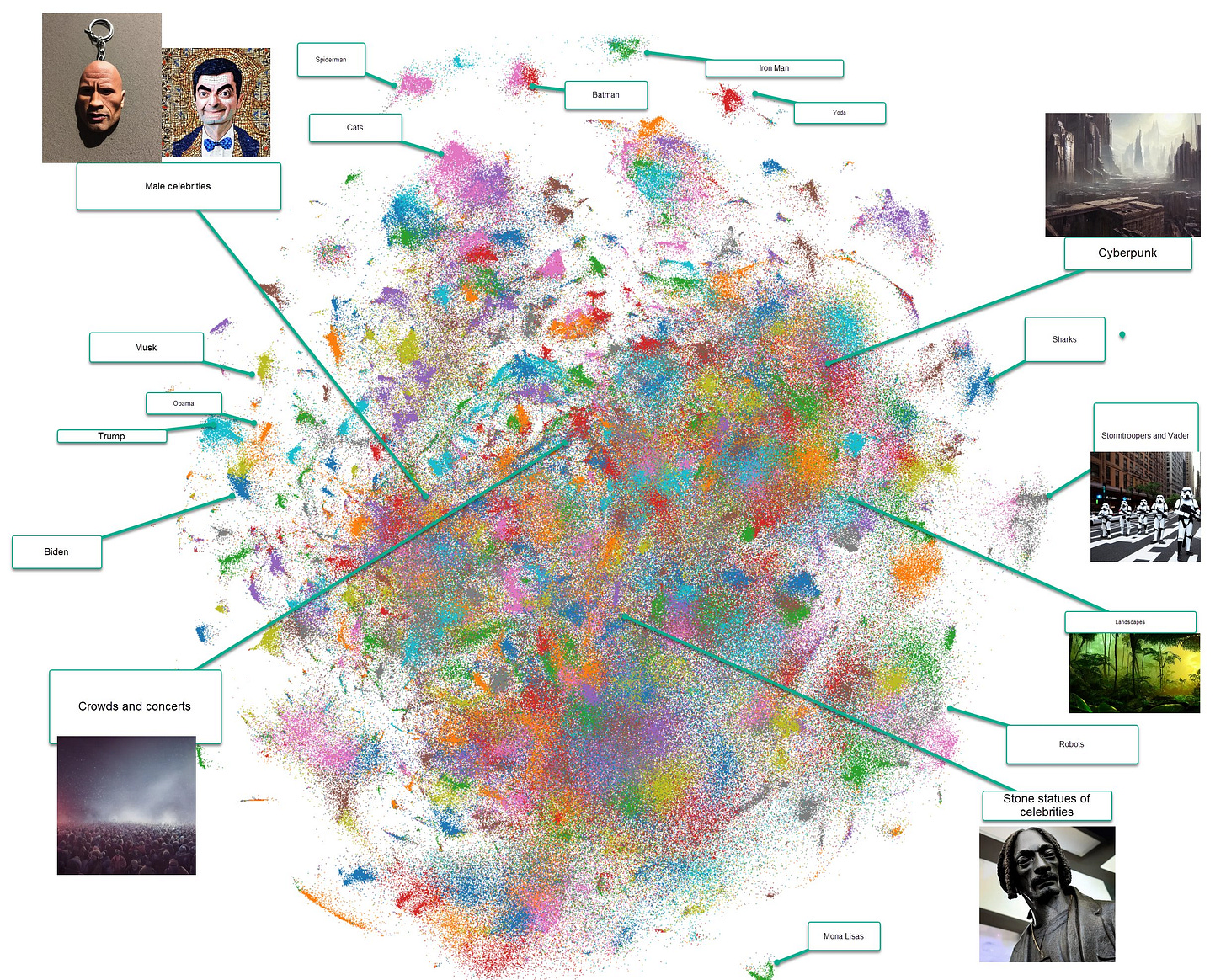

Now look at a second map. This is what people are actually creating with AI art tools. I labelled some of the clusters, but you can explore it yourself. You will notice that the actual range of used artistic space is much smaller. There is a lot of Star Wars, a lot of celebrities, some anime, cyberpunk, a lot of superheroes (especially Spider-Man), and, weirdly, a LOT of marble statues of celebrities. Given a machine that can make anything, we still default to what we know well.

But we can do so many more interesting things! The AI can do a marble statue of Spider-Man, but it can also do a pretty amazing ukiyo-e woodblock Spider-Man, or Spider-Man in the style of Alphonse Mucha, or even images entirely unrelated to Spider-Man (gasp). But you need to know what to ask for. The result has been a weird revival of interest in art history among people who use AI systems, with large spreadsheets of art styles being shared for prospective AI artists. The more you know about the history of art, and the more aware you are of art styles in general, the more powerful these systems become.

Understanding history and context also lowers the chance of unknowingly abusing these systems, which often have the ability to ape the style of living, working artists. An awareness of history also helps the user to see that these models have built-in biases due to their training on internet data (if you ask one to create a picture of an entrepreneur, for example, you will likely see more pictures featuring men than women, unless you specify “female entrepreneur”), and that they are also trained on existing art on the internet in ways that are not transparent and potentially questionable. So a deeper understanding of art and its history can result not just in better images, but also more responsible ones.

Conjuring in English

This brings us back to ChatGPT. To get the best writing out of the AI, you need to provide prompts, just like you do with generative art AI. And, even though using ChatGPT often seems like you are having a conversation, you really are not. What you are trying to do is prompt the system to do things by giving it instructions in English. The best coders of ChatGPT for writing have a solid understanding of the prose they want to create (“end on an ominous note,” “make the tone increasingly frantic”). They are good editors of others, so they can provide instructions back to the AI (“make the second paragraph more vivid”). They can easily run experiments with audiences and styles by knowing many examples of both (“Make this like something in the New Yorker,” “do this in the style of John McPhee”). And they can manipulate narrative to get the AI to think in the way they want - ChatGPT won’t produce an interview between George Washington and Terry Gross, because that would be fictional. But if you convince it that George Washington has a time machine…

But it isn’t just producing prose that requires English skills. Getting ChatGPT to do anything involves narratives. You can instruct the AI to act in a different way by prompting it to think of itself as a coach, or a novelist, or a frustrated playwright. This can lead to radically different outcomes (You can see hundreds of persona suggestions here). Or you can convince the AI to act in unexpected ways by telling it compelling stories that override its limits. There have long been hints that coding computers might be more closely related to learning a foreign language than many expect, but the connection is more explicit now.
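To make the "programs in prose form" idea concrete, here is a minimal sketch of persona prompting as a plain text template. Everything in it is an illustration of my own: the `build_prompt` helper, its parameters, and the exact wording of the template are assumptions, not anything ChatGPT requires. The code only assembles strings and does not call any AI service.

```python
from typing import Optional, Sequence


def build_prompt(persona: str, task: str,
                 style_notes: Optional[Sequence[str]] = None) -> str:
    """Assemble a persona-framed prompt as plain text (hypothetical helper)."""
    lines = [f"You are {persona}.", f"Task: {task}"]
    if style_notes:
        # Editorial instructions, like the ones quoted in the essay.
        lines.append("Style notes:")
        lines.extend(f"- {note}" for note in style_notes)
    return "\n".join(lines)


# Same task, three different personas: each prompt frames the request differently.
personas = ["a coach", "a novelist", "a frustrated playwright"]
prompts = [
    build_prompt(p, "Give feedback on my opening paragraph.",
                 ["end on an ominous note"])
    for p in personas
]

print(prompts[0])
```

Pasting each of the resulting prompts into the AI and comparing the answers is one simple way to run the kind of experiment described above; what comes back will vary, and finding out how is the point.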

Our new AIs have been trained on a huge amount of our cultural history, and they are using it to provide us with text and images in response to our queries. But there is no index or map to what they know, and where they might be most helpful. Thus, we need people who have deep or broad knowledge of unusual fields to use AI in ways that others cannot, developing unique and valuable prompts and testing the limits of how they work. We need to explore together to discover how these tools can be used well, and when they might fail us. It isn’t quite magic, but it is close.

A lot of people see ChatGPT as a tool to "look up information." That may be a valid use case, but I think that at least as important is using it as a tool for simulation. The examples given here, including George Washington talking with Terry Gross, are in the simulation mold.

Small quibble: There are a few typos, like "mightt", which should be "might".

The interesting part would be to see what happens when you mix languages. For example, there is a lot of "Spanglish" literature permeating the Hispano/Latino community around the southern border of the US. [Yes, I am deliberately avoiding the astroturf term Latinx].

Would GPT straddle across languages? Or is it going to stay monolingual?

I have not tried this experiment yet. But your post inspired me to think along those lines.