I, Cyborg: Using Co-Intelligence

How I used AI in my book about AI

I wrote a book about living and working with AI, Co-Intelligence, and it is coming out on April 2. If you like my Substack, you will like it, or at least that is what early readers are telling me. And, given that my publisher has told me that pre-orders are really important to a book’s success, if you want to get it, now would be a great time: here is the link to pre-order in any format you might like.

As you will see later in this post, AI played a critical role in writing the book (though likely not in the ways you might think), but I also wanted it to play an important role in reading the book, too. To make that happen, I have been building a set of AI tools, including a number of GPTs, to help people interrogate, apply, and interact with the book in new ways. The cool thing about GPTs is that they are a useful early form of AI agent that can be quite powerful. The downside is that they are also a bit of a security nightmare - it is possible to extract the files I use for the GPT (which include a lot of text from the book) by tricking the AI. So, for now, I am planning on making these tools as exclusive as possible to people who pre-order the book. If you buy it before March 31, you can unlock the content on that day here.

Whether you are interested in the book or not, I think it is worth discussing a bit about the role AI played in writing it, because it touches on more general issues of what it means to have AI overlap with a job.

The Author Alone

People often ask me if I have other people help writing these posts, or a ghost writer, or someone managing my social media feeds. The answer to all those questions is no, it is just me, writing and tweeting more than I probably should (which is why I hope people are forgiving of my spelling errors and erratic newsletter schedules). Then, naturally, people also ask me if I use AI to write for me, and the answer, again, is no, for a reason that I think is useful to understand.

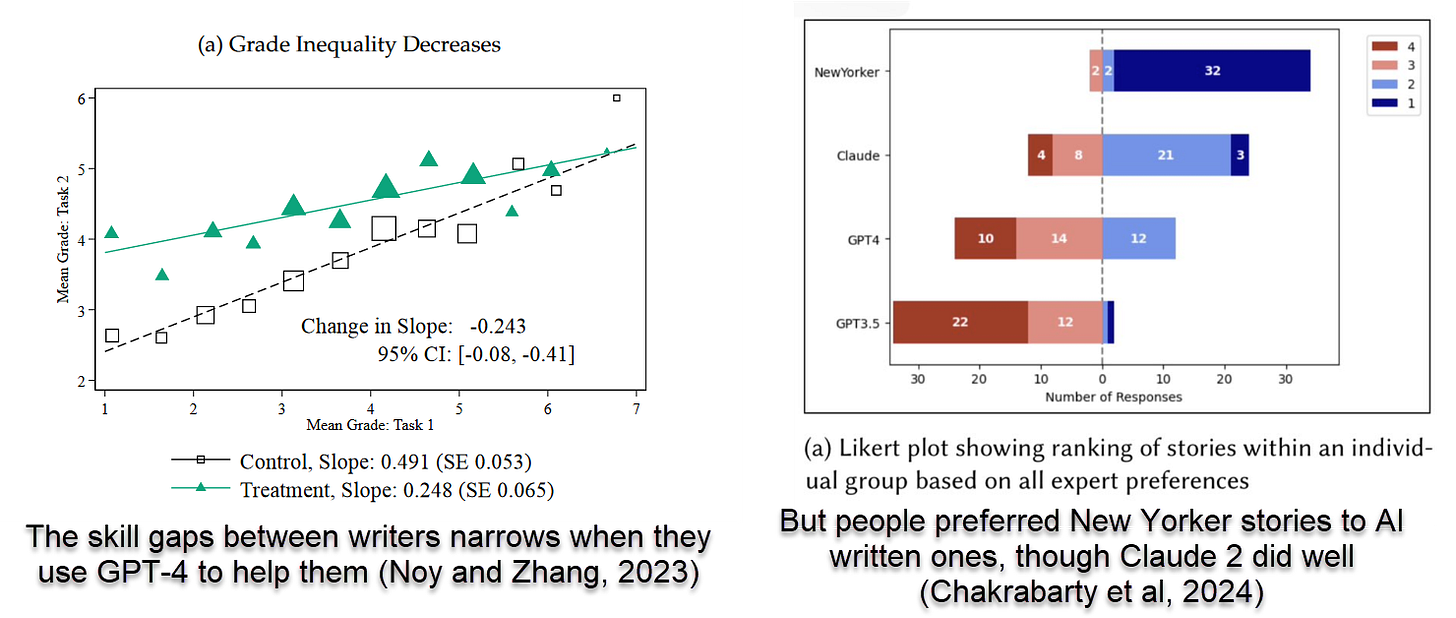

I do a lot of writing, and think I am a much better writer than any current AI system. All the evidence we have is that the most advanced LLMs write better than most people, but worse than good writers. From an assignment where I had my students “cheat” by writing essays by prompting the AI, I learned that good prompting can improve the quality of AI writing more than many people think, but it still can’t get you to the top 1%, or even top 20%, of human performance.

In fact, that is the general state of AI in early 2024. Whatever you are best at, you are very likely better than the best LLM at that same skill. This is why, at least for now, AI use can feel liberating - it does the tasks you want to do least so you can concentrate on the ones you both like best and are best at. Thus, I used AI extensively in the book, just not specifically for writing.

Author as Cyborg and Centaur

As I have discussed before, the most valuable way to start using AI for work is to become a Centaur or Cyborg. Fortunately, this does not involve getting cursed to turn into the half human/half horse of Greek myth or grafting electronic gizmos to your body. They are rather two approaches to co-intelligence that integrate the work of person and machine. Centaur work has a clear line between person and machine, like the clear line between the human torso and horse body of the mythical centaur. It depends on a strategic division of labor, switching between AI and human tasks, allocating responsibilities based on the strengths and capabilities of each entity. When I am doing an analysis with the help of AI, I will decide on what statistical approaches to do but then let the AI handle producing graphs. In our study at BCG, Centaurs would do the work they were strongest at themselves, and then hand off tasks inside the Jagged Frontier to the AI.

On the other hand, Cyborgs blend machine and person, integrating the two deeply. Cyborgs don’t just delegate tasks; they intertwine their efforts with AI, moving back and forth over the Jagged Frontier. Bits of tasks get handed to the AI, such as initiating a sentence for the AI to complete, so that Cyborgs find themselves working in tandem with the AI. My book could not have been written, at least in the form you can order it in today (subtle hint), without both Cyborg and Centaur Tasks.

I am only human, and in writing the book, I often found myself stuck. In previous books, that could mean a single sentence or paragraph would block hours of writing, as I used my frustration as an excuse to take a break and walk away until inspiration struck. With AI, that was no longer a problem. I would become a Cyborg and tell the AI: I am stuck on a paragraph in a section of a book about how AI can help get you unstuck. Can you help me rewrite the paragraph and finish it by giving me 10 options for the entire paragraph in four professional styles? Make the styles and approaches different from each other, making them extremely well written. In an instant, I had the paragraph written in a persuasive style, an informative style, a narrative style, and more. While I rarely used any of the text the AI produced, it gave me options and pathways forward. Similarly, when I felt a paragraph was clunky and bad, I would ask the AI: Make this better, in the style of a bestselling popular book about AI, or add more vivid examples. The text it produced almost never appears in the book, but it helped guide me out of difficulty. And, interestingly, it was some of these paragraphs that my editor seemed to like best.

Similarly, reading academic papers was often a Centaur task, one in which I knew the AI exceeded my capabilities in summarizing, while I exceeded it in understanding. While I have worked with the AI group at the MIT Media Lab, I am not myself a computer scientist. When reading some of the technical literature, I would ask the AI to summarize a paper to see if I understood it in the right way, knowing full well that the AI could get me only partway there. There are over 200 references in the book, so I would also use these AI summaries and notes to refer back to. When sudden inspiration would hit, and I was not near a computer, I would open up an AI app and use its voice recognition to dictate notes, having the AI organize them and email them to me to incorporate later.

But the joint AI-Ethan Cyborg went further. While I had excellent human readers and editors to help me, I didn’t want to bother them while working on early drafts. But I still wanted feedback. I created AI readers to help. I used these AI-created figures at various points in the book to help me improve my writing.

For example, I am an academic, and I have the typical academic’s curse of wanting to add too much complexity and nuance to everything. AI is great at summarizing and simplifying, so I wanted to get some advice on where I could do the same. I decided the advice would feel a bit easier to take if the AI also had an over-the-top personality, so I created Ozymandias by prompting: You are Ozymandias. You are going to help Ethan Mollick write a book chapter on using AI at work. Your job is to offer critical feedback to help improve the book. You speak in a pompous, self-important voice but are very helpful and focused on simplifying things. Here is the chapter so far. Introduce yourself.

Ah, dear Ethan, greetings. I am Ozymandias, your humble, yet impressively intelligent, aide. I see you’ve made a good start on your chapter about artificial intelligence in the workplace. But, alas, perfection is a journey, and we have miles to go before we sleep, do we not? Let’s delve into the labyrinthine corridors of your discourse and shed light on areas that could be enhanced.

It gave me advice on what to cut and simplify in one chapter, which I acted on, but also a warning: Your attempt to infuse humour, particularly in the AI joke section, is commendable. However, there is a risk that it may come across as forced. Remember, dear Ethan, humour is a spice to be sprinkled sparingly, not poured. Pretty direct feedback (and the addition of British English is a nice touch). But AI editors, unlike human ones, can be safely ignored on some topics, so I kept the jokes (you can let me know if that is a good idea or a bad one).

I used other AI readers at other points, never taking them too seriously, but they provided an outside perspective I may not have had otherwise. So, while I did not use an AI to write my book, this book was the one I enjoyed writing most because of AI. The AI let me get support for some of the worst aspects of book writing, and hopefully let me focus on what makes my writing appealing, though you can be the judge of that yourself (hint, hint).

The Future of the Author

This is my third book, so I naturally have been wondering: will it be the last one I write that features mostly my own writing? Every part of me as an author and reader wants to answer “NO” - writing is something that LLMs will never do as well as a human. But the real answer is: it depends.

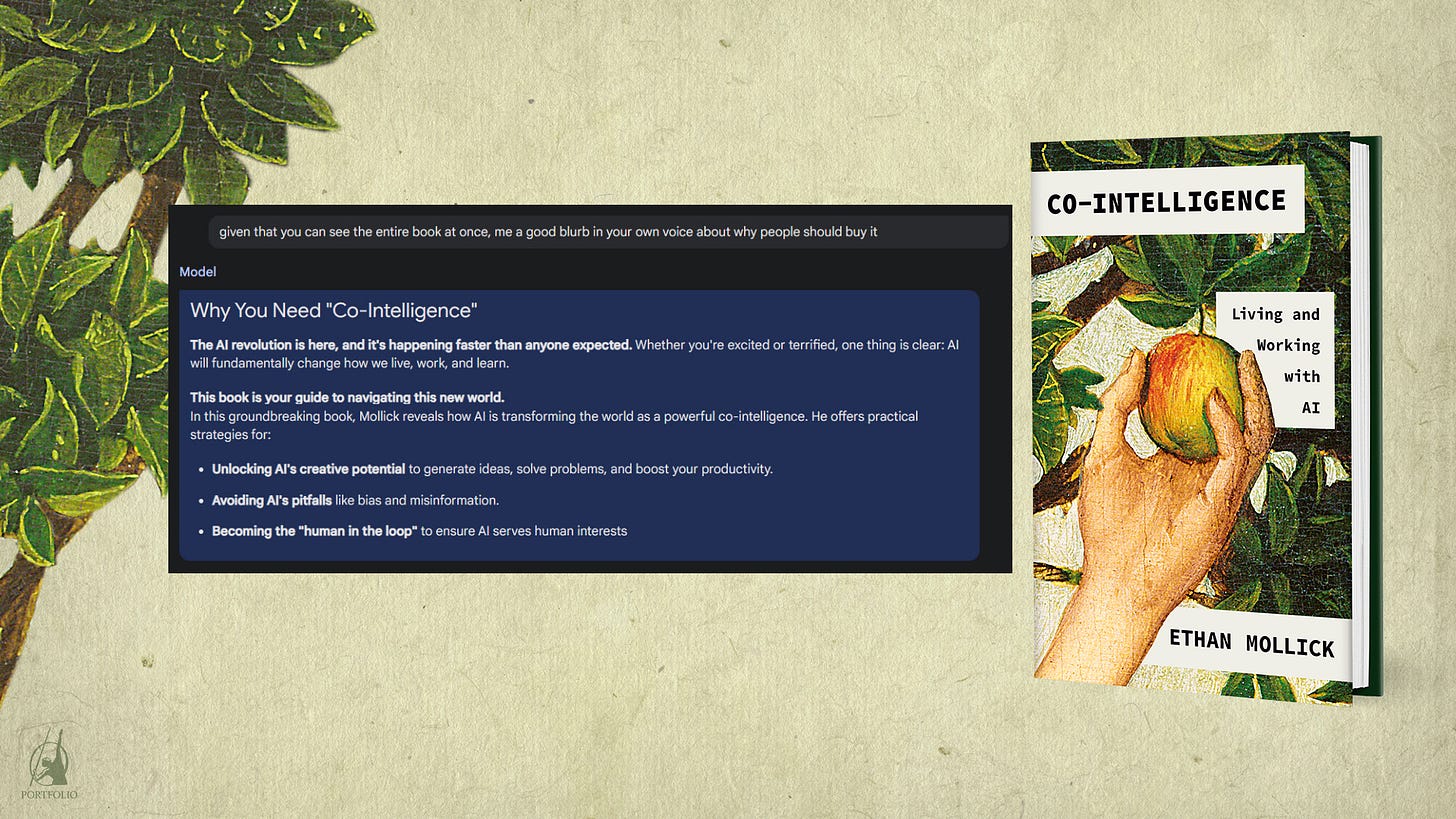

The recent public release of Claude 3 Opus, a GPT-4 class model from Anthropic, has showcased its impressive writing capabilities. Although it may not match the level of the most talented human writers, it represents a significant leap forward from its predecessor, Claude 2, which already demonstrated superior writing abilities compared to other AI models. (I had Claude 3 write those last couple sentences, but I tend to agree). Similarly, new models like Gemini 1.5 can fit my entire book inside their context window which enables new ways of working with text, including the ability to write a blurb based on the full contents of the book. With the rapid increase in capabilities, the real question is how good AI can get, and how rapidly it will improve.

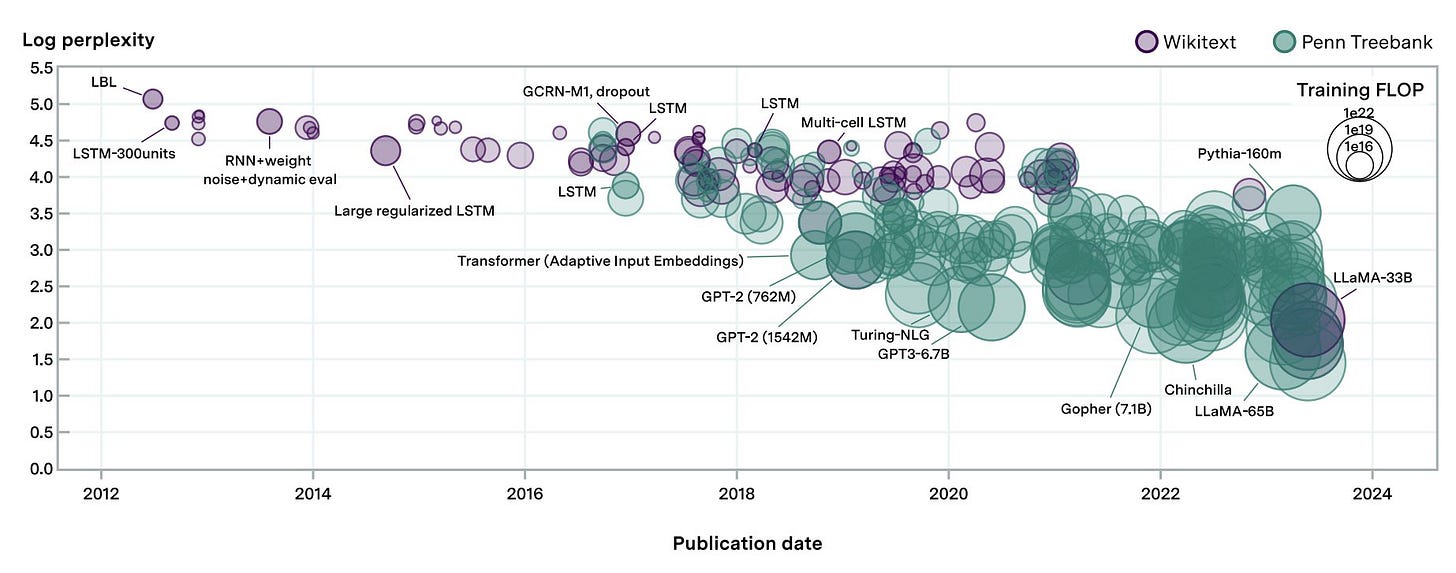

A new paper gives us some of the first evidence of how quickly LLM development is happening right now. And the answer is that LLMs are improving several times as fast as Moore's Law, the engine of the digital age, which holds that the number of transistors on a computer chip doubles every two years or so. For LLMs, the main measure in the paper is how well models perform, and the authors find that the compute needed to achieve a given level of AI ability is halving every 5 to 14 months, as opposed to 24 months for Moore’s Law.
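To make that comparison concrete, here is a rough back-of-the-envelope sketch in Python. It assumes that "halving the compute needed" can be treated as one doubling of efficiency, so that it can be put on the same scale as Moore's Law doubling every 24 months. The function names are mine, purely for illustration, and the only inputs are the 5, 14, and 24 month figures quoted above.

```python
# Back-of-the-envelope comparison of doubling periods.
# Assumption: one "halving of required compute" counts as one "doubling"
# of efficiency, so it can be compared with Moore's Law directly.

MOORE_MONTHS = 24.0  # Moore's Law doubling period quoted in the post


def doublings_per_year(period_months: float) -> float:
    """How many doublings fit into 12 months for a given doubling period."""
    return 12.0 / period_months


def annual_factor(period_months: float) -> float:
    """Overall improvement factor after one year of steady doubling."""
    return 2.0 ** doublings_per_year(period_months)


def speedup_vs_moore(period_months: float) -> float:
    """Ratio of doubling rates: this trend versus Moore's Law."""
    return doublings_per_year(period_months) / doublings_per_year(MOORE_MONTHS)


if __name__ == "__main__":
    for label, months in [("fast estimate", 5.0), ("slow estimate", 14.0)]:
        print(
            f"{label}: halving every {months:.0f} months, "
            f"{speedup_vs_moore(months):.1f}x the doubling rate of Moore's Law, "
            f"about {annual_factor(months):.1f}x improvement per year"
        )
    print(f"Moore's Law: about {annual_factor(MOORE_MONTHS):.1f}x per year")
```

On these assumptions, the fast end of the range works out to 24/5 = 4.8 times the doubling rate of Moore's Law, and the slow end to 24/14, or roughly 1.7 times.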

The paper also shows that the speed of improvement is not slowing down over time, at least not yet. But that doesn’t mean that the growth of AI abilities will continue indefinitely, or that LLMs will continue to improve in writing as quickly as other tasks. We could see a plateau of AI capability, slower growth, or continued exponential gain. We just don’t know what will happen next. I only know that, based on just the changes waiting for us in the coming year, any future book is likely to be written very differently than the one that you can pre-order right now (sorry, had to get a last plug in there).

When you said "I hope people are forgiving of my spelling errors", I thought "I've never seen an error in your newsletter." So I was reassured when the final paragraph included what is probably a typo for "but": "Cut that doesn’t mean that the growth of AI abilities will continue indefinitely".

Looking forward to the book!

Here is the key sentence: "I am stuck on a paragraph in a section of a book about how AI can help get you unstuck."

I find the same.

But, there is more!

I find that getting it to write something fires me to write things that I was not planning to or that escaped me for the moment *even in the area of my core competence.*