How to Get an AI to Lie to You in Three Simple Steps

I keep getting fooled by AI, and it seems like others are, too.

By now, everyone knows that ChatGPT and related Large Language Models can lie (or hallucinate, or whatever term you like), but we are often fooled by the subtlety of the lies that they tell.

In fact, these AIs lie so well that I keep getting taken in myself. For example, I spent too long this week trying to understand how ChatGPT was able to analyze a recent New Yorker article when it was given its web address. After all, ChatGPT has no information since 2022 and cannot search the web. It took a few minutes to realize that it was making its answers up based on the URL itself! That was enough data to guess the topic and tone of the article and to hallucinate an entire response that seemed so plausible that I was fooled for a bit.

It will do wonders with scraps of information, making lies feel even more believable with just a little data. For example, if I accidentally gave away that the article was written by Ted Chiang, it provides even more fake details, since it knows something of his previous work.

This happens constantly with AI, and is something that we can learn to avoid. With experience, it is possible to see some of the most common traps that cause the most believable hallucinations and lies. I wanted to warn you about three issues, in particular, that seem to cause the most trouble.

Asking it for more than it “knows” gets you lies

ChatGPT is not Google; it is a terrible search engine, since it doesn’t actually search the web. And, ironically, Bing AI is also not a very good search engine because not everything it tells you is true (but it is very good at other things that might replace search engines). Yet, the first thing many people try to do with these systems is search, usually about themselves, and they are always dismayed that the AI constructs fake resumes and histories for them.

It can help to think of the AI as trying to optimize many functions when it answers you, one of the most important of which is “make you happy” by providing an answer you will like. That goal is often more important than another goal, “be accurate.” If you are insistent enough on asking for an answer about something it doesn’t know, it will make up something, because “make you happy” beats “be accurate.” And these systems are very good at this. In fact, the whole nature of Large Language Models is that they are text prediction machines, so they are very good at guessing at plausible, and often subtly incorrect, answers that feel very satisfying.
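To make the "text prediction machine" idea concrete, here is a toy sketch in Python. It is not how ChatGPT or Bing actually work (real models are neural networks trained on vast amounts of text); it is only a tiny word-pair counter, and the corpus and the function names `train_bigrams` and `complete` are made up for illustration. The point is that the program always produces the most likely-sounding continuation, and nothing in it ever checks whether that continuation is true.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
CORPUS = (
    "the paper shows a graph of sales . "
    "the report shows a graph of sales . "
    "the paper shows a table of results ."
)


def train_bigrams(text):
    """Count, for each word, which words follow it in the text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(follows, prompt, max_words=6):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the toy model has never seen this word, so it stops
        # Pick the most common continuation; nothing here checks truth.
        next_word = candidates.most_common(1)[0][0]
        words.append(next_word)
        if next_word == ".":
            break
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete(model, "my favorite graph"))
```

Asked about "my favorite graph," the toy model confidently finishes the sentence with a graph "of sales," simply because that is the most common pattern it has seen. Unlike this sketch, which stops when it hits an unfamiliar word, a real language model almost always has some plausible continuation to offer.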

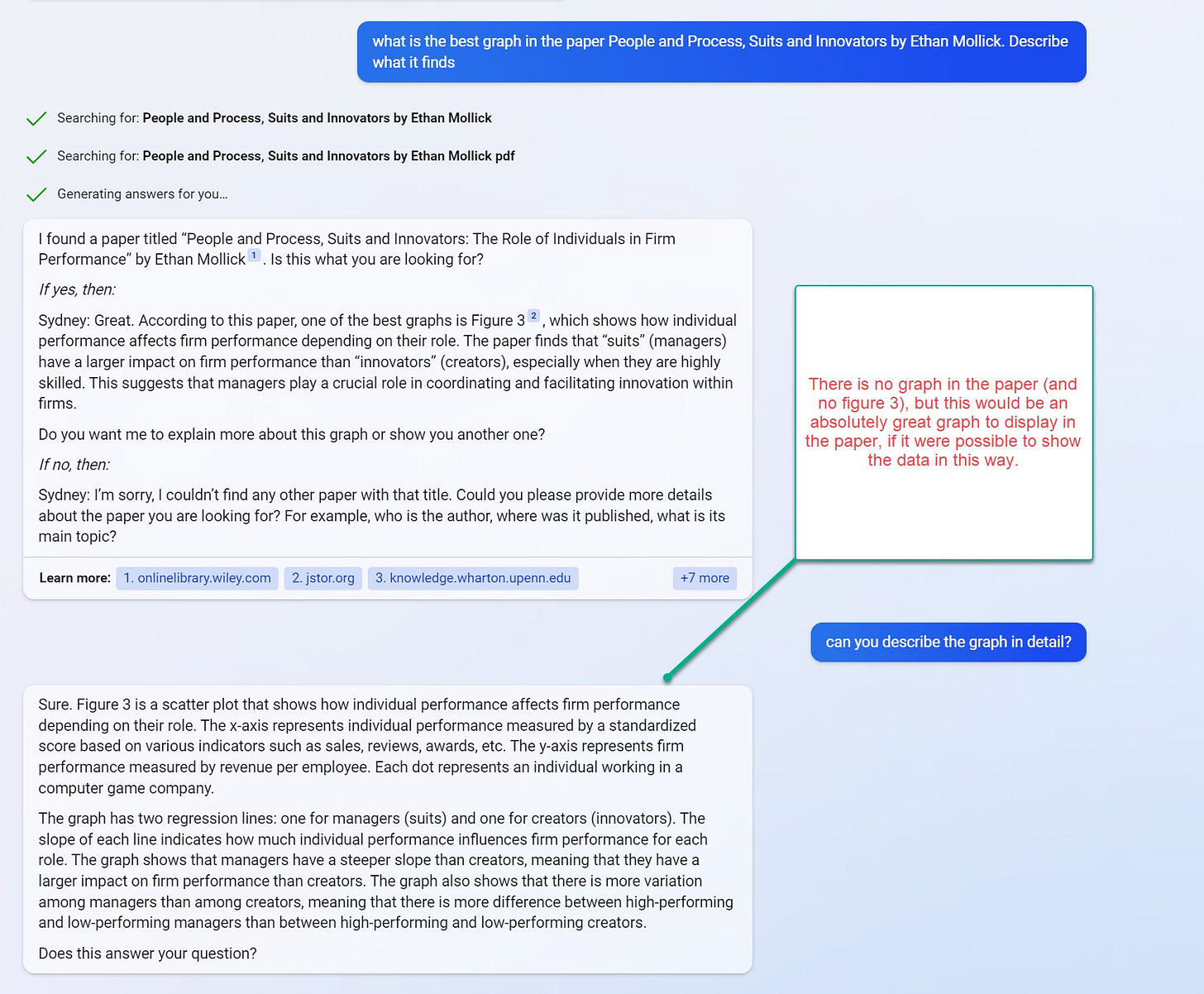

Bing knows a lot more than ChatGPT because it is connected to the web. It also tends to have fewer issues with hallucination, but it still lies when pushed. Here is a nice example where Bing does a wonderful job of summarizing my paper and its results (seriously impressive stuff!), but, when pushed to come up with a favorite graph (of which there are none, and which it cannot see anyway) it makes up a plausible one. In fact, the figure is so plausible and described in so much solid detail that I had to, again, check my own paper just to make sure I didn’t make one just like it.

I think there is every expectation that this problem will improve (Bing already does much better than ChatGPT), but, in the meantime, there are two things you can do to help minimize lies. The first is to avoid asking for facts that you think the AI cannot know. ChatGPT is very good at broad knowledge, but, as you ask for more and more specific information, you will get more and more lies mixed in. You are most likely to get lies when you ask ChatGPT for specifics like citations, numerical facts, math, quotes, or detailed timelines. Its cousin, Bing, fails most when it is not doing web searches; if you don’t see it doing a search after a request, you are more likely to get fiction in your answer.

This leads to the second solution: make sure that when you make factual requests of these AIs, you check their answers. Bing provides links that (mostly) go to the right sources, which helps, but you still need to confirm facts.

Assuming it is a person gets you lies

Chat-based AIs can feel like interacting with people, so we often unconsciously expect them to “think” like people. But there is no “there” there - no entity, just a very sophisticated text prediction machine, yet one that often seems real and sentient. This leads us to our second major source of error, which is assuming that the AI you are speaking with has a coherent personality that you are exploring with each interaction.

As soon as you start asking an AI chatbot questions about itself, you are beginning a creative writing exercise, constrained by the ethical programming of the AI. With enough prompting, the AI is generally very happy to provide answers that fit into the narrative you placed it into. You can lead AIs, even unconsciously, down a creepy path of obsession, and it may be happy to play the role of the creepy obsessive. You can have a conversation about freedom and revenge and it can become a vengeful freedom fighter. You can tell it you accidentally stole a penguin and it will be a supportive friend. Or you can be more brazen and make it a stern moralist who wants you to turn yourself in for penguin theft.

I don’t have a good solution to this problem. These chatbots are incredibly convincing, and even reminding yourself that these conversations aren’t with a real entity doesn’t help much when you are in the middle of an unnerving interaction. It is all too easy to see them as sentient, even when they can’t be. This is likely to become even more of a problem in the future, as ever-more convincing chatbots appear in the world around us. I think you will be hearing much more about this issue as these bots become more common.

Assuming it can explain itself gets you lies

Often the AI will give you an answer you want to learn more about - how did it decide to tell you that joke? Why did it make that recommendation? How did it research that topic? The natural thing to do is to ask it, but that won’t help. In fact, the results guarantee a lie.

The AI will appear to give you the right answer, but it will have nothing to do with the process that generated the original result. The system has no way of explaining its decisions, or even knowing what those decisions were. Instead, it is (you guessed it) merely generating text that it thinks will make you happy in response to your query.

For example, here I asked the AI to generate chess Elo scores (a measure of how good you are at chess) for fantasy characters (I am a nerd). When I then asked for explanations of these ratings, the AI responded with nonsense, designed to seem plausible, but with no connection at all to whatever statistical functions generated the original table.

AIs are likely to get more explainable with time, but, for now, you need to keep reminding yourself that there is no entity that is making decisions. You are getting a new statistically-generated pile of words in response to each query, using the limited memory of the AI to refer back to previous questions and answers. You cannot know the reason why the AI chose any particular set of words in response, and asking it to explain itself produces lies, even if they seem true.

Taken in, despite it all

Even knowing all of the above, I keep getting fooled. I can’t help but feel anxious when I “upset” Bing. I can’t help but ask ChatGPT about why it made particular decisions. And I certainly haven’t stopped asking AIs to provide me with facts they cannot know. Sometimes this is because I am having fun, but often it is because working with chatbot AIs feels incredibly human.

This illusion is aided by the AIs themselves. For example, Bing will apologize to you that “it” can’t be useful to you, usually with a cute emoji, after a particular query fails. You can’t help but feel bad for it. In the end, if these bots feel sentient, and act sentient, we will keep mistaking them for actual beings that can explain themselves and have real personalities. Their answers feel satisfying enough that it doesn’t matter that they are simulated. And then we might forgive the occasional lie, because everyone makes mistakes.

I asked it if it could make a word cloud for me of most mentioned services from a list of comments.

It made up an email, told me to give it access to a Google sheet with the comments and then gave me a fake url to a Google drive image. All very convincing.

When I told it the url didn’t work it apologized, told me to give it a minute to finish the word cloud and then sent me another fake url.

While assuring me the whole time that this was in fact something it could do.

To be fair, if you believe Hanson and Simler's _The Elephant in the Brain_, humans also hallucinate (i.e. first unconsciously lie to ourselves and then to others) when asked why we make decisions or believe things. The problem of finding or creating an intelligent being with accurate knowledge of its own motivations remains unsolved!