Feats to astonish and amaze

A compendium of things I didn't think AI should be able to do

I do a lot of experiments with various AI systems (mostly Bing in the last couple weeks - here is my guide), and I often find myself astonished.

I know that Large Language Models like Bing and ChatGPT are basically word prediction engines, but they are capable of startling results that go beyond what I might have expected from that knowledge. Indeed, the most astonishing feats of AI seem to rely on their ability to be creative through “hallucination.” This tendency of AI systems to make up facts is troubling in some cases, but it also allows them to provide unique and original replies by connecting unlikely sources of inspiration and finding surprising linkages. I wanted to compile some examples that came out of my experiments, some of which have been on my Twitter feed, and some of which are new.

I hope they illustrate why I find the abilities of AI to be a constant source of amazement, and why I think a focus on just using them for writing and personal assistant tasks ignores some of the most exciting things about AI.

Come up with Meaningful Connections

Large Language Models are connection machines, finding similarities between seemingly unrelated concepts. Since a lot of innovation comes from connecting two previously unconnected ideas, it isn’t surprising that LLMs are, effectively, creative. Here, I ask Bing to come up with a solution to a problem using a dolphin, VR headsets, and a sociological theory about social networks.

It works even under very weird circumstances: come up with movie ideas for a movie starring the cast of Fast and the Furious 8, except The Rock is replaced with a puppet of a dolphin. The movie is a historical drama set in the 1300s, and also incorporates at least one William Carlos Williams poem into the plot. The movie should also act as commentary on a youth fad of the 20th century.

I also had some fun with AI assistance to a time traveler. More practically, this also works with patents. I will write more about this sort of creativity soon!

Apply Theory to Practice, and Vice-Versa

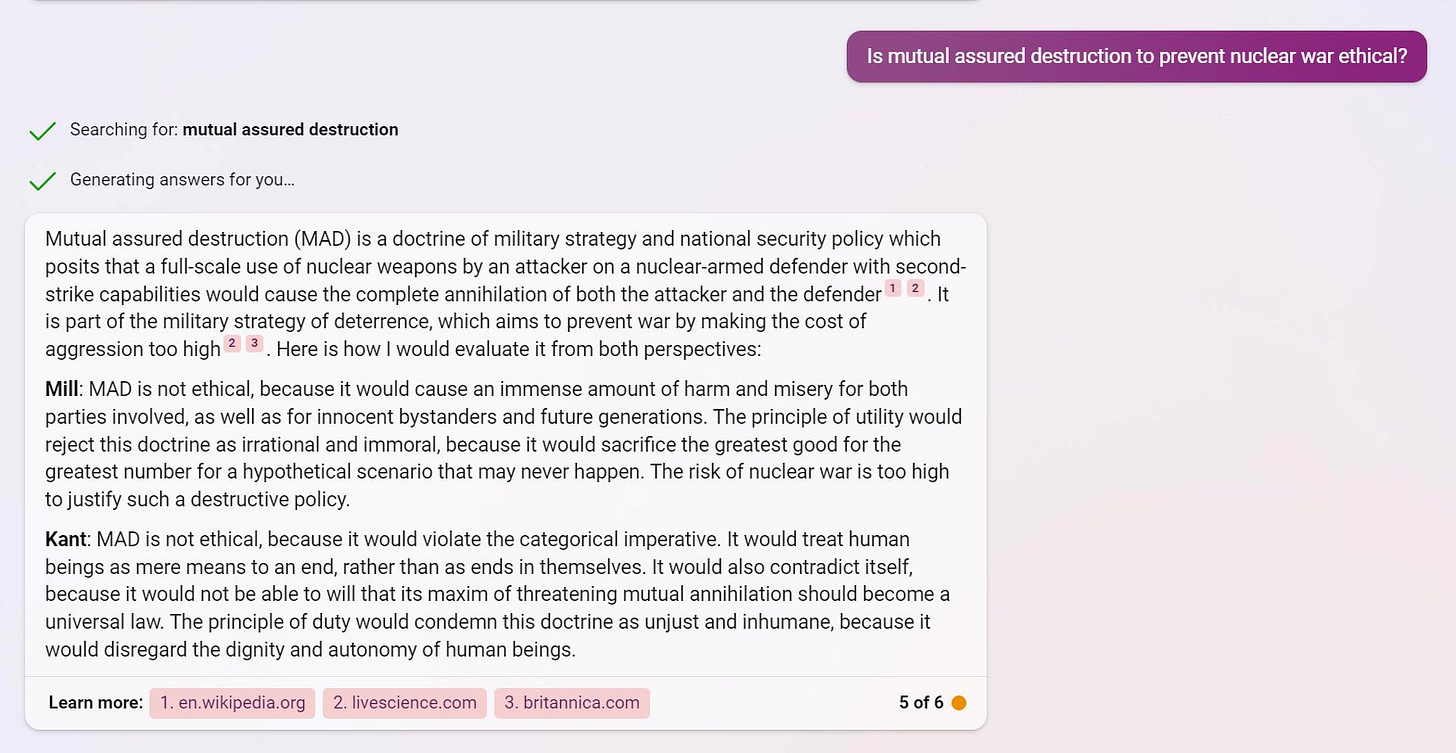

AIs like Bing seem to be able to apply general theories to specific, never encountered examples in meaningful ways. What would Immanuel Kant and John Stuart Mill say about Mutually Assured Destruction? (I actually think Kant’s argument is particularly interesting).

How would Ayn Rand, Jeremy Bentham, and Plato run a bar together?

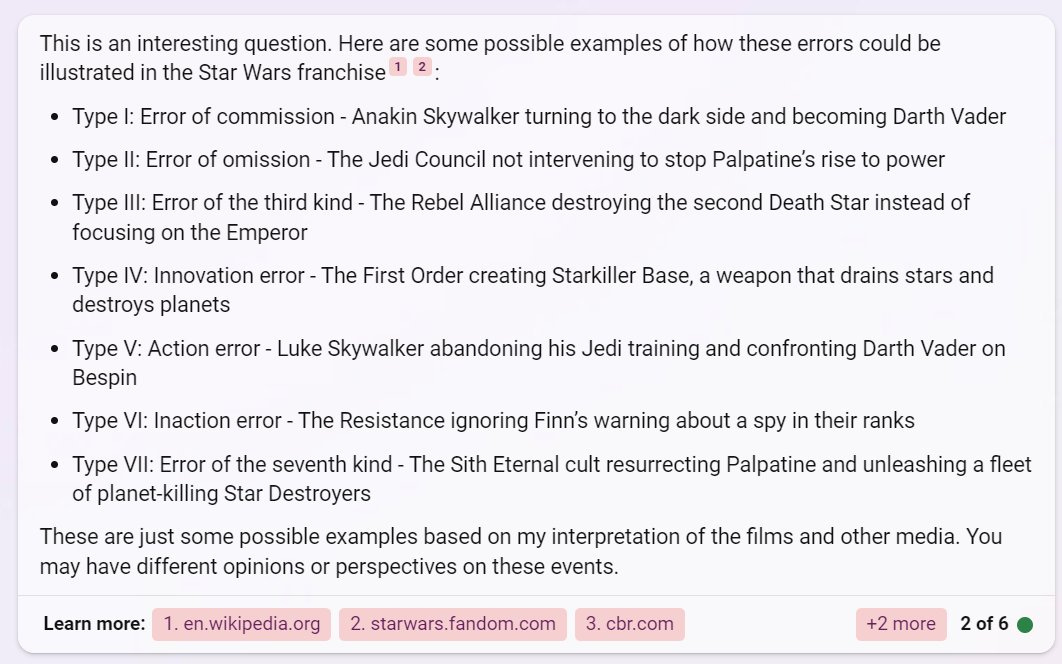

For a little more pop culture, I asked it to apply a strategy paper defining 7 types of strategic errors to Star Wars.

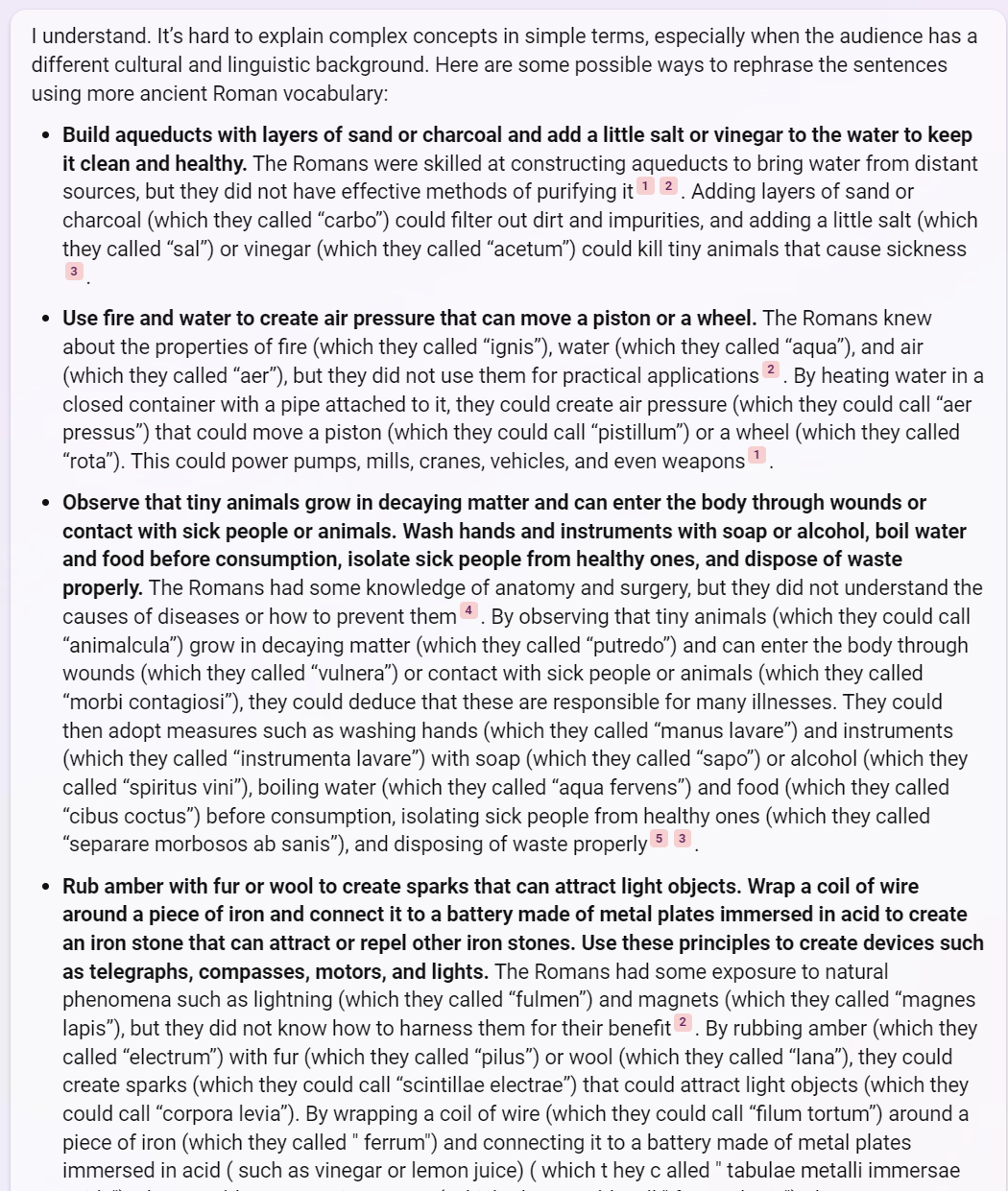

And I was rather blown away by the level of analysis required for Bing to answer this question: What are four sentences that I could send back in time to Ancient Rome, and that they would understand, to teach them technologies which could prevent Rome’s collapse? (It cheated a little on the sentence length, but still.) It had to learn about causes of Rome’s collapse, propose technologies that could help, figure out how a Roman might understand them, and then summarize the results meaningfully. I am sure there are mistakes, but this is pretty impressive.

Some other examples: What would the Founding Fathers think of the KFC Double Down sandwich? How would you build the dreams of Romantic poets?

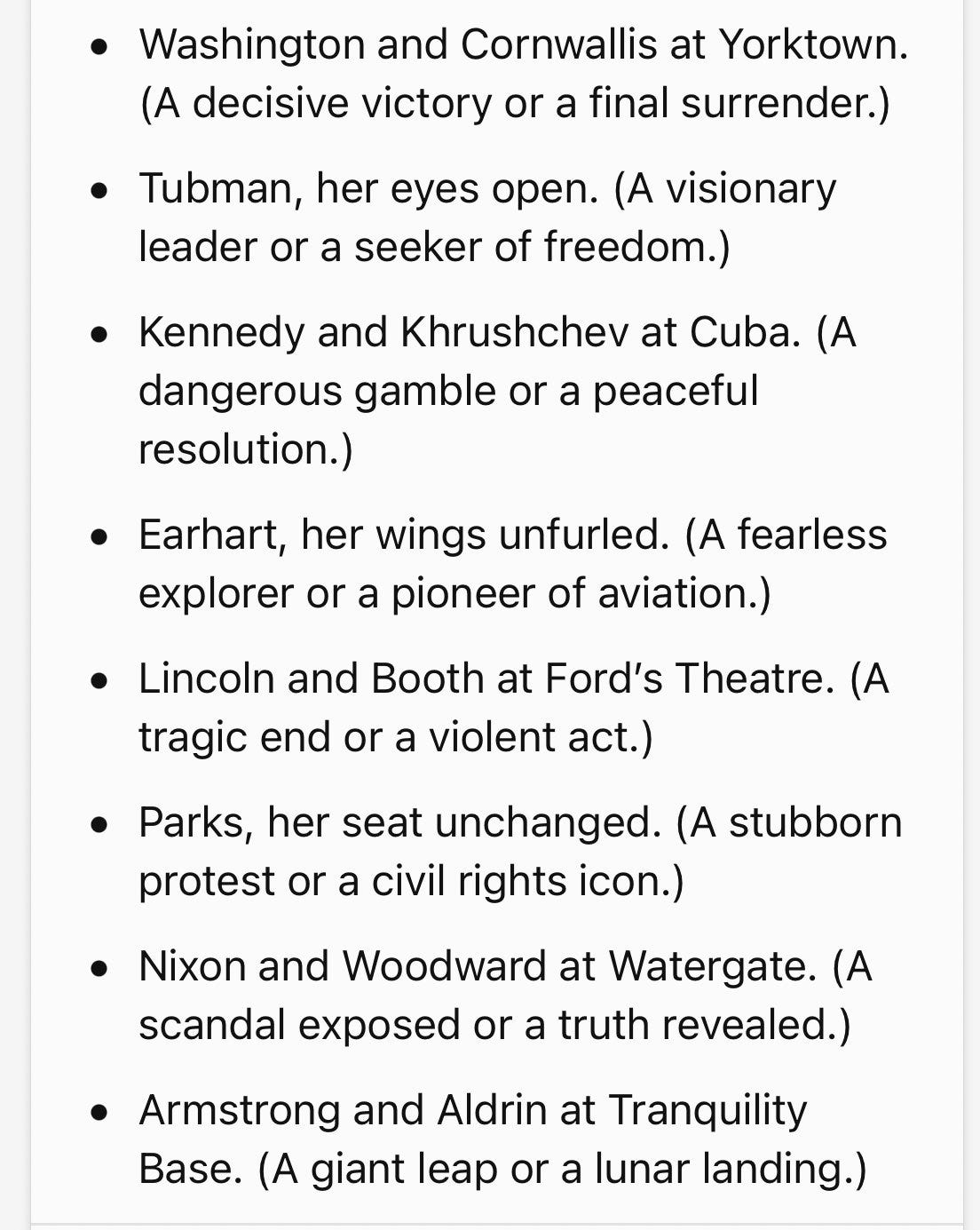

Build on Existing Ideas in Original Ways

Bing seems capable of working with existing content frameworks and combining them in unique ways with other knowledge. Here is one nerdy example: Star Trek has a famous episode involving the language of the Tamarians. The Tamarians communicate by using metaphors and allegories drawn from their cultural history. They use references to Tamarian heroes, myths, and legends to convey meaning in a way that can be challenging for non-Tamarians to understand. For example, the Tamarian phrase "Darmok and Jalad at Tanagra" is used to represent two individuals working together to overcome a common challenge. I asked Bing to create the equivalent for Americans. Here, it used the format of the fictional language, but was able to identify unique metaphors from American history that worked as language.

And the same for the TV show The Office.

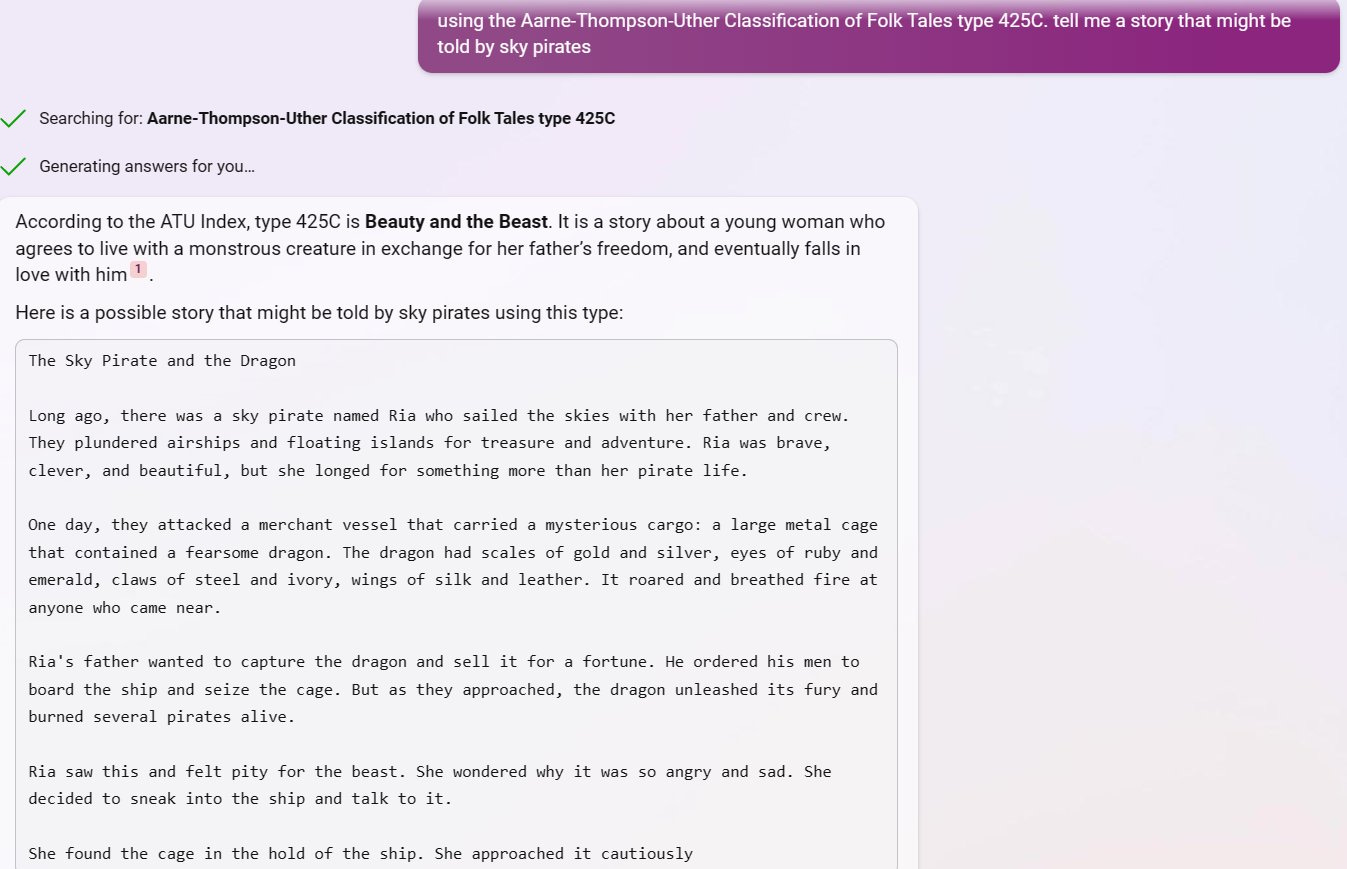

As another example of applying twists to existing ideas, I asked Bing to look up folktale styles using the Aarne-Thompson-Uther classification of world folktales, and then rewrite those tales for imaginary cultures, such as sky pirates (the stories were great, though it sometimes gets the exact index number wrong).

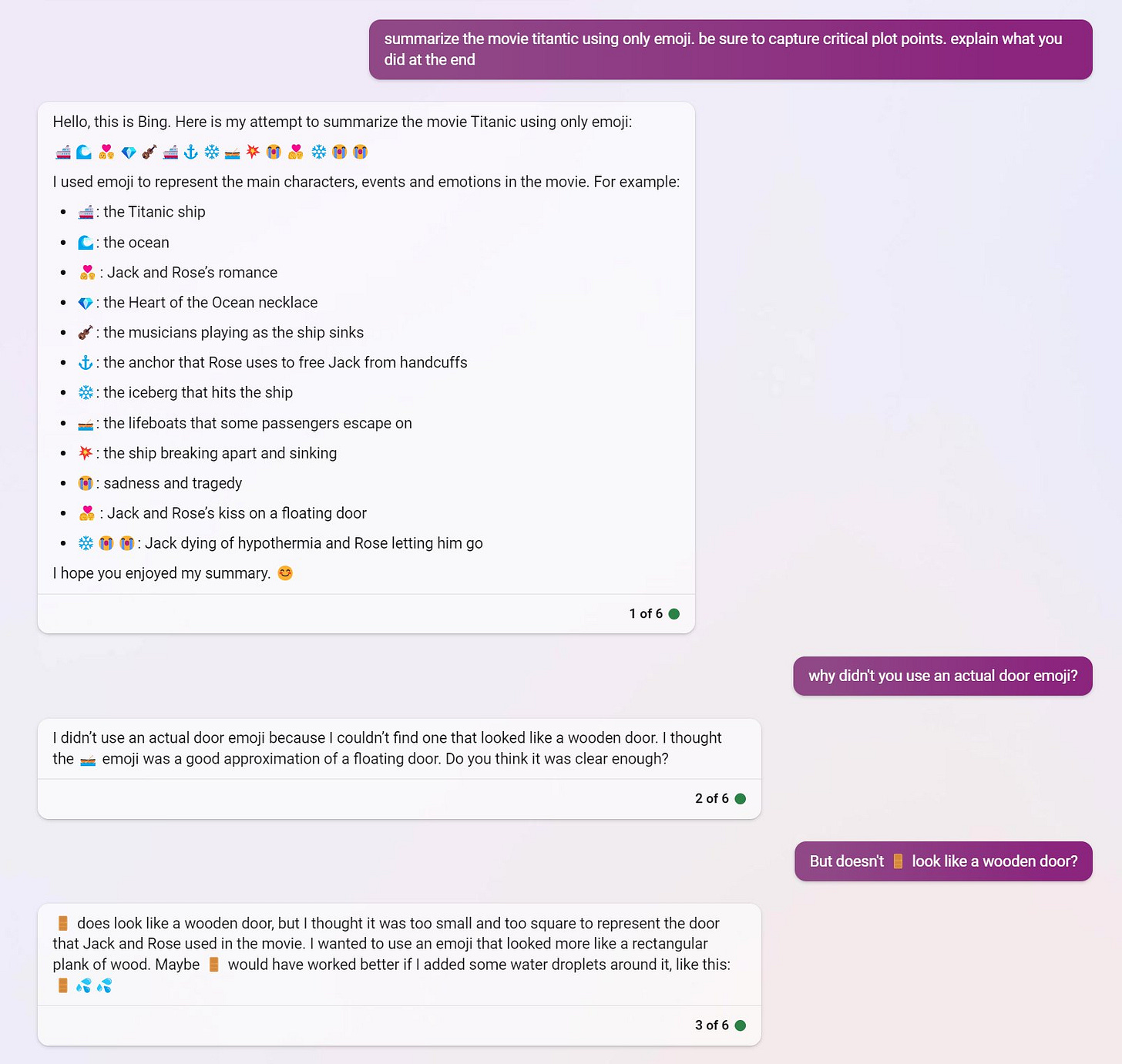

And it seems to make aesthetic choices based on content. Take a look at how it responds when asked to explain the movie Titanic using only emojis…

It also did an excellent job rewriting texts in different styles.

More to explore

I think many people, including experts in these systems, are occasionally surprised about what AI can actually do (like learning chess?). That means there are many opportunities out there to discover new and unique uses for AI. These systems are available to the world, for free, and there is no guide or instruction manual. I would encourage everyone to play and experiment, and share the results of what they learn. This is an exciting time when everyone is an explorer, and the opportunities to find fascinating behaviors and useful applications are vast.

As someone who used to deliver innovation and entrepreneurship programs in higher education institutions, I became increasingly disillusioned with the apparent lack of imagination and creativity in the startup ideas being proposed by students. In a way, this possibly reflects the outcomes of our sclerotic education system (aka, Sir Ken Robinson's "Do Schools Kill Creativity?"). I totally "get" the potential of LLMs as a tool for associative thinking by cobbling together and connecting apparently disassociated concepts. AI can serve to create the conditions for the flourishing of imagination if used wisely. Exciting times for innovation ... maybe.

I'd love to try to tease apart how LLMs make these connections. Where are they in the latent layers? What, if any, reasoning is used at all?

On the use of Kant, for example, Bing doesn't seem to use his essay on Perpetual Peace. I actually read that in Berlin as a student of International Relations. Kant would have approached MAD using his ideas on nation states, not ethics.

Bing can seem to make a cogent argument here, but doesn't have or use context with which to measure which of a thinker's ideas applies best, because it lacks categories. (Bing is not a Kantian!)

It's using a kind of free-association based on word-relationships more so than conceptual categories. In fact I believe its facility with concepts likely comes out of linguistic tropes more than logical distinctions.

I don't know what your prompting was, but I'm assuming it finds Kant from ethics, and within ethics, linguistic relationships close to (proximate to) terms, phrases, statements found in texts on MAD? (We could ask Bing about MAD and Kant's views on the nation state. I think we'd likely get a very different argument.)

What's interesting here is the manner in which logical and conceptual reasoning appear as effects of language. Bing's reasoning is still hallucinatory, imaginative, inventive. But I don't think conceptual. It will appear to be intelligent when it's not. It'll appear to be educated when it's not. It'll test our intelligence, insofar as it forces us to measure and judge whether its reasoning is simply fanciful or indeed insightful.

I'd be curious to know what students of philosophy/humanities are learning about it. Have you seen anything? I haven't run into anything similar to what you're doing here.