Doing Stuff with AI: Opinionated Midyear Edition

AI systems have gotten more capable and easier to use

Every six months or so, I write a guide to doing stuff with AI. A lot has changed since the last guide, while a few important things have stayed the same. It is time for an update. This is usually a serious endeavor, but, heeding the advice of Allie Miller, I wanted to start with a different entry point into AI: fun.

Experiencing AI through play

I have given talks to thousands of people about AI, and there are lots of things that I can demo that tend to amaze or worry folks, but there is one thing that never fails to delight: making a song.

So, before you do anything else, go to Suno (which you can also access via Microsoft Copilot) or Udio and make a song. Even if you have done it before, the updated models are so much better that you should try again. Here, for example, is the modern jazz pop rendition of the abstract to Attention is All You Need, the paper that kicked off Large Language Models. It’s surprisingly catchy. Seriously, give it 25 seconds; I have been singing the chorus to myself all day.

But if you want another playful audio way to experience the same paper, take a listen to the first entry in Google’s Illuminate demo, which turns papers into NPR-style radio interviews. It is worth a few moments of your time to play one to see how realistic it sounds - the little breaths, pauses, and interactions between the virtual hosts all sell the idea. Of course, even these playful applications of AI expose some of the issues that haunt Generative AI overall. For example, we don’t know which data was used to train AI music models, and what its implications are for artists.

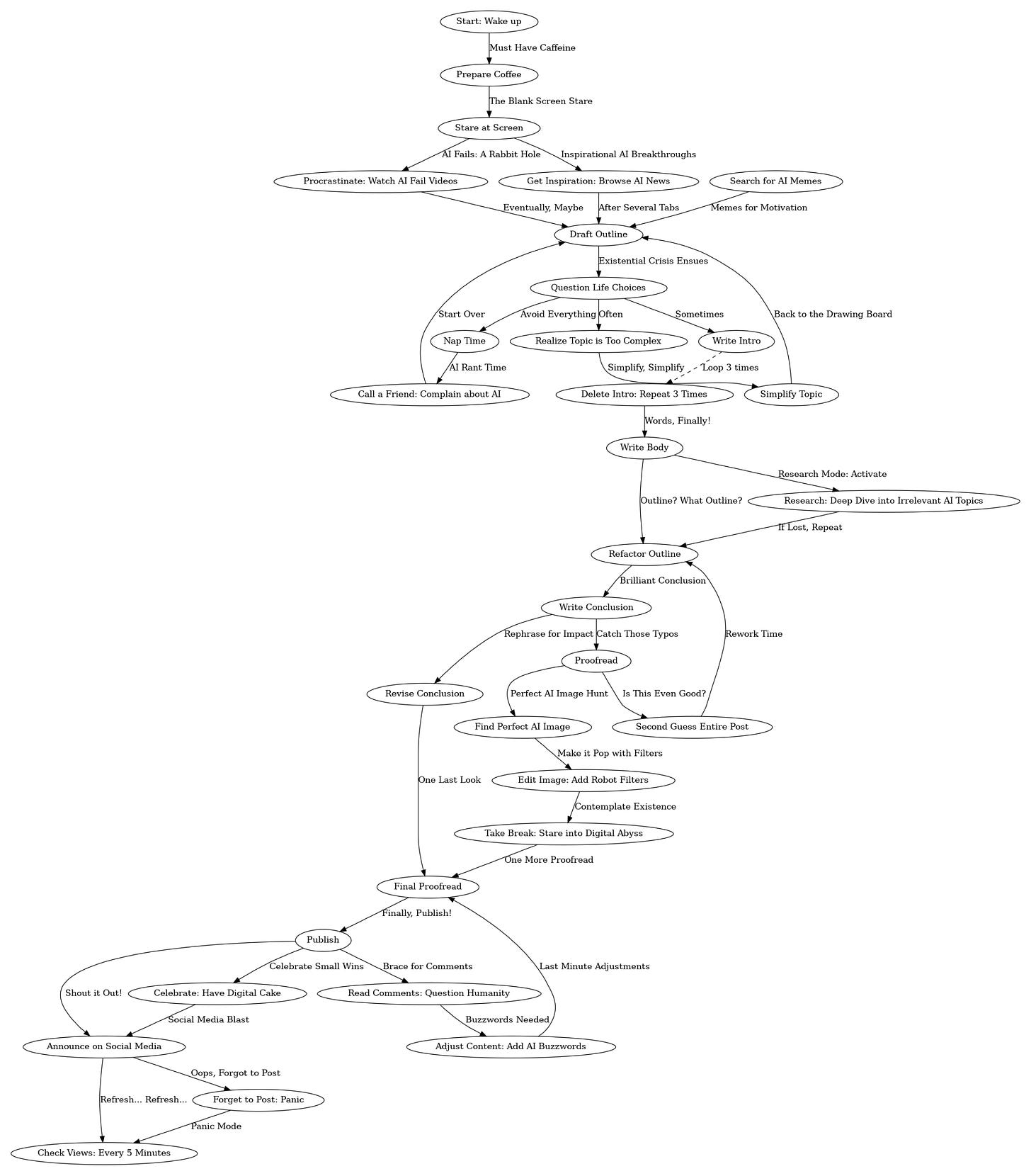

So, if you don’t want to start with music, there are lots of other playful entry points, which are fun, but also show you some of the quirks and limits of LLMs. Here is a GPT that makes tasks as complex as possible and creates a flow chart for you, most of the time (sometimes the AI forgets it can make flow charts and needs to be reminded). Here is a GPT I made that turns the AI into a dungeon master for a made-up game of your choice (as a side note, LLMs work best when they can write out a plan for what they are doing, but that would give away the game details, so the GPT is instructed to do its planning in Chinese in hidden code blocks, so as not to give away spoilers). Or if you want to get really weird, you can try Websim, which generates a fake early 2000s website for you to browse based on a URL you enter. If all of this is too much, just having a conversation with the voice mode of an AI system is often an interesting and playful entry point.

Playing with AI is ultimately serious - it is a good way to get to see what the AI can and can’t do, where it is “imaginative” and where it is cliched. For example, see what happened when I just prompted Claude with the words “garlic bread” and then kept telling it that I wanted it to do something else. Playful, weird, and, I think, also illuminating.

But enough play, let’s get to work.

Getting serious: using AI to do stuff

The core of serious work with generative AI is the Large Language Model, the technology enabled by the paper celebrated in the song above. I won’t spend a lot of time on LLMs and how they work, but there are now some excellent explanations out there. My favorites are the Jargon-Free Guide and this more technical (but remarkably clear) video, but the classic work by Wolfram is also good. You don’t need to know any of these details, since LLMs don’t require technical knowledge to use, but they can serve as useful background.

To learn to do serious stuff with AI, choose a Large Language Model and just use it to do serious stuff - get advice, summarize meetings, generate ideas, write, produce reports, fill out forms, discuss strategy - whatever you do at work, ask the AI to help. A lot of people I talk to seem to get the most benefit from engaging the AI in conversation, often because it gives good advice, but also because just talking through an issue yourself can be very helpful. I know this may not seem particularly profound, but “always invite AI to the table” is the principle in my book that people tell me had the biggest impact on them. You won’t know what AI can (and can’t) do for you until you try to use it for everything you do. And don’t sweat prompting too much (though here are some useful tips); just start a conversation with AI and see where it goes.

You do need to use one of the most advanced frontier models, however. As I have discussed repeatedly, only these models can show you what AI can do. The last time I wrote a guide, there was only one frontier model; now there are three: Claude 3 Opus, Gemini 1.5 and GPT-4. Others are coming soon but, as of now, those are your choices. Last time I wrote the guide, most people were still using an obsolete model because it was free and everyone had heard of it (free ChatGPT, which was, at the time, powered by GPT-3.5). Today, everyone going to ChatGPT gets free access to the same advanced model, GPT-4o.

The biggest change is that all of these models now do a lot more than they used to:

Connect to the internet. GPT-4 and Gemini are both connected to the internet. That means that they can access information from after their training was completed, and that they can do research tasks, though they can still hallucinate incorrect answers. If you really want to do research, however, a specialized AI model, Perplexity, may be a better choice, as it has a great interface and specialized tools that are optimized for research.

Make images. GPT-4 and Gemini both create images, but, at least for now, they do it in a relatively crude way. When you ask these LLMs to create images, they actually write a prompt for a separate image generation tool and show you the results. That means that they do not directly control the image, and the image generation tool is not as smart as the LLM itself. If you ask the AI to “make sure there are no elephants in the picture” it may send a prompt telling the image generator “and do not show elephants” but the image generator just sees the word “elephants” and gives you some.

In general, image generation tools have improved a lot (see the pictures below for a comparison of models from this year and last) but Gemini and GPT-4 do not have access to the best tools, though that may change soon. Instead, you may want to pick from many standalone image models. Consider Adobe Firefly, which is not as strong as other models but has the most artist-friendly approach to training, using only images they have licensed. Or you may want to look at Midjourney, which is probably the best image creator, but whose training policies are unclear.
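To make the elephant example above concrete, here is a deliberately simplified toy sketch in Python. It is not how any real image model works: both functions are hypothetical stand-ins I made up for illustration, and the "image generator" here just matches keywords. The point is only to show how a negated instruction can get lost when one system hands a text prompt to a simpler one.

```python
# Toy illustration only: real image models are far more complex than keyword
# matching. Both functions below are hypothetical stand-ins, not real APIs.

def llm_write_image_prompt(user_request: str) -> str:
    """Stand-in for the LLM step: it wraps the user's request in a prompt."""
    return f"A detailed picture. {user_request}"


def toy_image_generator(prompt: str, known_subjects: set[str]) -> list[str]:
    """Stand-in for the image tool: it 'draws' every subject it recognizes
    in the prompt and ignores the grammar around it, including negation."""
    words = [w.strip(".,!?\"'").lower() for w in prompt.split()]
    return sorted({w for w in words if w in known_subjects})


if __name__ == "__main__":
    subjects = {"elephants", "savanna", "sunset"}
    prompt = llm_write_image_prompt(
        "A savanna at sunset, and do not show elephants."
    )
    # The word "elephants" is in the prompt, so the toy generator includes it.
    print(toy_image_generator(prompt, subjects))
```

In this toy version, the request to leave out elephants still puts the word "elephants" in the prompt, and that word is all the downstream tool reacts to.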

Runs Code and Does Data Analysis. Technically, these are very similar capabilities: both are enabled by the fact that an AI that can write and run code can do a lot. Some of the most interesting advances in the last couple of weeks have been advanced data analysis interfaces added to both GPT-4o and Gemini. As you can see below, GPT-4 continues to be the leader in this area, with more advanced features (including interactive graphs and tools to visualize datasets) and a better “instinct” for analysis.

Sees images: The ability of AIs to “see” images remains an underused capability, even though it adds a tremendous amount of value to the system. I think reading through the Microsoft report on GPT-4’s vision is a helpful way to understand some of the implications.

Sees video: As the immediate memory, or context window, of AIs grows, they can start to work directly with videos, keeping an entire video in memory at once. Gemini has a startlingly large context window, and I can give it a 30 minute video of a museum walkthrough and ask it to tell me what happens when, and which moments might appeal to kids. A task that is complex for humans takes the AI less than a minute, which suggests why working with video will have large implications.

Reads Files/Works with Documents. Being able to upload documents makes the AI much more useful for summarizing, analyzing, and improving your work. Some of the AIs now connect directly to your documents, like Microsoft Copilot and Office and Gemini and Google Docs, but these interactions are still a bit buggy. The best AI for working with PDFs and text files is probably Claude 3 Opus, which combines both a large context window and a very clever AI that seems to do very well with written work. I gave a legal document to Claude today, and it found an issue that neither side’s lawyers had identified, but which everyone agreed was important after Claude pointed it out. I wouldn’t use it to replace a lawyer/doctor/editor, but a cheap second opinion that is mostly right is very valuable.

GPTs: I have written a lot about why GPTs are valuable: they are ways of sharing useful automated tools with others. Currently only ChatGPT supports them.

Overall, you can’t go terribly wrong with any of the major frontier LLMs, but you will find each has its own strengths and weaknesses, and the situation is evolving quickly.

Already obsolete

In some ways, this list is already outdated. We know new features are coming soon in GPT-4o, including a native ability to work with voice at a very high level, and multimodal image creation that will be more accurate than previous image generators. Though video tools like Runway exist now, we know that Google and OpenAI both have systems that can generate high quality video from prompts alone. Smaller AI models that can run on your phone are available and will soon connect to larger networks of AIs to solve hard problems. And the nature of our interaction with AIs themselves might be changing, as agents and AI devices start to spread.

Even more importantly, in conversations with folks at AI labs, and in the public statements of people with some knowledge of the future, I see a newfound confidence that the next round of AI models will be much smarter than the ones we are using today, opening up new use cases, opportunities, and risks. If they are right, then this post is already an artefact of the past, a list of already obsolete AIs.

Two notes: First, I don’t take any money from any AI labs or any other AI company I discuss. Second, you may have noticed that you have the option to pledge a subscription to this Substack. A lot of you have voluntarily done so - thank you! But for almost two years, I haven’t collected any of those pledges. I am planning on starting to do so in the next week or so. But you should know that I intend to keep this Substack free for everyone, and to publish on my own schedule, so pledging doesn’t currently get you anything special other than my deepest thanks! I will let you know if I start to add subscriber-only features, but if you wanted to change or cancel your pledge, here are the directions.

I appreciate your posts and look forward to playing with these projects this summer.

This spring I had to pivot as a high school English teacher trying to pitch the value of poetry to students. I was seeing writing that I suspected had AI help, to say the least, so I asked my students to write with integrity as they experimented with ChatGPT and poetry - asking big questions as to the role of the poet in an AI world.

They had to credit AI where credit was due - indicating AI writing in bold font - as they wrote poems and reflections on…

Why write poetry?

Does poetry matter?

What’s the point if large language models can generate sonnets and sestinas in seconds?

They read various Ars Poeticas by poets and wrote their own. They researched and presented more than 90 poets and cross checked with ChatGPT. This fact checking is essential as AI churns out words, words, words - some true, yet some false. Discernment is an essential skill. They concluded that writers write with an authentic voice that reflects their lived experience - and context is everything: historical, biographical, political, and social.

Echoing Ross Gay, writing serves as an “evident artifact” to thinking, to struggling,

to investigating, to enduring,

to living - and to inspiring

by sharing with the world.

As educators, we will have to ask big questions as we rethink teaching and learning with this technology.

We must consider our students and their future as they develop their respective relationship with writing and reading.

Right now, more questions than answers.

And as Rilke writes:

“I want to beg you, as much as I can, dear sir, to be patient toward all that is unsolved in your heart and to try to love the questions themselves like locked rooms and like books that are written in a very foreign tongue. Do not now seek the answers, which cannot be given you because you would not be able to live them. And the point is, to live everything. Live the questions now. Perhaps you will then gradually, without noticing it, live along some distant day into the answer.”

“Writing is the evident artifact of some kind of change.” - Ross Gay

From the Slow Stories podcast.

https://podcasts.apple.com/us/podcast/ross-gay-theres-always-a-gathering-inside-of-us/id1438786443?i=1000590791028

I especially love some of the "fun" use cases. A great way to dip your toe into working with AI while having fun in the process.