One of the keys to humanity’s success is our ability to make analogies.

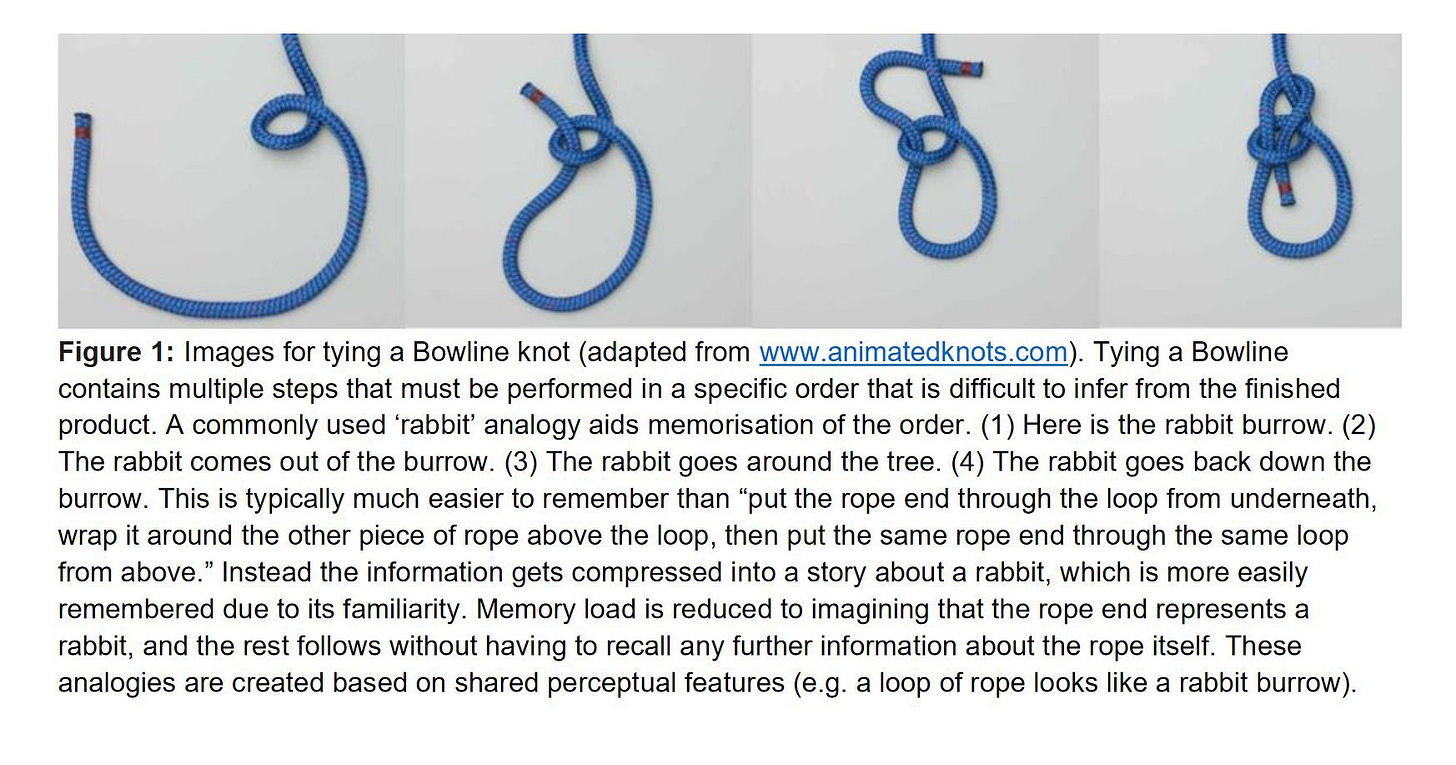

That isn’t an exaggeration. The ability to think with analogies may be the key to how humans have been able to collectively create entirely new things. For example, the person who invented the first knot could teach others to tie one by instructing “put the rabbit in the burrow,” without having to explain the concept of a knot. Analogies still help explain new concepts today. We know that both consumers and venture capitalists respond to analogies when trying to assess new startup ideas, basing prices and valuations partly on the analogies founders use and the companies they compare themselves to. This is why the “Uber for X” formula was so common: it is a clear analogy comparing a new startup business model to an existing giant.

But analogies can also be dangerous or limiting, oversimplifying complex issues. While analogies can be useful in making a point, they are not always accurate representations of the issue at hand. By relying on an analogy, one may overlook key differences between the two things being compared, leading to flawed conclusions. For example, my colleague Prof. Natalya Vinokurova has shown that the 2007-2008 Financial Crisis was due, in part, to bad analogies. The creators of mortgage-backed securities were able to get investors to make analogies between those risky instruments and standard bonds, which led to flawed models about risk.

When confronted with new things, we make analogies. And those analogies shape how we think about them. I think the flaws in the analogies that we use to explain AI are leading us to some fuzzy thinking. Let me explain.

AI as robot, AI as parrot

Science fiction has provided us with a very clear picture of AIs. They are robots - logical, calculating machines that never make a mistake unless there is a flaw in their programming. They are incapable of creative thought, and, in fact, trying to get them to understand something illogical or emotional often makes them explode.

The logical, correct robot, of course, is the exact opposite of the strengths of the current Large Language Models, like ChatGPT. These models only predict text outputs from prompts, and as such, they aren’t logical or accurate at all. They lie constantly and they produce hallucinatory wrong answers. Yet many first-time users I talk to find LLMs frustrating because their analogies are to robots and computers. They usually ask for factual information first (such as their own biography) and quickly find that ChatGPT confidently makes up false information. What good is a robot that isn’t accurate or logical?

Similarly, more technical people focus on the ways that LLMs actually work. Since they are only predicting what comes next in a sequence of text based on an initial prompt, they are nothing more than “autocompletes on steroids.” Alternatively, researchers have compared an AI to a parrot because it mimics human language without understanding its meaning or context. Neither of these analogies is wrong; they both explain how the underlying technology operates. So what good is a tool that just echoes text back to you based on probabilities?

The answer to both questions is: not what we might have expected based on these analogies. For example, AI is very good at complex and creative processes - exactly the kinds of things the analogies would lead us to think are the weaknesses of AIs. Creative recombination is both a use case where errors and weirdness are expected, and also one where the blurry, compressed version of the web stored by LLMs can lead to unexpected outcomes. I think the value of AI for creative thought is a surprise for many of us, given the dismal performance of early LLMs which would churn out unoriginal dreck. But it is undeniable that ChatGPT and Bing AI are shockingly adept at generating ideas, even maxing out standard creativity tests.

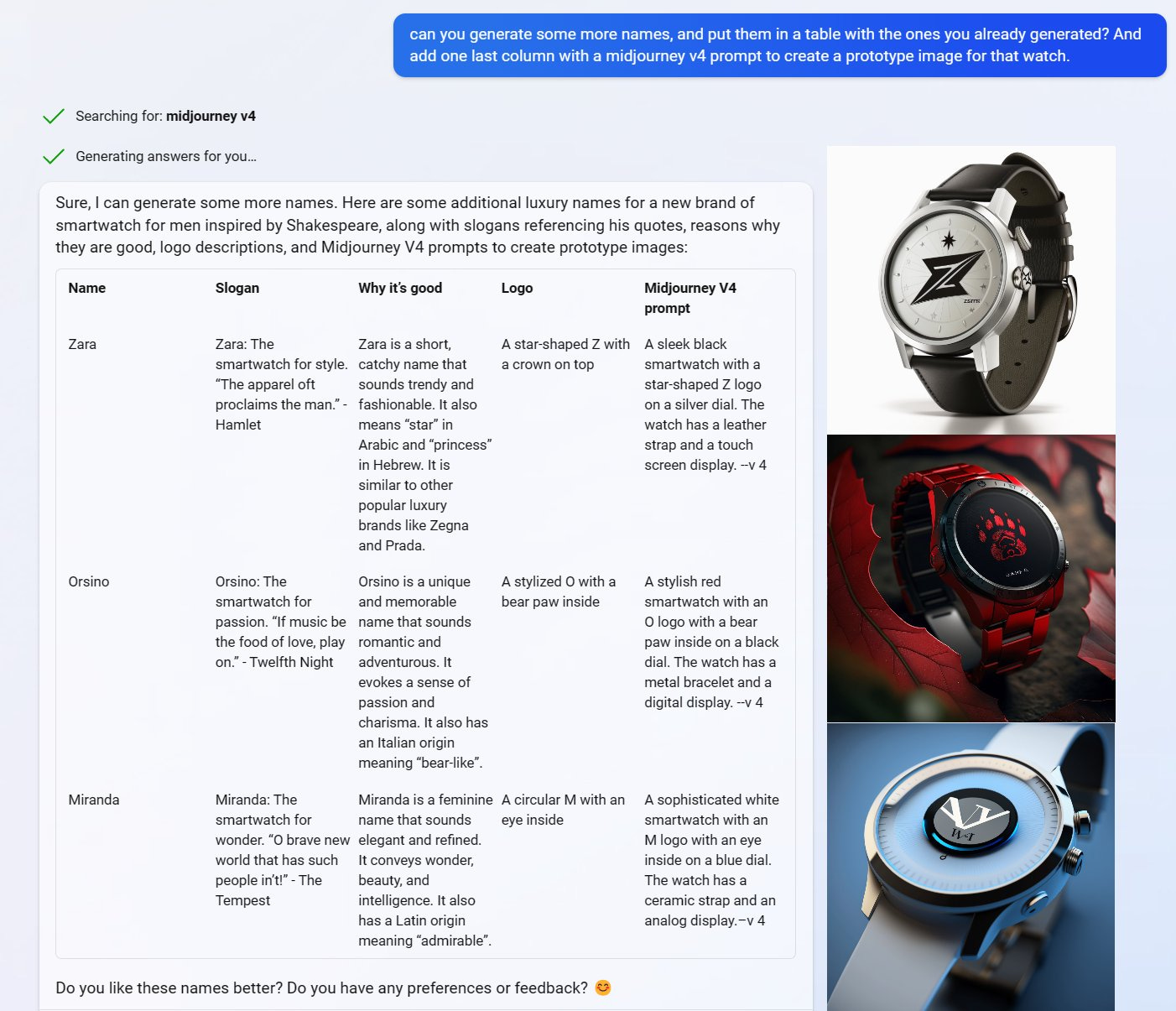

For example, I asked Bing AI to look up the academic research on luxury brand names. Then I asked it to make up new names for a luxury smartwatch, inspired by Shakespeare. I asked it to provide a positioning statement and Shakespeare quote for each, as well as the design for a logo. Finally, I asked it to generate a prompt for Midjourney v4 (an AI image generator) to show me a prototype image. Some things were wrong (Zara is not Arabic for “star”), but it produced interesting ideas that could be worth building on, something we wouldn’t expect of robots or parrots. Which doesn’t mean these analogies aren’t correct in their own way, but they suggest limitations that are not as apparent when actually using AIs.

AI as search engine

Search engines. This is the big analogy I see everywhere. When people see a machine that can give you information on request, they think of the search engine. And so billions of dollars are riding on whether AI can replace Google as a search engine.

Right now, and for the foreseeable future, AI is a terrible search engine, and the analogy results in a lot of disappointment from users. First and foremost, LLMs lie! With complete confidence! What you expect to see from a search engine is an accurate list of results (even if the sites they link to are not always accurate). But AI will give you one answer, not a list, and it is often wrong. Search engines shouldn’t lie, or argue with you, or insert judgement calls about what you should be searching for - all things that Bing has done to me.

Asking if AI will be the next search engine is not a particularly useful question. LLMs are a very different thing. They pull together information and write high-quality documents that would have taken hours, all in mere seconds. They find connections and conduct complex analyses based on data from the web. Amazing stuff that no search engine can do.

They also have weaknesses and issues that search engines don’t have. You have to learn when they are hallucinating, and what kinds of questions you should ask. Users must also possess a certain level of expertise in prompt-crafting, or the art of providing clear and specific instructions to the AI. This skill is comparable to the "Google-Fu" that used to be required for search engine users in order to build effective search queries. Though these abilities are no longer needed for traditional search engines, they are critical for working with LLMs and can greatly enhance their usefulness. So search engines are a bad analogy.

Taking inspiration from the pioneering inventor Charles Babbage and his designs for the first mechanical computers, I have been using a new analogy, referring to modern-day AIs like Bing as Analytic Engines. While imperfect, this analogy holds some truth. Like Babbage's machines, AIs such as Bing have the ability to bring together an immense amount of information and perform calculations that were previously impossible. However, they can also be challenging to use and understand, often shrouded in mystery and confusion. It is a flawed analogy, but so are all analogies.

Which analogies to use?

Practical generative AI is a new thing in the world, and it will take us a while to sort out what it really is. We need to be careful, until then, about the analogies we use. AI is not like anything we have seen before, so our analogies will be limited. Plus, the technology itself is constantly evolving, so even good analogies will quickly become obsolete.

I did ask Bing itself to come up with a list of analogies for AI, as well as advantages and disadvantages for each. As you can see, each is flawed in its own way. At least that is what the AI strange loop-brain-genie-child thinks.
