And the great gears begin to turn again...

Progress in two vital areas had slowed; AI could change that

With powerful cellphones connected to all the information in the world, you would think we would be in a scientific golden age, but, in reality, the march of progress has been stalling. The two engines of economic growth, productivity and innovation, have been mysteriously grinding to a halt in recent decades, with long-term consequences for all of us. But the rise of useful AI may be exactly what is needed to get the machine working again. And even at this early date, it is possible to hear the creaking of the great gears of progress starting to turn, as AI offers new solutions to otherwise intractable problems.

Science and Innovation

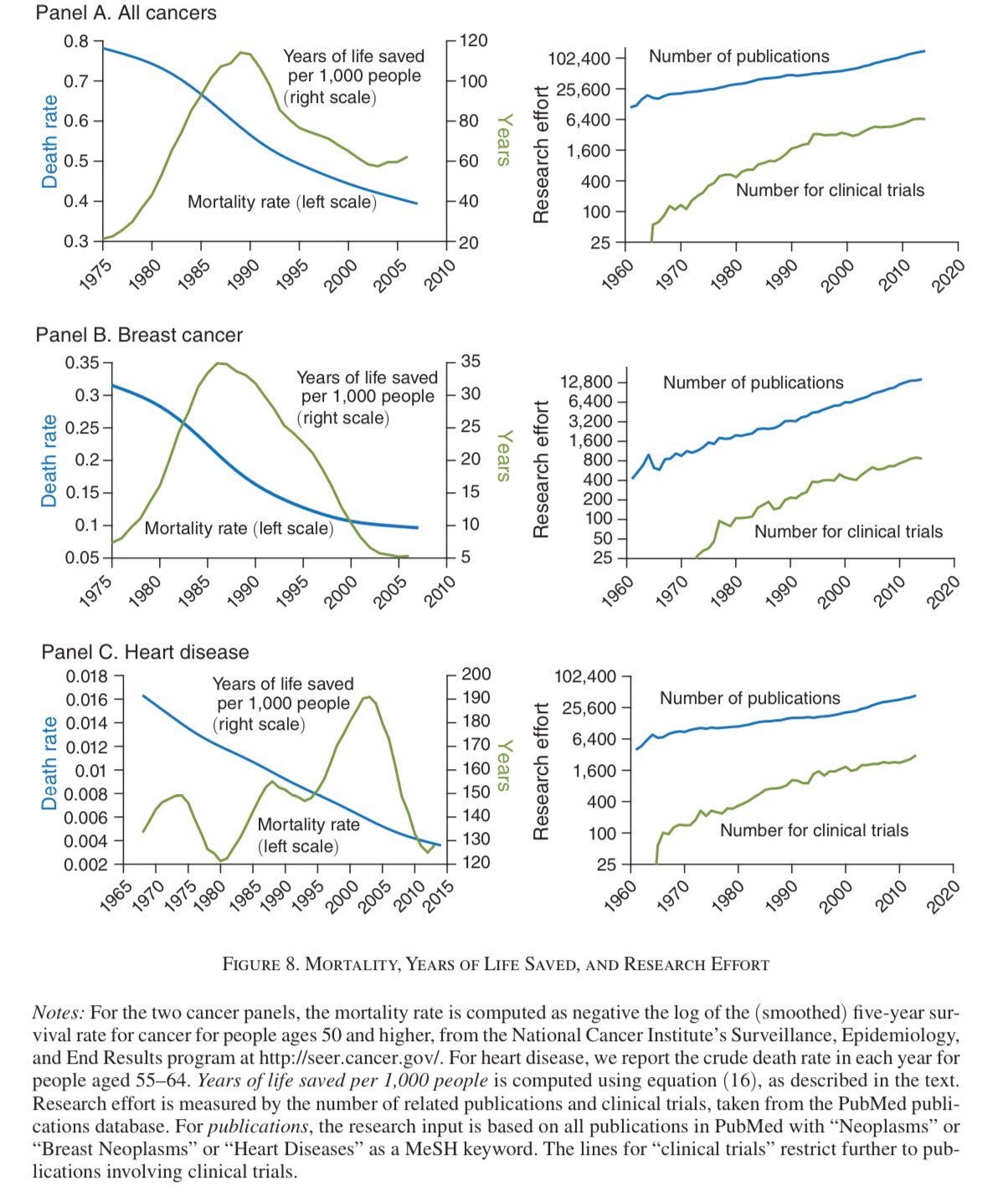

Innovation has been slowing alarmingly. A recent, convincing, and depressing paper found that the pace of invention is dropping in every field, from agriculture to cancer research: each advance in the state of the art now requires more researchers. In fact, research productivity (the number of new ideas produced per researcher) appears to be falling by half every 13 years. The paper ends: “to sustain constant growth in GDP per person, the US must double the amount of research effort every 13 years to offset the increased difficulty of finding new ideas.”
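As a rough back-of-the-envelope check, assuming the 13-year figure describes a smooth exponential trend (a simplification of the paper's estimates), halving every 13 years works out to roughly a 5% decline per year, and doubling research effort every 13 years means growing it by roughly 5.5% per year, year after year, just to stand still:

$$
(1+g)^{13} = 2 \quad\Rightarrow\quad g = 2^{1/13} - 1 \approx 0.055,
\qquad
2^{-1/13} \approx 0.948 \;(\approx 5.2\%\ \text{decline per year})
$$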

Part of the issue appears to be a growing problem with scientific research itself: there is too much of it. The burden of knowledge is increasing, in that a new scientist must learn more and more before having enough expertise to start doing research of their own. That appears to be why the age at which scientists and inventors achieve their moment of genius is increasing. Half of all pioneering contributions in science now happen after age 40, whereas breakthroughs used to come from younger scientists. To see the burden of knowledge at work, consider how much there is to learn about the existing state of even a narrow topic in science, like human metabolic pathways, as illustrated below.

Similarly, the rate at which STEM PhDs found startups has fallen 38% over the last 20 years. This paper finds that a key reason, again, is the burden of knowledge: science is growing so complex that PhD founders now need large teams and administrative support to make progress, so they go to big firms instead!

Thus, we have the paradox of our Golden Age of science, as illustrated in this paper. More research is being published by more scientists than ever, but the result is actually slowing progress! With too much to read and absorb, papers in more crowded fields are citing new work less, and canonizing highly-cited articles more. The paper ominously concludes: “These findings suggest troubling implications…. If too many papers are published in short order, new ideas cannot be carefully considered against old, and processes of cumulative advantage cannot work to select valuable innovations.”

But there are already signs that AI can help (even though some of the early efforts to summarize research have resulted in backlash due to the way they were implemented). A new paper demonstrates that using AI to analyze past papers can identify the most promising directions in science, ideally by combining human filtering with the AI software. And other work has found that AI shows considerable promise in performing literature reviews.

While specialized tools will likely do much more to advance the use of AI in research, many academics are already experimenting with AI as a way to automate tasks. In fact, part of the reason I am confident that AI has power as a summarization and analysis tool is that I am using it myself. For example, I trusted ChatGPT to generate a draft abstract for a new white paper (which, any academic will tell you, is a surprisingly annoying task). After I fed it the entire first part of our new paper, it did a very solid first pass at summarizing the important points.

Productivity

While the slowdown in science is worrying, there have been few trends as frustrating as the long-term slowdown in productivity. Productivity, the amount of output per worker in a given period of time, is the ultimate driver of progress: it lets us create more stuff in less time. This boosts both the economy and our quality of life. In 1865, the average British worker worked 124,000 hours over their life (similar to the US and Japan). By 1980, the average British worker put in only 69,000 hours of work over their life, despite living longer with a higher standard of living. We went from spending 50% of our lives working to 20%, and got richer besides, thanks to productivity growth.

But since 1980, productivity gains have stalled, and the slowdown has been especially bad recently. Despite the rise of software and internet access, the last decade has seen incredibly slow growth in productivity. That trend briefly reversed during a productivity boom in the pandemic, but growth has fallen back to an even slower long-term trajectory this year. And, frustratingly, no one has a clear explanation of the root cause.

Even without knowing the cause, there is something that might change the trajectory of productivity growth, and, if you have been reading this Substack, you will already guess that I am referring to generative AI. While the full dimensions of the value of this sort of AI system are unclear, early indications are that it can act as a shortcut for many different kinds of tasks. Much white-collar and managerial work still involves lots of time in Excel, PowerPoint, or Microsoft Word creating reports and documents, using tools that have been fundamentally unchanged for decades. AI offers the possibility of changing the nature of this work in a widespread and lasting way. Documents can be prepared quickly, designs can be rapidly prototyped, slide decks can be generated with a few clicks, and more. The old constraints on managerial work might change very quickly.

It is very early to say whether this is enough to break the productivity slowdown, but our clearest sign that it might is in programming. AI tools have been used extensively in programming over the past year, and they seem to make a big difference. In a recent randomized controlled trial of Copilot, an AI programming companion (conducted by Microsoft, which owns the tool), using AI cut the time required to complete a programming task roughly in half.

We are still very much at the start of any AI revolution, but early signs are encouraging. I am especially excited by the fact that AI helps with the pain points associated with the twin slowdowns in science (too much information) and productivity (too many analytical writing and programming tasks have been untouched by technological advances). If AI does prove to be helpful in innovation and productivity, it would help restart and sustain the engines of global growth, which would be good news for all of us.

Interesting read. I've just started thinking about this, so the thoughts may be a bit simplistic. I agree that there is so very much to learn in many fields. I once had a student who asked, "What's the least I have to do to earn an 'A'?" She had five classes and wanted an A in each. Time prevented her from learning deeply about each. That caused me to rethink what I was teaching, focus on key topics, and allow time for creative thinking and curiosity. The more content one tries to absorb, the less time they have to ponder possibilities, connections, similarities/differences, and "what if?"

I find that a lot of younger people (I'm 78, so nearly everyone at work is a younger person) are more interested in getting a job done than they are in why they are doing the job, whether there is a better way to do it, or how to make the product better. They want to be told how to do something. So, training departments create manuals, job aids, and workshops to teach them "best practices." That works fine but doesn't foster original thinking or creativity. And it seems that's okay; maybe that's all that's wanted.

Everybody is in such a rush. I would venture to say that more than 90% of the people reading this will say they have more work than they can handle or complete in a 40-hour workweek. And since productivity is a measure of units/time, our mentality is to do more in less time. And, Ta Da, overwork and stress.

One last thought: I read in your article that more and more scientific articles cite older or previous research (meta-analyses), which could uncover new ways to combine older ideas but more often is used to substantiate existing theories or concepts. As AI has proven, data mining is an idea-rich, innovation-rich process. But what about original research? The old, time-consuming way of learning and exploring? You know, original thinking?

I'll end with thoughts on video games... Video games are amazing! Wonderful graphics, captivating game mechanics, and incredible hardware. Wonderfully creative. But where does the creativity lie, with the gamer or the person(s) who design and create the game? Gamers play and get really good at it. They learn the nuances and subtleties built into the game. If I were to hire one or the other, I'd hire the designer. They are the thinkers, the creators, and the innovators. We have too many gamers and not enough designers.

I apologize for the simplistic nature of this response, but the article got me thinking and this is top-of-the-head stuff. I'd love to read your thoughts.

Great post. The way I put it is that the cost to creativity has declined to nearly zero. One thing that we'll see with the advent of all this generative AI output is new tools that help us curate the deluge of information. Summarization tools are one possibility. https://davefriedman.substack.com/p/chatgpt-enables-costless-creativity